DeepSeek-V3.1 Is Live on SambaCloud

August 25, 2025

Supercharging AI Agents with Function Calling on DeepSeek!

August 19, 2025

LLM-Judge for Multilingual Document Question Answering

April 2, 2025

Open-Source Deep Research Agents: Enterprise-Grade Speed, Security & Saving them Millions

March 10, 2025

SambaNova Cloud Launches the Fastest DeepSeek-R1 671B: Sign Up for Early Access

February 13, 2025

SambaNova Cloud Developer Tier Is Live

February 8, 2025

Now On SambaNova Cloud, Tülu 3 405B, A New Model Better than DeepSeek V3

February 4, 2025

Hugging Face Partners with SambaNova to Supercharge its Inference API Capabilities

January 28, 2025

Multi-Agent AI Workflows with CrewAI on SambaNova

January 15, 2025

Test-Time Compute Available on SambaNova Cloud with Qwen QwQ-32B-Preview

December 18, 2024

Meta Llama 3.3 70B Now Available Today for Developers and Enterprises

December 11, 2024

The SambaNova Startup Accelerator: Helping AI Innovators Realize Their Vision

December 10, 2024

Qwen 2.5 32B-Coder Available on SambaNova Cloud - 5X Faster than GPUs

December 6, 2024

Hugging Face Makes it Faster to Review Papers with SambaNova

December 4, 2024

How Gradio Makes Building Apps on SambaNova Cloud Super Easy

December 4, 2024

Zilliz: Powering AI RAG Applications with Vector Embeddings

December 3, 2024

Outperforming GPT-4o with Llama 3 8B: Domain Specific Fine Tuning for RAG

November 20, 2024

Correcting Common AI Benchmarking Errors with AI Starter Kits

November 11, 2024

Accelerating Coding with SambaNova Cloud

October 10, 2024

Developer Tips: Creating Valuable AI

October 3, 2024

Judging Judges: All that is LLM Judgements does not glitter

September 19, 2024

Replacing the Judge: Can Llama 405B Outperform GPT4 in the Court of AI?

September 19, 2024

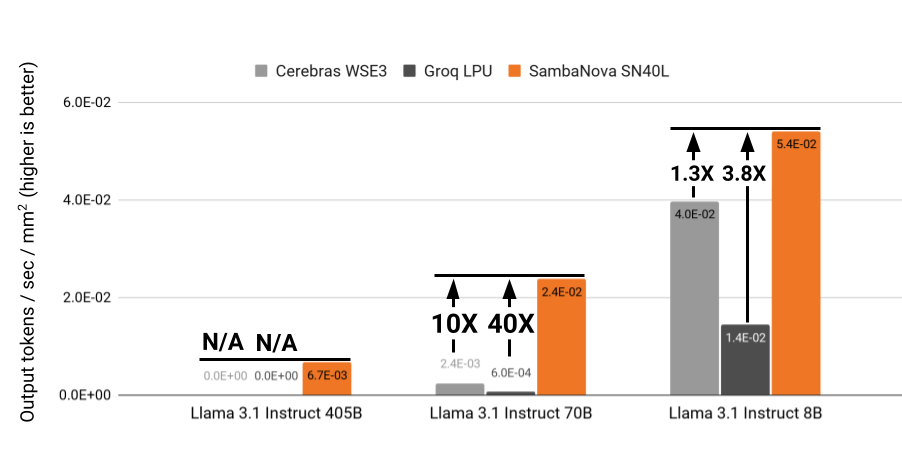

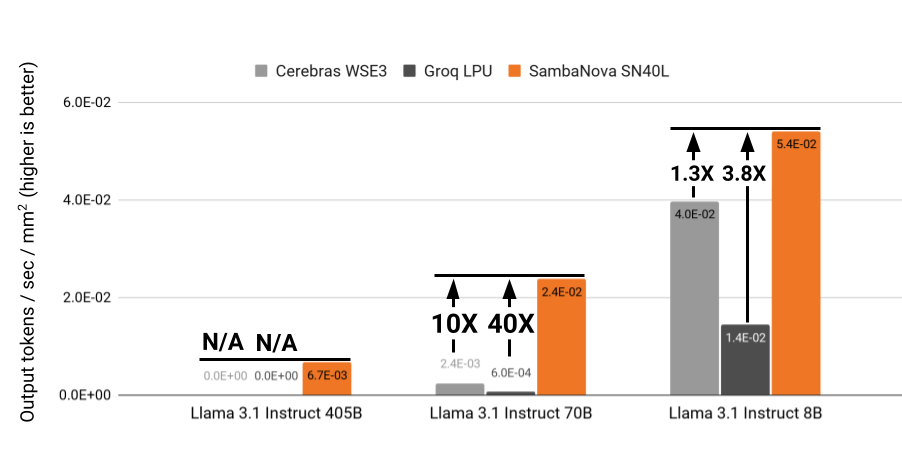

Advanced AI Apps Need Fast Inference. SambaNova Cloud Delivers It

September 10, 2024

Why SambaNova's SN40L Chip is The Best for Inference

September 10, 2024