SambaNova is excited to open source a collection of expert models that adapt Llama 2 [12] to a diverse set of 9 languages. The SambaLingo language expert models achieve state-of-the-art results on multilingual quantitative and qualitative benchmarks when compared to open source multilingual model baselines such as XGLM-7B [44], BLOOM-7B [45], mGPT-13B [46] and even expert models such as Jais-13B [36] and ELYZA-7b [33]. SambaNova is releasing two variants for each model: a base pretrained checkpoint and a chat version of the checkpoint, which aligns the model with human preference data using Direct Preference Optimization (DPO) [2]. These Arabic, Thai, Turkish, Japanese, Hungarian, Russian, Bulgarian, Serbian and Slovenian language experts can be found on SambaNova’s Hugging Face page or linked directly in Appendix A.

While large language models such as Llama 2 have gained widespread popularity, there remains a wide gap in their capabilities between English and other languages. To combat this, models like BLOOM [42], XGLM [43], and AYA [6] have been trained to be multilingual; however, their performance in other languages still falls short of the state-of-the-art standards. Consequently, the majority of the world is left without access to high quality open source AI models in their native tongue. This work shows that English centric language models such as Llama 2 can be adapted to any new language and outperform all existing open source multilingual models on a majority of benchmarks. Additionally, we develop a recipe for aligning the adapted checkpoints for effective responses to user queries in the adapted language, leveraging human preference data. Our results demonstrate a preference for our models' responses over open-source alternatives, and we welcome everyone to try these models by visiting SambaLingo-chat-space.

Evaluation

We measure the models’ capability on the new languages with a mix of canonical multilingual NLP benchmarks, including evaluation perplexity, translation, question answering, text classification, and natural language understanding. We do not include AYA-101 [6] as a baseline in these benchmarks because it is an instruction tuned checkpoint, and many of the benchmarks are contaminated in its training data. Also, to test the English capability of the model after bilingual training, we evaluate the models on OpenLLM Leaderboard [23]. Lastly, for the chat version of the models, we test their ability with prompt datasets written in the native language and use GPT-4 as a judge.

Quantitative Evaluation

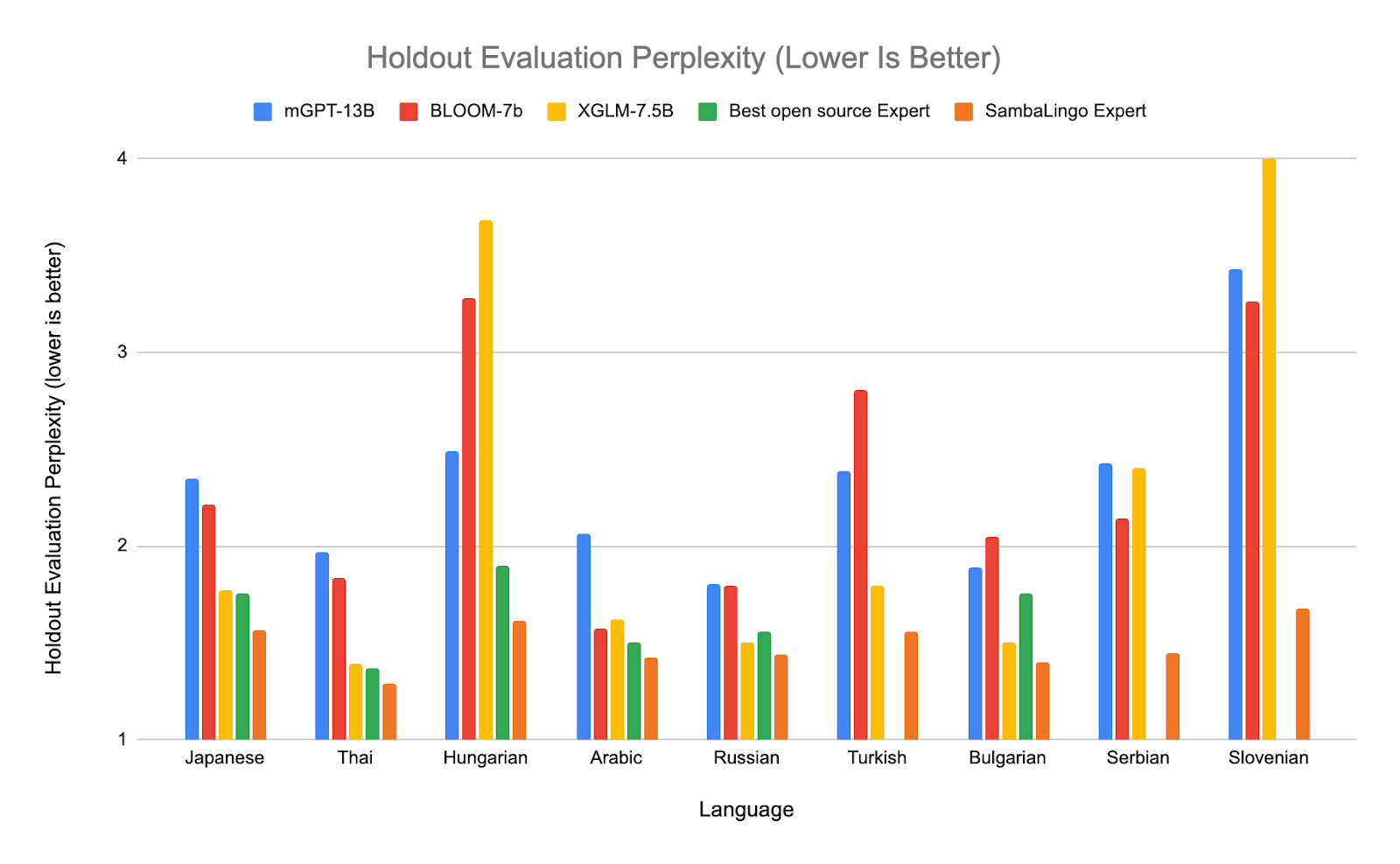

Evaluation Perplexity

We report perplexity on a holdout set of training text, Wikipedia [21], and a random sample of MC4 [22]. Perplexity on all three datasets gives approximately the same result, so below we show the perplexity on the holdout training data. All evaluation is done with EleutherAI’s lm-eval-harness [13]. Our models achieve state-of-the-art perplexity compared to every open source baseline.
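As a reminder of what the metric measures, the sketch below computes token-level perplexity as the exponential of the mean negative log-likelihood. The function name and the sample log-probabilities are illustrative assumptions; lm-eval-harness may normalize differently (for example per word or per byte), so this is not a reproduction of the reported numbers.

```python
import math

def perplexity(token_logprobs):
    """Perplexity = exp of the mean negative log-likelihood per token."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)

# Hypothetical natural-log probabilities a model assigned to four tokens.
example_logprobs = [math.log(0.5), math.log(0.25), math.log(0.5), math.log(0.25)]
ppl = perplexity(example_logprobs)
```

Lower values mean the model assigns higher probability to the held-out text.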

Open Source Expert Baselines: Japanese: ELYZA-japanese-Llama-2-7b [33], Thai: typhoon-7b [34], Arabic : jais-13b [36], Hungarian: NYTK/PULI-GPTrio [35], Russian: saiga_mistral_7b_merged [37], Turkish: TURNA [38], Bulgarian: mGPT-1.3B-bulgarian [39], Serbian: sr-gpt2 [40], Slovenian: sl-gpt2 [41]

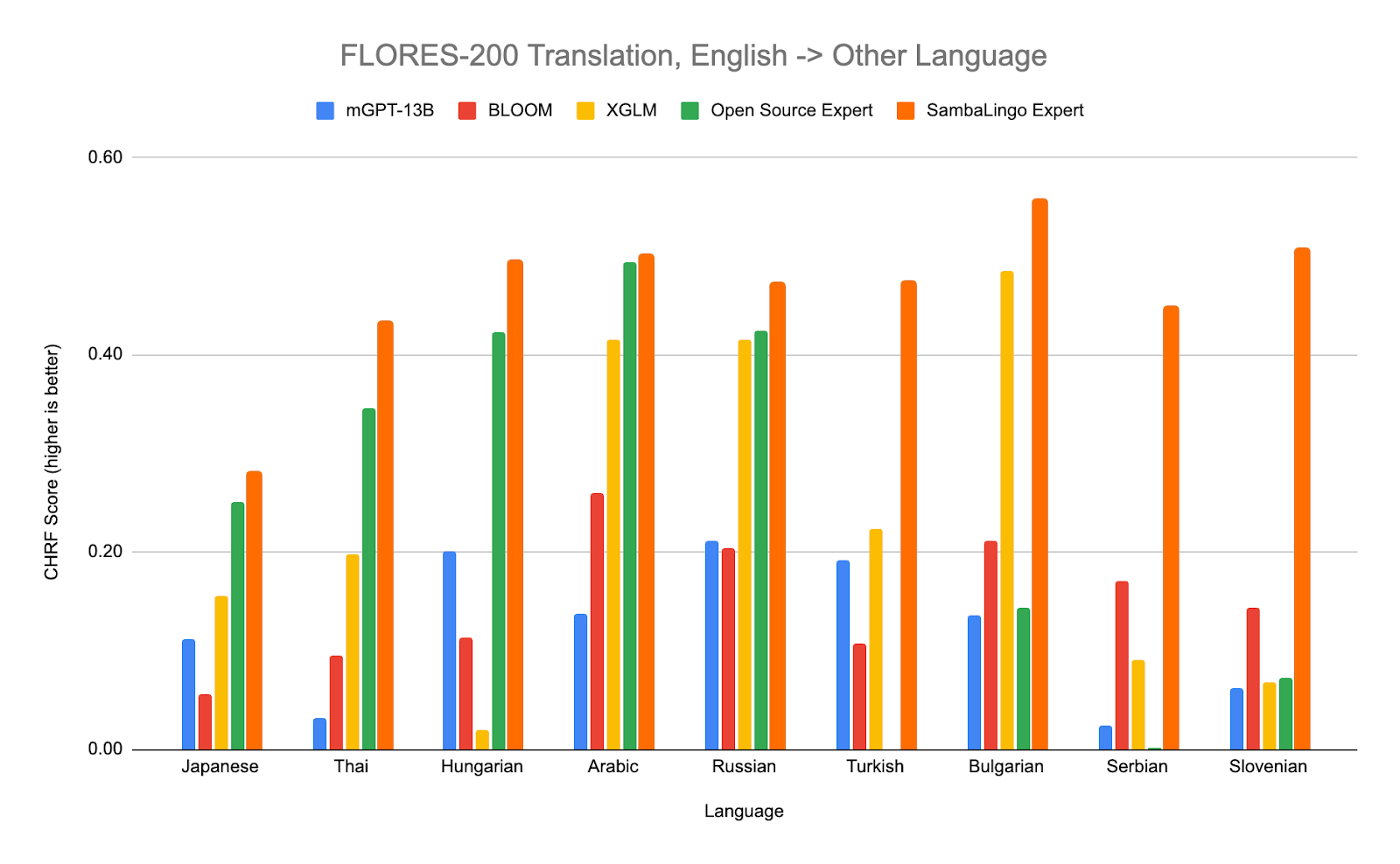

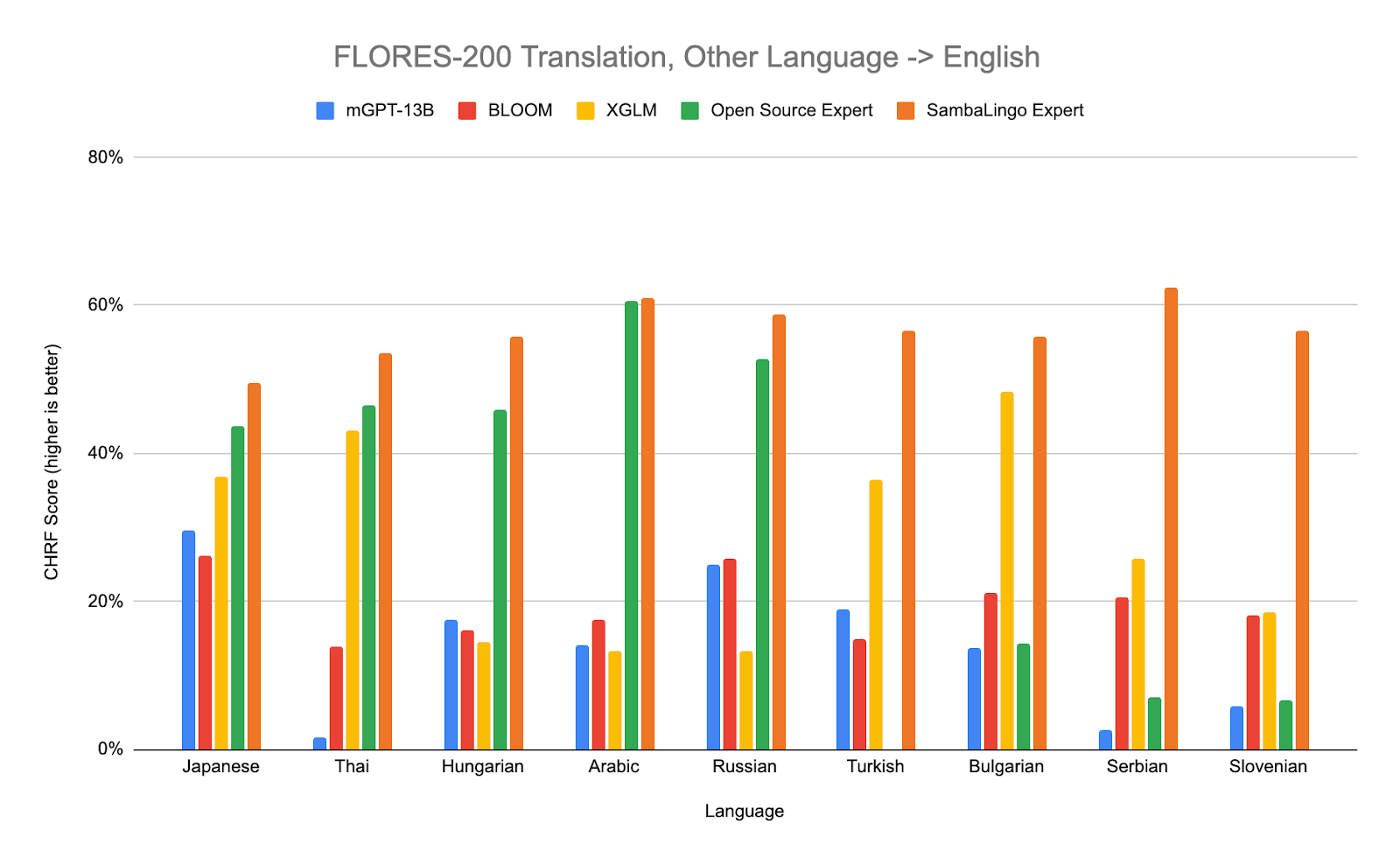

Translation - FLORES-200

All SambaLingo expert models are bilingual (English and the expert language), and they exhibit state-of-the-art performance on translation tasks. We evaluate our base pretrained checkpoints on the FLORES-200 dataset [15] with 8-shot evaluation using the ‘{IN}={OUT}’ prompt as recommended by Zhu et al. [14].
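To make the ‘{IN}={OUT}’ template concrete, here is a minimal sketch of how a few-shot translation prompt could be assembled. The helper name, the newline separator between shots, and the two sample pairs are assumptions for illustration; the actual evaluation uses 8 shots drawn from FLORES-200.

```python
def build_translation_prompt(examples, source_sentence):
    """Format few-shot pairs as '{IN}={OUT}' lines, ending with the open query."""
    lines = [f"{src}={tgt}" for src, tgt in examples]
    # The final line leaves the target side empty for the model to complete.
    lines.append(f"{source_sentence}=")
    return "\n".join(lines)

# Hypothetical English -> Hungarian shots (2 shown here instead of 8).
shots = [("Good morning.", "Jó reggelt."), ("Thank you.", "Köszönöm.")]
prompt = build_translation_prompt(shots, "Good night.")
```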

Open Source Expert Baselines: Japanese: ELYZA-japanese-Llama-2-7b [33], Thai: typhoon-7b [34], Arabic : jais-13b [36], Hungarian: NYTK/PULI-GPTrio [35], Russian: saiga_mistral_7b_merged [37], Turkish: TURNA [38], Bulgarian: mGPT-1.3B-bulgarian [39], Serbian: sr-gpt2 [40], Slovenian: sl-gpt2 [41]

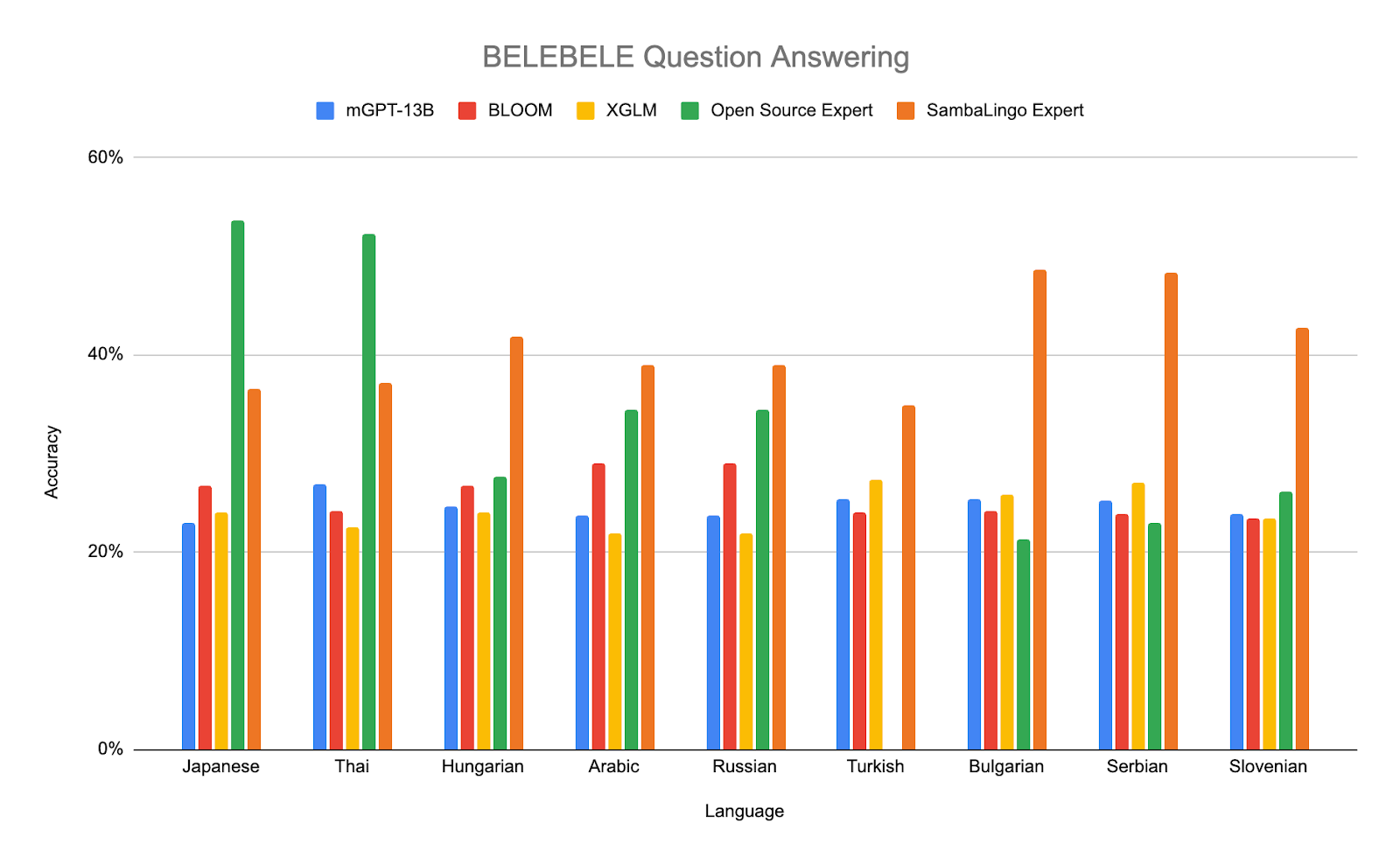

Multiple Choice Question Answering - BELEBELE

We measure the model's ability to answer multiple choice questions using the BELEBELE dataset [16]. We perform 3-shot evaluation on our base checkpoints with the default prompt from the BELEBELE GitHub repo [17] and select the answer with the lowest perplexity.
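Selecting the answer with the lowest perplexity can be sketched as follows. The function name and the per-token log-probabilities are hypothetical; in practice they would come from scoring each candidate answer with the model after the few-shot prompt.

```python
import math

def pick_answer(choice_logprobs):
    """Return the index of the choice with the lowest per-token perplexity."""
    def ppl(logprobs):
        return math.exp(-sum(logprobs) / len(logprobs))
    return min(range(len(choice_logprobs)), key=lambda i: ppl(choice_logprobs[i]))

# Hypothetical log-probabilities for the tokens of four candidate answers.
scores = [
    [-2.1, -1.9],
    [-0.4, -0.6, -0.5],
    [-1.2],
    [-3.0, -2.5],
]
best = pick_answer(scores)
```

Averaging over tokens before exponentiating keeps longer answers from being penalized simply for their length.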

Open Source Expert Baselines: Japanese: ELYZA-japanese-Llama-2-7b [33], Thai: typhoon-7b [34], Arabic : jais-13b [36], Hungarian: NYTK/PULI-GPTrio [35], Russian: saiga_mistral_7b_merged [37], Turkish: TURNA [38], Bulgarian: mGPT-1.3B-bulgarian [39], Serbian: sr-gpt2 [40], Slovenian: sl-gpt2 [41]

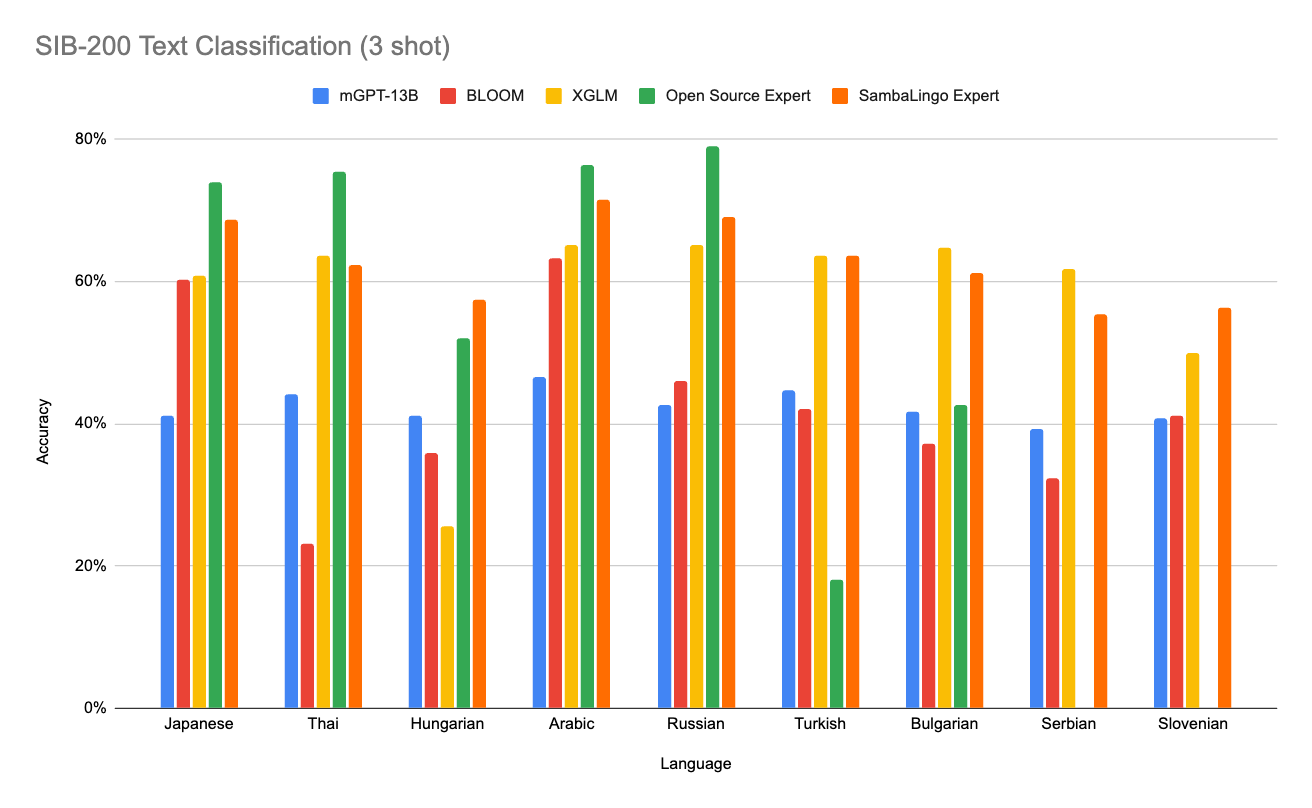

Text Classification - SIB-200

Text classification is a task that asks the model to categorize a piece of text. We evaluate the base pretrained language experts on the SIB-200 benchmark using the prompt recommended by Lin et al. [18] with a 3-shot context and select the answer with the lowest perplexity.

Open Source Expert Baselines: Japanese: ELYZA-japanese-Llama-2-7b [33], Thai: typhoon-7b [34], Arabic : jais-13b [36], Hungarian: NYTK/PULI-GPTrio [35], Russian: saiga_mistral_7b_merged [37], Turkish: TURNA [38], Bulgarian: mGPT-1.3B-bulgarian [39], Serbian: sr-gpt2 [40], Slovenian: sl-gpt2 [41]

Natural Language Understanding - XNLI, XWinograd, PAWS-X, XCOPA, XStoryCloze

Natural language understanding helps show the model's ability to grasp the meaning, context, and nuances inherent in human language. We evaluate using XNLI [47], XWinograd [49], PAWS-X [51], XCOPA [48], XStoryCloze [43] multilingual benchmarks from lm-eval-harness [13] with 0 shot context.

| Language | mGPT-13B | BLOOM | XGLM | Open Source Expert | SambaLingo Expert |
| --- | --- | --- | --- | --- | --- |
| Arabic | 0.516 | 0.585 | 0.562 | 0.633 | 0.662 |
| Russian | 0.594 | 0.527 | 0.562 | 0.690 | 0.717 |

| Language | mGPT-13B | BLOOM | XGLM | Open Source Expert | SambaLingo Expert |
| --- | --- | --- | --- | --- | --- |
| Japanese | 0.578 | 0.589 | 0.650 | 0.776 | 0.766 |
| Russian | 0.600 | 0.571 | 0.632 | 0.667 | 0.692 |

| Language | mGPT-13B | BLOOM | XGLM | Open Source Expert | SambaLingo Expert |
| --- | --- | --- | --- | --- | --- |
| Thai | 0.528 | 0.554 | 0.594 | 0.606 | 0.614 |
| Turkish | 0.568 | 0.512 | 0.584 | 0.558 | 0.694 |

| Language | mGPT-13B | BLOOM | XGLM | Open Source Expert | SambaLingo Expert |
| --- | --- | --- | --- | --- | --- |
| Japanese | 0.452 | 0.454 | 0.520 | 0.505 | 0.468 |

| Language | mGPT-13B | BLOOM | XGLM | Open Source Expert | SambaLingo Expert |
| --- | --- | --- | --- | --- | --- |
| Thai | 0.392 | 0.349 | 0.437 | 0.430 | 0.447 |
| Arabic | 0.334 | 0.338 | 0.334 | 0.363 | 0.336 |
| Russian | 0.454 | 0.426 | 0.334 | 0.498 | 0.353 |
| Turkish | 0.387 | 0.350 | 0.462 | 0.384 | 0.339 |
| Bulgarian | 0.458 | 0.394 | 0.449 | 0.338 | 0.428 |

Open Source Expert Baselines: Japanese: ELYZA-japanese-Llama-2-7b [33], Thai: typhoon-7b [34], Arabic : jais-13b [36], Hungarian: NYTK/PULI-GPTrio [35], Russian: saiga_mistral_7b_merged [37], Turkish: TURNA [38], Bulgarian: mGPT-1.3B-bulgarian [39], Serbian: sr-gpt2 [40], Slovenian: sl-gpt2 [41]

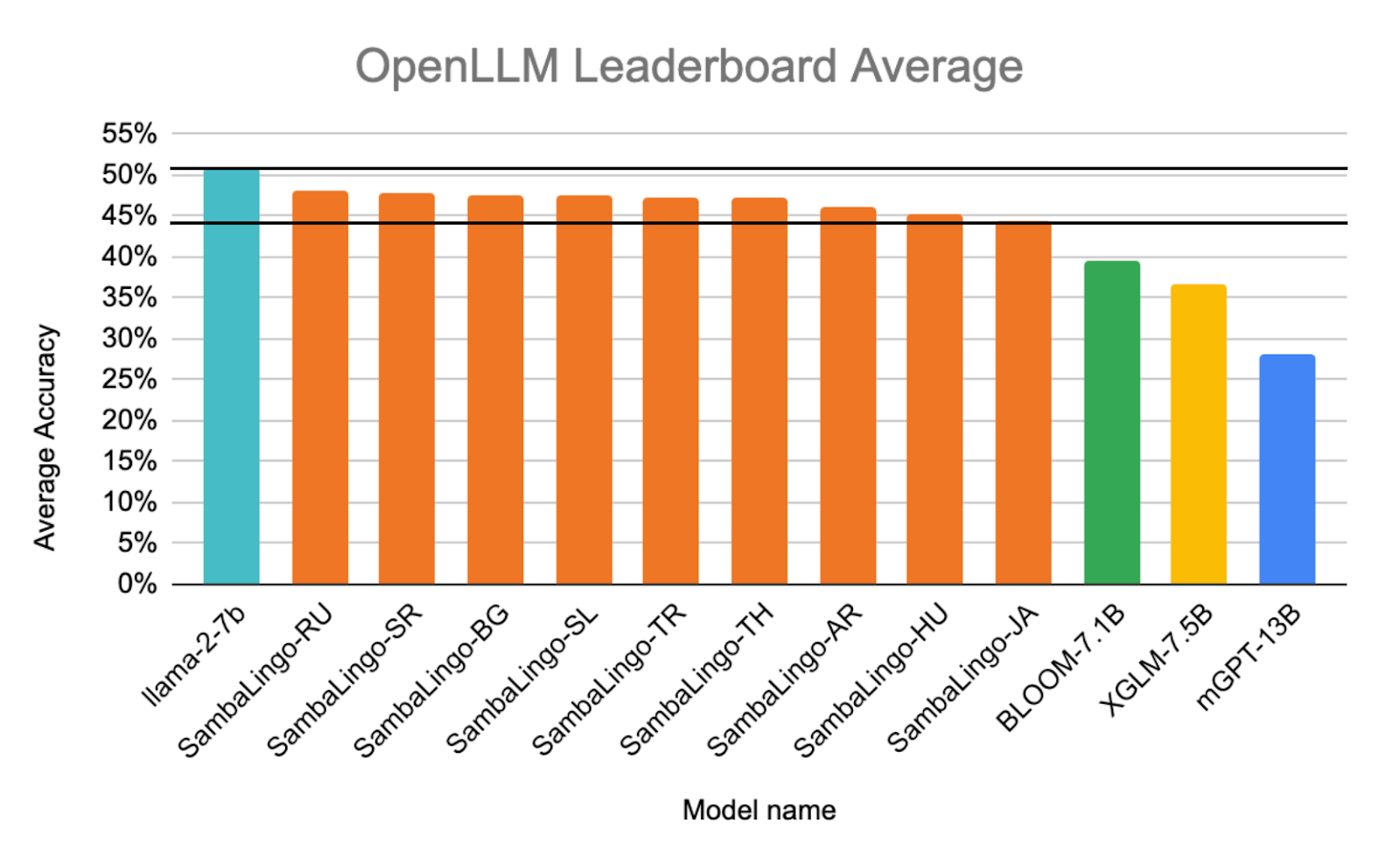

English - OpenLLM Leaderboard

To test how well our models retain their English capabilities after being adapted to new languages, we evaluate them on the standard OpenLLM leaderboard benchmarks [23]. We use the same few-shot counts as the OpenLLM leaderboard repository. We find that our models regress relative to the base Llama 2 model, but they still outperform existing multilingual model baselines in English.

Qualitative Evaluation

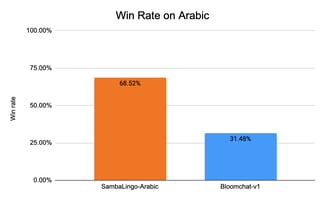

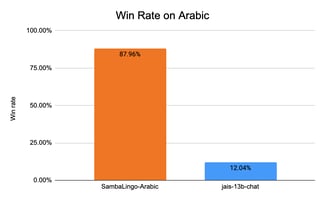

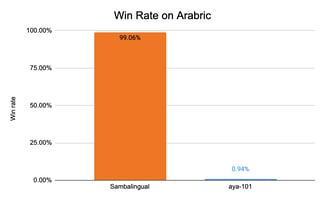

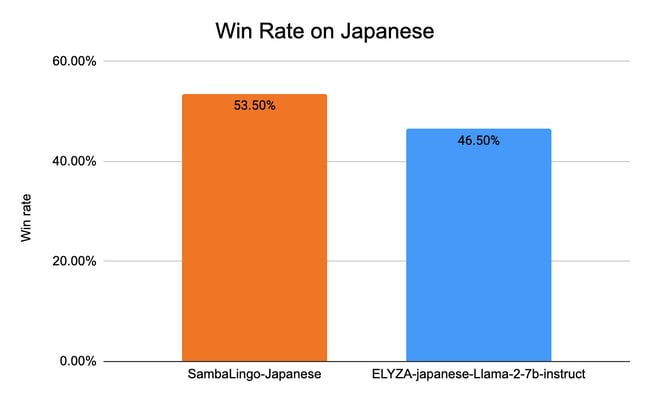

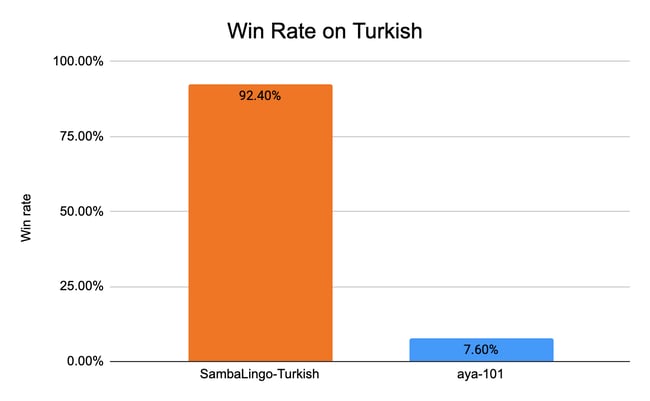

To test SambaLingo-Chat’s ability to generate high quality responses to real user prompts, we measure the win rate with GPT-4 as a judge [19][20]. We test SambaLingo-Chat on Arabic, Japanese, and Turkish and evaluate the win rate against the best open source models for those languages. We did not cherry-pick the prompts or models we present in this section.

For Arabic, we compare against aya-101 [6], Jais-13b-chat [7], and Bloomchat-v1 [8]. We used the prompts from x-self-instruct-seed-32 [10] and xOA22 [11]. SambaLingo-Arabic-Chat reaches an 87.96% win rate against Jais-13b-chat, a 99.06% win rate against aya-101, and a 68.52% win rate against Bloomchat-v1.

For Japanese, we compare against ELYZA-japanese-Llama-2-7b-instruct [5]. We randomly sampled 100 prompts from the training set of aya_dataset [9]. SambaLingo-Japanese-Chat reaches a 53.5% win rate in the comparison.

For Turkish, we compare against aya-101 [6]. We used the prompts from the test set of aya_dataset [9]. SambaLingo-Turkish-Chat reaches a 92.4% win rate in the comparison.

Training Methodology

Continuous pretraining

Base Model - Llama 2

All of our models are continuously pretrained from the Llama 2 base model [12]. We run continuous pre-training for a total of 400 billion tokens across all the language experts, accelerated by SambaNova’s RDUs [24].

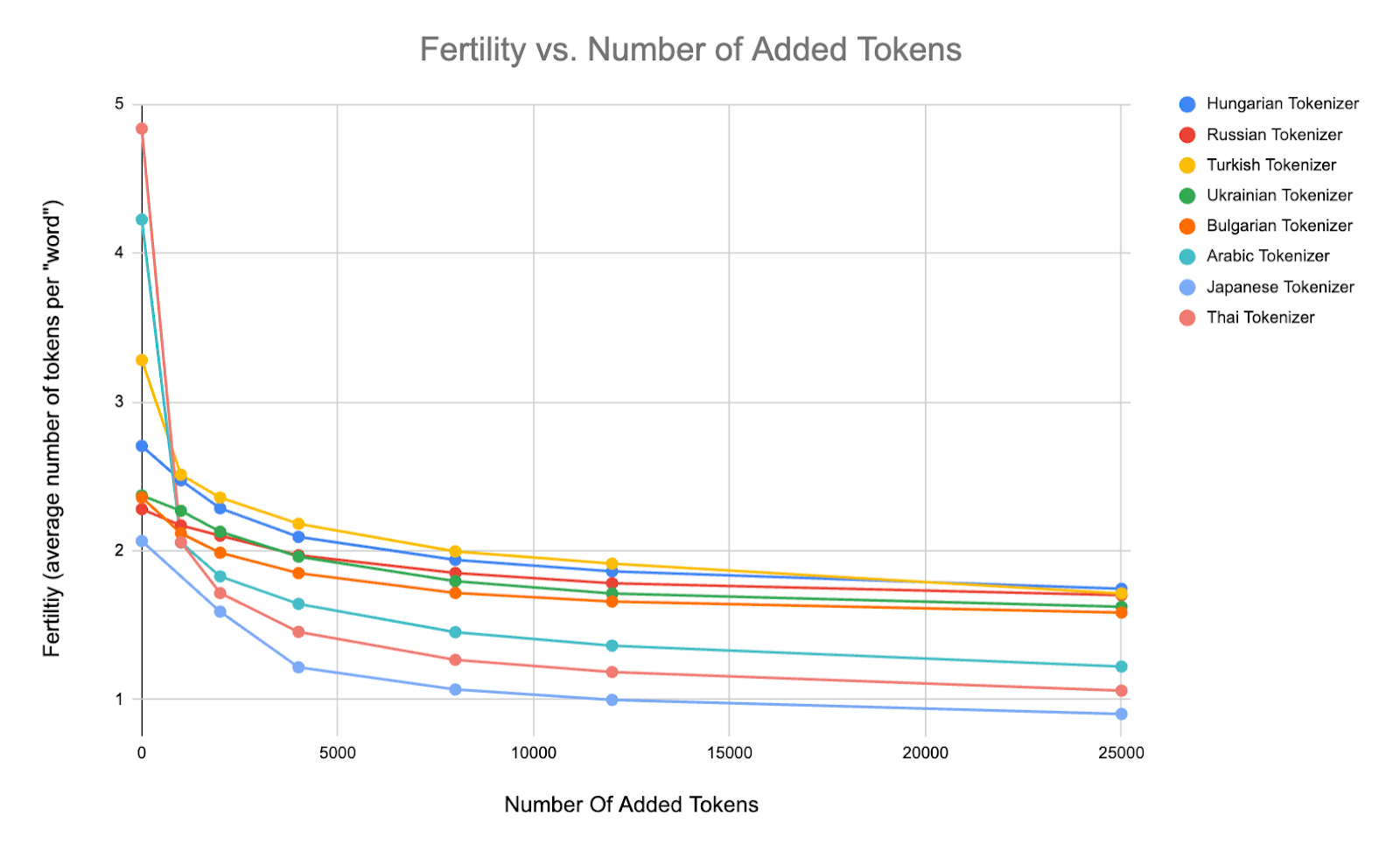

Vocabulary Extension

The Llama tokenizer is an English centric tokenizer, which means that it will not efficiently tokenize text in other languages. Previous work [25, 27] has shown that continuously pretrained models can learn newly added tokens. A tokenizer with added tokens allows more text to be packed into fewer tokens, so this gives our model improved training/inference efficiency and a longer effective sequence length. The plot below shows how the tokenizer fertility (average number of tokens per “word”) [28] improves as more tokens are added in each language. In some languages, such as Thai, it improves by as much as 4x.

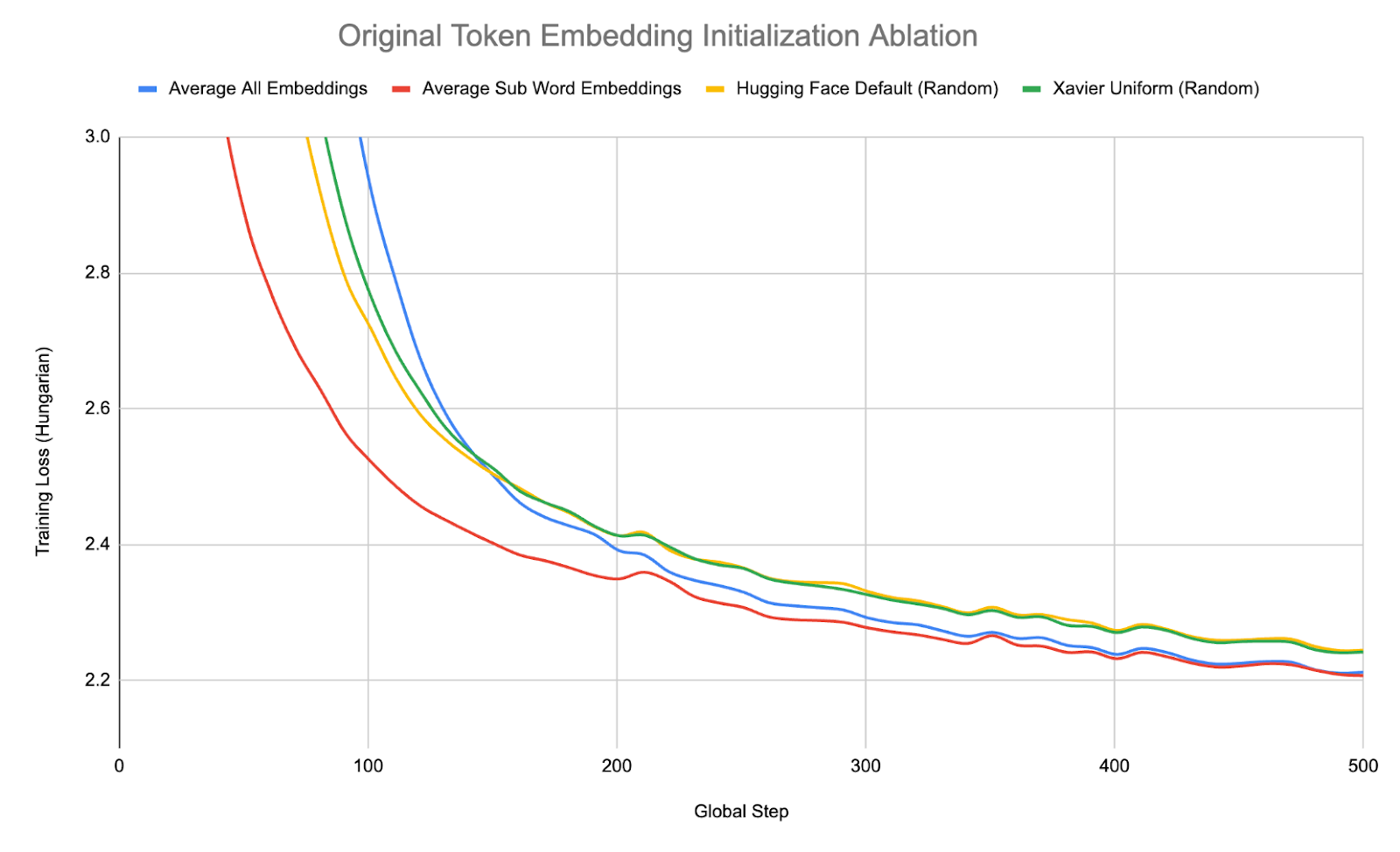
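Tokenizer fertility can be measured with a few lines of code. The sketch below is an assumption-laden illustration: it splits words on whitespace (which does not hold for languages such as Thai or Japanese, where a proper word segmenter would be needed) and uses toy tokenizers in place of the original and extended Llama tokenizers.

```python
def fertility(texts, tokenize):
    """Average number of tokens produced per whitespace-separated word."""
    n_words = sum(len(t.split()) for t in texts)
    n_tokens = sum(len(tokenize(t)) for t in texts)
    return n_tokens / n_words

# Toy stand-ins for real tokenizers: one emits characters, one emits whole words.
def char_tokenizer(text):
    return [c for c in text if not c.isspace()]

def word_tokenizer(text):
    return text.split()

corpus = ["abcd efgh", "ij kl"]
before = fertility(corpus, char_tokenizer)  # 12 tokens over 4 words
after = fertility(corpus, word_tokenizer)   # 4 tokens over 4 words
```

A lower fertility means the same text fits into fewer tokens, which is where the efficiency and effective sequence length gains come from.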

We extended the vocabulary of the base llama model from 32,000 tokens to 57,000 tokens by adding up to 25,000 non-overlapping tokens from the new language. In order to initialize the embeddings of these newly added tokens, we experiment with various initialization methods:

- Hugging Face default: a normal initialization with mean 0 and standard deviation 0.02

- Xavier Uniform: A uniform distribution, with a range defined by the size of the new embedding [31]

- Average all: Initialize all new embeddings with the mean of all previous embeddings [52]

- Average subword: For each new token t, let L_t = [t1,...,tk] be the list of k tokens that t would have been tokenized as under the original tokenizer. Initialize the embedding E(t) with mean(E(L_t)) = mean([E_t1,...,E_tk]) [29, 30]

We run a training ablation with the above methods for 20 million tokens, and find that initializing by averaging the token sub-words has the lowest training loss, so we initialize all of our new token embeddings using this method. We further find that it helps to initialize the LM head embeddings in the same fashion, as Llama 2 does not tie its token embedding and LM head weights.
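The average-subword initialization can be sketched in plain Python as follows. The function name, the 2-dimensional embedding table, and the character-level tokenizer are hypothetical stand-ins for the original Llama tokenizer and embedding matrix; this is an illustration of the idea, not the training code.

```python
def init_new_embedding(new_token, old_tokenize, old_embeddings):
    """Mean of the embeddings of the pieces the original tokenizer yields for new_token."""
    pieces = old_tokenize(new_token)
    dim = len(old_embeddings[pieces[0]])
    return [
        sum(old_embeddings[p][d] for p in pieces) / len(pieces)
        for d in range(dim)
    ]

# Hypothetical embedding table and a toy character-level "original" tokenizer.
old_embeddings = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [2.0, 2.0]}

def old_tokenize(token):
    return list(token)

new_vector = init_new_embedding("abc", old_tokenize, old_embeddings)
```

Because Llama 2 does not tie its token embedding and LM head weights, the same averaging would be applied separately to the rows of the LM head.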

Training Details

All pre-training is done on the CulturaX dataset [26]. We mix the data to be 75% data from the language we are adapting to, and 25% English as suggested by Csaki et al. [25]. We pack the data into sequences of length 4096, and ensure that when learning a token we only attend to previous tokens in the context of the corresponding text document. We train with a global batch size of 1024, sequence length of 4096, maximum learning rate of 1e-4 with cosine decay, warmup ratio of 0.01 and a weight decay of 0.1. We train each expert for up to 4 epochs [32], but as there is a varying amount of data for each language, we do not reach 4 epochs for most training runs.
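The attention restriction for packed sequences amounts to a causal mask that is also block-diagonal over documents. The sketch below builds such a mask from document lengths; the function name and the nested-list representation are assumptions chosen for readability rather than how the training stack implements it.

```python
def packed_causal_mask(doc_lengths):
    """mask[i][j] is True when position i may attend to position j."""
    # Label every position in the packed sequence with its document index.
    doc_ids = [d for d, n in enumerate(doc_lengths) for _ in range(n)]
    size = len(doc_ids)
    return [
        [j <= i and doc_ids[i] == doc_ids[j] for j in range(size)]
        for i in range(size)
    ]

# Two documents of lengths 2 and 3 packed into one sequence of length 5.
mask = packed_causal_mask([2, 3])
```

Position 2 (the first token of the second document) cannot see positions 0 and 1, so tokens never attend across document boundaries.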

Alignment

The alignment phase follows the recipe for Zephyr-7B [1], and comprises two stages: supervised fine-tuning (SFT) and Direct Preference Optimization (DPO) [2].

The SFT phase was done on the ultrachat_200k dataset [3] mixed with the Google translated version of the ultrachat_200k dataset. It was trained for one epoch with global batch size 512 and max sequence length 2048 tokens. We used a linear decay learning rate of 2e-5 and 10% warmup.
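For readers unfamiliar with this schedule, the sketch below shows one common reading of "linear decay with 10% warmup". The linear warmup from zero, the decay to zero at the final step, and the step counts are assumptions; only the 2e-5 peak and the 10% warmup ratio come from the text.

```python
def linear_schedule(step, total_steps, peak_lr=2e-5, warmup_ratio=0.1):
    """Learning rate at `step`: linear warmup to peak_lr, then linear decay to 0."""
    warmup_steps = int(total_steps * warmup_ratio)
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    remaining = max(total_steps - step, 0)
    return peak_lr * remaining / (total_steps - warmup_steps)

# Hypothetical run of 1000 optimizer steps.
lr_mid_warmup = linear_schedule(50, 1000)
lr_peak = linear_schedule(100, 1000)
lr_mid_decay = linear_schedule(550, 1000)
lr_end = linear_schedule(1000, 1000)
```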

The DPO phase was done on the ultrafeedback dataset [4] and cai-conversation-harmless dataset [50], mixed with 10% of the data Google translated. It was trained with global batch size 32 and for three epochs. We used a linear decay learning rate of 5e-7, 10% warmup and β=0.1 as the regularization factor for DPO.
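The role of β can be seen in the DPO objective for a single preference pair, sketched below following the formulation in the DPO paper [2]. The function name and the example log-probabilities are hypothetical; inputs are summed sequence log-probabilities under the policy being trained and under the frozen reference (SFT) model.

```python
import math

def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one preference pair, given summed sequence log-probabilities."""
    # How much more the policy prefers the chosen response than the reference does.
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    x = beta * margin
    # -log(sigmoid(x)) computed as a numerically stable softplus(-x).
    return max(-x, 0.0) + math.log1p(math.exp(-abs(x)))

# Hypothetical log-probabilities: the policy already favours the chosen response.
loss_at_init = dpo_loss(-12.0, -15.0, -12.0, -15.0)
loss_improved = dpo_loss(-10.0, -20.0, -12.0, -15.0)
```

When the policy equals the reference the loss is log 2; it falls as the policy raises the chosen response relative to the rejected one, with β controlling how strongly deviations from the reference are weighted.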

Acknowledgements

We extend our heartfelt gratitude to the open-source AI community; this endeavor would not have been achievable without open source. SambaNova embraces the open-source community and aspires to actively contribute to this initiative.

We would like to give special thanks to the following groups:

- Meta for open sourcing Llama 2 and the FLORES-200 dataset

- Nguyen et al. for open sourcing the CulturaX dataset

- CohereAI for releasing AYA-101 and open sourcing a multilingual instruction tuning dataset

- EleutherAI for their open source evaluation framework

- Hugging Face H4 team for open sourcing the Zephyr training recipe and the alignment handbook repo

Appendix A - Links to Hugging Face Models

https://huggingface.co/sambanovasystems/SambaLingo-Arabic-Base

https://huggingface.co/sambanovasystems/SambaLingo-Arabic-Chat

https://huggingface.co/sambanovasystems/SambaLingo-Bulgarian-Base

https://huggingface.co/sambanovasystems/SambaLingo-Bulgarian-Chat

https://huggingface.co/sambanovasystems/SambaLingo-Hungarian-Base

https://huggingface.co/sambanovasystems/SambaLingo-Hungarian-Chat

https://huggingface.co/sambanovasystems/SambaLingo-Japanese-Base

https://huggingface.co/sambanovasystems/SambaLingo-Japanese-Chat

https://huggingface.co/sambanovasystems/SambaLingo-Russian-Base

https://huggingface.co/sambanovasystems/SambaLingo-Russian-Chat

https://huggingface.co/sambanovasystems/SambaLingo-Slovenian-Base

https://huggingface.co/sambanovasystems/SambaLingo-Slovenian-Chat

https://huggingface.co/sambanovasystems/SambaLingo-Serbian-Base

https://huggingface.co/sambanovasystems/SambaLingo-Serbian-Chat

https://huggingface.co/sambanovasystems/SambaLingo-Thai-Base

https://huggingface.co/sambanovasystems/SambaLingo-Thai-Chat

https://huggingface.co/sambanovasystems/SambaLingo-Turkish-Base

https://huggingface.co/sambanovasystems/SambaLingo-Turkish-Chat

Appendix B - Example Generations

تخيل عالم فيه السفر عبر الزمن أصبح حقيقة. ماذا يمكن أن يحدث في هذا العالم وما هي المشاكل الأخلاقية التي يجب أن نأخذها في الاعتبار

(Imagine a world where time travel became a reality. What could happen in this world and what ethical problems should we take into account?)

إن عالم السفر عبر الزمن الذي أصبح حقيقة من شأنه أن يجلب العديد من الاحتمالات المثيرة، ولكنه قد يؤدي أيضًا إلى مشاكل أخلاقية كبيرة. فيما يلي بعض النتائج المحتملة:

1. تغيير الماضي:

أحد الاحتمالات هو أن يتمكن المسافرون عبر الزمن من تغيير الماضي، مما قد يكون له عواقب غير متوقعة. على سبيل المثال، إذا قام شخص ما بتغيير حدث مهم في التاريخ، فقد يؤدي ذلك إلى تغيير مسار التاريخ بأكمله.

2. المفارقات:

قد يؤدي السفر عبر الزمن أيضًا إلى ظهور مفارقات، حيث يمكن أن يؤدي إجراء واحد إلى سلسلة من ردود الفعل التي تؤدي إلى نتائج غير مقصودة. على سبيل المثال، إذا قام شخص ما بتغيير حدث مهم في التاريخ، فقد يؤدي ذلك إلى نتائج مختلفة في المستقبل، مما يؤدي إلى سلسلة من ردود الفعل التي تؤدي إلى نتائج غير مقصودة.

3. المسؤولية:

إذا أصبح السفر عبر الزمن حقيقة، فإن المسؤولية عن أفعال الماضي ستصبح قضية مهمة. على سبيل المثال، إذا قام شخص ما بتغيير حدث مهم في التاريخ، فهل سيكون مسؤولاً عن العواقب التي تلت ذلك؟

4. الأخلاق:

قد يؤدي السفر عبر الزمن أيضًا إلى مشاكل أخلاقية. على سبيل المثال، إذا تمكن شخص ما من العودة بالزمن إلى الوراء ومنع وقوع حدث مأساوي، فهل سيكون من الأخلاقي القيام بذلك؟

5. السرية:

قد يؤدي السفر عبر الزمن أيضًا إلى مشاكل تتعلق بالسرية. على سبيل المثال، إذا تمكن شخص ما من العودة بالزمن إلى الوراء ومنع وقوع حدث مأساوي، فهل سيكون من الأخلاقي الكشف عن هذه المعلومات لأجيال المستقبل؟

6. العدالة:

قد يؤدي السفر عبر الزمن أيضًا إلى مشاكل تتعلق بالعدالة. على سبيل المثال، إذا تمكن شخص ما من العودة بالزمن إلى الوراء ومنع وقوع حدث مأساوي، فهل سيكون من الأخلاقي معاقبة الشخص الذي ارتكب الجريمة؟

7. التوافق:

قد يؤدي السفر عبر الزمن أيضًا إلى مشاكل تتعلق بالتوافق. على سبيل المثال، إذا تمكن شخص ما من العودة بالزمن إلى الوراء ومنع وقوع حدث مأساوي، فهل سيكون من الأخلاقي تغيير مسار التاريخ؟

في الختام، فإن عالم السفر عبر الزمن الذي أصبح حقيقة من شأنه أن يجلب العديد من الاحتمالات المثيرة، ولكنه قد يؤدي أيضًا إلى مشاكل أخلاقية كبيرة. يجب على المسافرين عبر الزمن أن يفكروا بعناية في عواقب أفعالهم وأن يكونوا على دراية بالمسؤولية التي تأتي مع القدرة على تغيير الماضي.

في رأيك ما هي صفات المدرب الرياضي الجيد

(In your opinion, what are the characteristics of a good sports coach?)

فيما يلي بعض صفات المدرب الرياضي الجيد:

1. المعرفة والخبرة: يجب أن يكون المدرب الرياضي على دراية جيدة بالتمارين الرياضية والتغذية وعلم النفس الرياضي والإصابات. يجب أن يكون لديهم خبرة في مجال تخصصهم وأن يكونوا على اطلاع بأحدث الاتجاهات والتقنيات.

2. التواصل: يجب أن يكون المدرب الرياضي الجيد قادرًا على التواصل بشكل فعال مع العملاء، سواء كان ذلك من خلال تقديم التوجيه أو تقديم الملاحظات أو تقديم الدعم العاطفي.

3. التعاطف: يجب أن يكون المدرب الرياضي الجيد قادرًا على التعاطف مع عملائه وفهم نقاط القوة والضعف لديهم. يجب أن يكونوا قادرين على تصميم التدريبات والبرامج لتلبية الاحتياجات والأهداف الفريدة لكل عميل.

4. التحفيز: يجب أن يكون المدرب الرياضي الجيد متحمسًا للتمرين واللياقة البدنية. يجب أن يكونوا قادرين على إلهام عملائهم وتحفيزهم لتحقيق أهدافهم.

5. الصبر: يجب أن يكون المدرب الرياضي الجيد صبورًا ومتفهمًا. يجب أن يكونوا قادرين على العمل مع العملاء من جميع مستويات اللياقة البدنية، من المبتدئين إلى الرياضيين المتقدمين.

6. المرونة: يجب أن يكون المدرب الرياضي الجيد قادرًا على التكيف مع الظروف المتغيرة، مثل الإصابات أو التغييرات في الجداول الزمنية. يجب أن يكونوا قادرين على تعديل التدريبات والبرامج لتلبية الاحتياجات والأهداف الفريدة لكل عميل.

7. التعليم المستمر: يجب أن يكون المدرب الرياضي الجيد على استعداد لتعلم أشياء جديدة والبقاء على اطلاع بأحدث الأبحاث والاتجاهات في مجال اللياقة البدنية.

8. النزاهة: يجب أن يكون المدرب الرياضي الجيد صادقًا وشفافًا مع عملائه. يجب أن يكونوا قادرين على تقديم الملاحظات الصادقة وأن يكونوا مسؤولين عن أفعالهم.

9. التعاطف: يجب أن يكون المدرب الرياضي الجيد قادرًا على التعاطف مع عملائه وفهم نقاط القوة والضعف لديهم. يجب أن يكونوا قادرين على تصميم التدريبات والبرامج لتلبية الاحتياجات والأهداف الفريدة لكل عميل.

10. الاحترافية: يجب أن يكون المدرب الرياضي الجيد محترفًا في جميع الأوقات. يجب أن يكونوا قادرين على التعامل مع العملاء بطريقة احترافية، وأن يكونوا في الوقت المحدد، وأن يلتزموا بالمواعيد النهائية.

Коя е най-широко консумираната храна в света днес? Можеш ли да ми дадеш причина защо тя е най-консумираната храна?

(What is the most widely consumed food in the world today? Can you give me a reason why it is the most consumed food?)

Най-широко консумираната храна в света днес е оризът. Той е най-консумираната храна поради няколко причини:

1. Универсалност: Оризът е универсална храна, която може да се консумира като основно ястие, гарнитура или дори като лека закуска. Може да се приготви по различни начини, като варене, пържене, задушаване или печене, и може да се комбинира с различни съставки като зеленчуци, месо, морски дарове и подправки.

2. Достъпност: Оризът е една от най-достъпните зърнени храни в света. Може да се отглежда в различни климатични условия и може да се произвежда в големи количества, което го прави достъпен за хора с различни доходи.

3. Културно значение: Оризът има значително културно значение в много страни и се използва в различни ритуали, фестивали и церемонии. Например в Азия оризът се смята за символ на просперитет, изобилие и гостоприемство.

4. Енергийна плътност: Оризът е с високо съдържание на въглехидрати и осигурява значителна част от дневния прием на калории за много хора. Освен това е добър източник на основни хранителни вещества като фибри, витамини и минерали.

5. Историческо значение: Оризът има дълга история на отглеждане и консумация, датираща от хиляди години. Той е бил основна храна в много култури и е изиграл значителна роля в оформянето на човешката история и цивилизация.

Какъв извод можеш да извадиш за чувството, което изпитват героите от следната кратка история:

Мария чакаше с трепет да дойде 27-ми. Още само три дни и корабът щеше да акостира на пристанището. След 6 дълги месеца тя най-после щеше да види Иван. Когато денят най-после дойде, тя отиде на пристанището заедно с десетки други жени. Моряците един по един започнаха да слизат. Най-накрая тя съзря погледа на Иван, и двамата се прегърнаха силно.

(What can you infer about the feelings of the characters in the following short story:

Maria was anxiously waiting for the 27th to come. Just three more days and the ship would dock at the port. After 6 long months she would finally see Ivan. When the day finally came, she went to the port along with dozens of other women. One by one the sailors began to descend. Finally, she met Ivan's gaze, and they hugged each other tightly.)

Мария изпитва силно чувство на очакване и нетърпение да види Иван след шестмесечна раздяла. Тя е развълнувана и щастлива да го види отново и прегръдката им показва, че и двамата са били много развълнувани да се съберат отново.

Milyen módon járultak hozzá a magyarok a fizika területéhez?

(In what way did the Hungarians contribute to the field of physics?)

A magyarok jelentős mértékben hozzájárultak a fizika területéhez, különösen a 20. században. Íme néhány példa:

1. Albert Einstein: Einstein 1905-ben publikált relativitáselmélete forradalmasította a fizika területét, és a 20. század egyik legfontosabb tudományos felfedezésének tartják. Einstein magyarországi zsidó családban született, de Németországban nőtt fel.

2. Neumann János: Neumann János magyar matematikus és fizikus volt, akit a számítógép-tudomány atyjaként tartanak számon. Jelentős mértékben hozzájárult a kvantummechanika, a játékelmélet és a matematikai logika területéhez.

3. Teller Ede: Teller Ede magyar-amerikai atomfizikus volt, aki döntő szerepet játszott az atombomba kifejlesztésében. Később a hidrogénbomba fejlesztésében is részt vett.

4. Bay Zoltán: Bay Zoltán magyar fizikus volt, aki a radarcsillagászat területén végzett úttörő munkájáról ismert. Ő volt az első, aki radar segítségével észlelte a Holdat 1946-ban.

5. Kármán Tódor: Kármán Tódor magyar-amerikai mérnök és fizikus volt, aki a szuperszonikus repülés és a rakétatechnológia területén végzett úttörő munkájáról ismert.

6. Hevesy György: Hevesy György magyar vegyész és fizikus volt, aki a radioizotópok területén végzett munkájáért kapott Nobel-díjat.

7. Wigner Jenő: Wigner Jenő magyar-amerikai fizikus volt, aki a kvantummechanika és a szimmetria területén végzett munkájáért kapott Nobel-díjat.

Ez csak néhány példa a sok magyar közül, akik jelentős mértékben hozzájárultak a fizika területéhez.

Hol található a veszprémi vár, és mi a történelmi jelentősége?

(Where is the Veszprém castle located and what is its historical significance?)

A veszprémi vár a város szívében található, a Várhegyen, amely a Séd-patak völgyében emelkedik. A vár történelmi jelentősége a magyar történelemben és kultúrában rejlik.

A várat a 11. században alapították, és a magyar királyok rezidenciájaként szolgált. A középkorban a vár a magyar királyok és királynék fontos rezidenciája volt, és a magyar történelem számos jelentős eseményének volt tanúja.

A vár a török hódítás idején is fontos erődítmény volt, és a 16. században a törökök többször is ostromolták. A vár végül 1566-ban esett el, és a törökök elfoglalták.

A vár a 18. században a Habsburg Birodalom része lett, és a magyar nemesség és arisztokrácia fontos találkozóhelyévé vált. A vár a 19. században a magyar kultúra és művészet központjává vált, és számos híres művész és író élt és dolgozott itt.

A vár ma is népszerű turisztikai látványosság, és számos múzeumnak és kulturális intézménynek ad otthont, köztük a Laczkó Dezső Múzeumnak, a Művészetek Házának és a Bakonyi Természettudományi Múzeumnak. A vár számos történelmi épületnek és építménynek is otthont ad, köztük a Szent Mihály-székesegyház, a Szent György-kápolna és a Gizella-kápolna.

茶道用語で、茶室ではなく、屋外でお茶を点てることを何というでしょう?

(In tea ceremony terminology, what is the term for making tea outdoors instead of in the tea room?)

茶道用語で、茶室ではなく屋外でお茶を点てることを「野点(のだて)」といいます。

生涯に90回もの引っ越しをしたことでも有名な、『富嶽三十六景』で知られる浮世絵師といえば誰でしょう?

(Who is the ukiyo-e artist known for "Thirty-Six Views of Mt. Fuji," who is also famous for having moved 90 times during his lifetime?)

生涯に90回もの引っ越しをしたことでも有名な、『富嶽三十六景』で知られる浮世絵師は葛飾北斎です。

Завершите параграф: Родион Раскольников - главный персонаж романа "Преступление и наказание" Федора Достоевского. Его характер очень сложен и эволюционирует на протяжении всей истории. Он обладает выдающимися умственными способностями и амбициями, но

(Complete the paragraph: Rodion Raskolnikov is the main character in the novel “Crime and Punishment” by Fyodor Dostoevsky. His character is very complex and evolves throughout the story. He has outstanding mental abilities and ambitions, but)

его внутренняя борьба и моральные сомнения мешают ему действовать в соответствии со своими желаниями. Раскольников — бедный студент, который терпит финансовые трудности и чувствует себя бессильным изменить свою жизнь. Он приходит к теории, согласно которой некоторые люди имеют право совершать преступления ради достижения великих целей, и решает проверить свою теорию, убив старуху-процентщицу. Однако его действия приводят к глубокому чувству вины и психологическому срыву, который заставляет его искать искупления и искупления. На протяжении всего романа Раскольников проходит путь самопознания и личностного роста, осознавая важность сочувствия, сострадания и ответственности перед другими. В конечном итоге он принимает на себя ответственность за свои действия и находит искупление через раскаяние и прощение.

В чём заключается основная причина холодной войны?

(What is the main cause of the Cold War?)

Основная причина холодной войны заключалась в конфликте между двумя противостоящими идеологиями: капитализмом (представленным Соединенными Штатами) и коммунизмом (представленным Советским Союзом) после Второй мировой войны. Это противостояние привело к созданию двух противостоящих блоков: НАТО (Североатлантический договор) и Варшавского договора, а также к гонке вооружений, созданию ядерного оружия и распространению идеологической пропаганды. Холодная война длилась с конца Второй мировой войны до распада Советского Союза в 1991 году.

Kje so najboljši kraji za dopust v Turčiji?

(Where are the best places to vacation in Turkey?)

Turčija je čudovita država z raznoliko pokrajino in bogato kulturno dediščino. Tukaj je nekaj najboljših krajev za dopust v Turčiji:

1. Istanbul: Istanbul, nekoč Bizanc in Konstantinopel, je mesto, ki združuje vzhod in zahod. Znano je po svojih zgodovinskih znamenitostih, kot so Hagija Sofija, Modra mošeja in palača Topkapi.

2. Antalya: Antalya, znana kot "biser Sredozemlja", je obmorsko mesto, ki ponuja čudovite plaže, zgodovinske ruševine in živahno nočno življenje.

3. Pamukkale: Pamukkale, znan tudi kot "bombažni grad", je naravni čudež, sestavljen iz belih apnenčastih teras, ki se lesketajo v soncu.

4. Kapadokija: Kapadokija, ki se nahaja v osrednji Turčiji, je edinstvena in nadrealistična pokrajina, ki jo je oblikovala erozija.

5. Cappadocia: Cappadocia, ki se nahaja v osrednji Turčiji, je še ena nadrealistična pokrajina, ki jo je oblikovala erozija. Znano je po svojih podzemnih mestih, jamskih cerkvah in čudovitih sončnih zahodih.

6. Efez: Efez, ki se nahaja v zahodni Turčiji, je starodavno mesto, ki je bilo nekoč eno največjih mest v rimskem imperiju.

7. Marmaris: Marmaris, ki se nahaja na zahodni obali Turčije, je priljubljeno obmorsko letovišče, ki ponuja čudovite plaže, kristalno čisto vodo in živahno nočno življenje.

8. Bodrum: Bodrum, ki se nahaja na južni obali Turčije, je priljubljeno obmorsko letovišče, ki ponuja čudovite plaže, zgodovinske ruševine in živahno nočno življenje.

9. Izmir: Izmir, ki se nahaja na zahodni obali Turčije, je drugo največje mesto v državi. Znano je po svoji bogati kulturni dediščini, čudoviti arhitekturi in okusni kuhinji.

10. Ankara: Ankara, ki se nahaja v osrednji Turčiji, je glavno mesto države. Znano je po svoji bogati zgodovini, čudoviti arhitekturi in okusni kuhinji.

Kdaj je bil ustvarjen google? Zakaj je prevladoval nad drugimi iskalniki?

(When was google created? Why did it dominate other search engines?)

Google je bil ustanovljen septembra 1998 v garaži Larryja Pagea in Sergeya Brina v Mountain Viewu v Kaliforniji.

Google je prevladoval nad drugimi iskalniki zaradi več dejavnikov:

1. Učinkovitost: Google je bil veliko hitrejši in učinkovitejši od drugih iskalnikov, saj je zagotavljal rezultate iskanja v delčku sekunde.

2. Uporabniku prijazen vmesnik: Googlov vmesnik je bil uporabniku prijazen in enostaven za krmarjenje, kar je uporabnikom olajšalo iskanje, kar iščejo.

3. Personalizacija: Google je uporabnikom omogočil prilagajanje rezultatov iskanja z uporabo njihovih iskalnih navad, zgodovine brskanja in drugih podatkov.

4. Kakovost rezultatov iskanja: Google je zagotavljal visokokakovostne rezultate iskanja, ki so bili točni, ustrezni in brez neželene vsebine.

5. Oglaševalski model: Google je uvedel uspešen oglaševalski model, ki je podjetjem omogočil, da ciljajo na uporabnike na podlagi njihovih iskalnih poizvedb, s čimer je povečal učinkovitost njihovih oglaševalskih kampanj.

6. Strateška partnerstva: Google je sklenil strateška partnerstva z drugimi podjetji, kot so Apple, Android in YouTube, kar mu je pomagalo razširiti doseg in povečati bazo uporabnikov.

7. Inovacije: Google je bil vedno v ospredju inovacij, saj je predstavil nove funkcije in tehnologije, kot so Google Maps, Google Now in Google Assistant, ki so izboljšale uporabniško izkušnjo in povečale njegovo priljubljenost.

Od kada datiraju prva naselja na prostoru danasnjeg Beograda, i kada je grad osnovan?

(From when do the first settlements on the territory of today's Belgrade date, and when was the city founded?)

Beograd su naseljavali ljudi još u praistoriji, a prva naselja datiraju iz neolitskih vremena, pre oko 7.000 godina. Međutim, grad Beograd nije osnovan upravo u to vreme, već u srednjem veku, kada su Srbi u 9. veku osnovali srednjovekovni grad Beograd, na mestu današnjeg Kalemegdana. Grad je kasnije pretrpeo razna oсвајања, razaranja i obnavljanja, da bi se na kraju razvio u značajnu evropsku metropolu.

Како направити листиће?

(What is the recipe for pastry sheets?)

Ево рецепта за прављење листића:

Састојци:

- 1 шоља вишенаменског брашна

- 1 кашичица прашка за пециво

- 1/2 кашичице соде бикарбоне

- 1/2 кашичице соли

- 1/2 шоље несланог путера, на собној температури

- 1 шоља гранулираног шећера

- 2 велика јаја

- 1 кашичица екстракта ваниле

- 1 шоља пуномасног млека

Упутства:

1. У великој посуди за мешање умутите брашно, прашак за пециво, соду бикарбону и со.

2. У посебној посуди за мешање умутите путер, шећер, јаја и екстракт ваниле док не постане глатко.

3. Постепено додајте суве састојке у мокру мешавину, мешајући док се не сједине.

4. Полако сипајте млеко, непрестано мешајући, док тесто не постане глатко и не остане суво.

5. Загрејте рерну на 375°Ф (190°Ц).

6. Разваљајте тесто на побрашњеној површини на око 1/8 инча дебљине.

7. Користите секаче за колаче или чашу да исеците листиће.

8. Ставите листиће на плех обложен папиром за печење, остављајући мало простора између сваког листића.

9. Пеците листиће 12-15 минута, или док не порумене.

10. Извадите из рерне и оставите листиће да се охладе на плеху неколико минута пре него што их пребаците на решетку да се потпуно охладе.

11. Чувајте листиће у херметички затвореној посуди на собној температури до 3 дана.

Напомена: Листови се такође могу замрзнути до 3 месеца. Да бисте их замрзнули, оставите да се потпуно охладе, а затим их умотајте у пластичну фолију и ставите у врећу за замрзавање.

ปรัชญาทางเศรษฐกิจที่พระบาทสมเด็จพระมหาภูมิพลอดุลยเดชมหาราช บรมนาถบพิตรมีพระราชดำรัสแก่ชาวไทย มีชื่อว่าอะไร

(What is the name of the economic philosophy from His Majesty King Bhumibol Adulyadej The Great's speech to the Thai people?)

ปรัชญาทางเศรษฐกิจที่พระบาทสมเด็จพระปรมินทรมหาภูมิพลอดุลยเดชทรงมีพระราชดำรัสแก่ชาวไทยเรียกว่า "ปรัชญาเศรษฐกิจพอเพียง" ปรัชญานี้เน้นความสำคัญของการพัฒนาแบบองค์รวมและความสมดุล โดยคำนึงถึงสภาพแวดล้อมทางสังคม เศรษฐกิจ และสิ่งแวดล้อม ปรัชญาเศรษฐกิจพอเพียงเน้นความสำคัญของการพึ่งพาตนเอง ความมีเหตุผล และการประหยัด ตลอดจนความสำคัญของการพัฒนาจิตใจและจิตวิญญาณ ปรัชญานี้เน้นย้ำถึงความสำคัญของการดำเนินชีวิตอย่างพอประมาณ มีเหตุผล และมีคุณธรรม ตลอดจนความสำคัญของการพัฒนาความรู้และทักษะอย่างต่อเนื่อง ปรัชญาเศรษฐกิจพอเพียงได้รับการยกย่องอย่างกว้างขวางว่าเป็นแนวทางแก้ไขปัญหาการพัฒนาที่ยั่งยืนและเป็นแนวทางในการดำเนินชีวิตที่นำไปสู่ความสุขและความเป็นอยู่ที่ดี

ดอกมะลิมีกลิ่นอย่างไร

(What does jasmine smell like?)

ดอกมะลิมีกลิ่นหอมหวานและละเอียดอ่อน ซึ่งมักอธิบายว่าเป็นกลิ่นดอกไม้ที่ละเอียดอ่อนและสดชื่น มักเกี่ยวข้องกับ ความบริสุทธิ์ ความไร้เดียงสา และความรัก มักใช้ในน้ำหอม เครื่องสำอาง และผลิตภัณฑ์อื่นๆ เพื่อกลิ่นหอมที่ผ่อนคลายและผ่อนคลาย

İngilizce öğrenenlere tavsiyen nedir?

(What is your advice to English language learners?)

İngilizce öğrenenlere yardımcı olmak için bazı genel öneriler ve ipuçları sunabilirim:

1. Düzenli olarak pratik yapın: İngilizce öğrenmek, düzenli ve tutarlı pratik yapmayı gerektirir. Her gün veya haftada belirli bir zaman ayırın ve bu zamanı dil becerilerinizi geliştirmeye adayın.

2. Dinleyin ve konuşun: İngilizce dinleme ve konuşma becerilerinizi geliştirmek için İngilizce müzik, podcast'ler, TV şovları ve filmler dinleyin. Anadili İngilizce olan kişilerle konuşma pratiği yapın ve kendinizi rahat hissettiğinizden daha yavaş konuşun.

3. Kelime dağarcığınızı genişletin: Kelime bilginizi geliştirmek için kelime oyunları, kelime listeleri ve kelime alıştırmaları gibi çeşitli kaynakları kullanın.

4. Gramer kurallarını öğrenin: İngilizce dilbilgisi kurallarını öğrenmek, İngilizce konuşurken daha doğru ve kendinden emin hissetmenize yardımcı olabilir.

5. Telaffuzunuzu geliştirin: Telaffuzunuzu geliştirmek için İngilizce konuşurken yavaşlayın ve telaffuzunuzu kaydedin. Telaffuzunuzu geliştirmek için çevrimiçi kaynakları, dil öğrenme uygulamalarını ve anadili İngilizce olan kişilerle konuşmayı kullanın.

6. Hata yapmaktan korkmayın: Hata yapmak öğrenme sürecinin doğal bir parçasıdır. Hata yapmaktan korkmayın ve bunun yerine bunları öğrenme ve gelişme fırsatları olarak kullanın.

7. Anlamayı geliştirmek için okuyun: Okumak, İngilizce anlama ve kelime dağarcığınızı geliştirmenin harika bir yoludur. İlginizi çeken kitapları, makaleleri ve blogları okuyun.

8. Kendinizi zorlayın: Kendinizi zorlamak ve dil becerilerinizi geliştirmek için yeni kelimeler, dil bilgisi kuralları ve cümle yapıları öğrenmeye çalışın.

9. Hedefleri belirleyin: Dil öğrenme hedeflerinizi belirleyin ve ilerlemenizi takip edin. Bu, motive olmanıza ve dil öğrenme yolculuğunuzda ilerleme kaydetmenize yardımcı olabilir.

10. Sabırlı olun: İngilizce öğrenmek zaman ve çaba gerektirir. Sabırlı olun ve dil öğrenme yolculuğunuzda kendinize karşı nazik olun.

Tatilde Antalya'ya gitmeyi planlıyorum, neleri gezebilirim?

(I am planning to go to Antalya on holiday, what can I visit?)

Antalya, Türkiye'nin Akdeniz kıyısında yer alan güzel bir şehirdir ve ziyaretçilere çok sayıda turistik mekan ve aktivite sunmaktadır. İşte Antalya'da gezilecek yerler için bazı öneriler:

1. Kaleiçi: Bu tarihi bölge, dar sokakları, renkli evleri ve hareketli kafeleriyle Antalya'nın en popüler turistik mekanlarından biridir.

2. Antalya Arkeoloji Müzesi: Bu müze, Antalya ve çevresinden çok sayıda antik eser ve kalıntıya ev sahipliği yapmaktadır.

3. Antalya Yat Limanı: Bu hareketli liman, yürüyüş yapmak, yemek yemek ve alışveriş yapmak için harika bir mekandır.

4. Düden Şelaleleri: Bu güzel şelaleler, Antalya'nın hemen dışında yer alır ve yürüyüş yapmak, piknik yapmak veya sadece doğal güzelliğin tadını çıkarmak için harika bir yerdir.

5. Olimpos Antik Kenti: Bu antik kent, muhteşem bir plajın yakınında yer alır ve antik kalıntıları ve doğal çevresiyle tanınır.

6. Aspendos Antik Tiyatrosu: Bu muhteşem antik tiyatro, Antalya'nın yaklaşık bir saat dışında yer alır ve tarih ve kültür meraklıları için mutlaka görülmesi gereken bir yerdir.

7. Konyaaltı Plajı: Bu güzel plaj, Antalya'nın hemen dışında yer alır ve yüzmek, güneşlenmek ve dinlenmek için harika bir mekandır.

8. Antalya Teleferik: Bu teleferik, Antalya'nın muhteşem manzarasını sunar ve şehrin ve çevredeki dağların muhteşem manzarasını görmek için harika bir yoldur.

9. Antalya Hayvanat Bahçesi: Bu hayvanat bahçesi, çok sayıda egzotik hayvan ve bitkiye ev sahipliği yapar ve ailenizle vakit geçirmek için harika bir yerdir.

10. Antalya Müzesi: Bu müze, Antalya ve çevresinden çok sayıda antik eser ve kalıntıya ev sahipliği yapmaktadır.

Bunlar Antalya'da gezilecek birçok turistik mekandan sadece birkaçı. Ziyaretiniz sırasında şehrin zengin tarihini ve doğal güzelliğini keşfederek harika vakit geçireceğinizden emin olabilirsiniz.

Citations

[1] https://arxiv.org/pdf/2310.16944.pdf

[2] https://arxiv.org/abs/2305.18290

[3] https://huggingface.co/datasets/HuggingFaceH4/ultrachat_200k

[4] https://huggingface.co/datasets/HuggingFaceH4/ultrafeedback_binarized

[5] https://huggingface.co/elyza/ELYZA-japanese-Llama-2-7b-instruct

[6] https://huggingface.co/CohereForAI/aya-101

[7] https://huggingface.co/core42/jais-13b-chat

[8] https://huggingface.co/sambanovasystems/BLOOMChat-176B-v1

[9] https://huggingface.co/datasets/CohereForAI/aya_dataset

[10] https://huggingface.co/datasets/sambanovasystems/x-self-instruct-seed-32

[11] https://huggingface.co/datasets/sambanovasystems/xOA22

[12] https://arxiv.org/pdf/2307.09288.pdf

[13] https://github.com/EleutherAI/lm-evaluation-harness

[14] https://arxiv.org/pdf/2304.04675.pdf

[15] https://github.com/facebookresearch/flores/blob/main/flores200/README.md

[16] https://arxiv.org/abs/2308.16884

[17] https://github.com/facebookresearch/belebele

[18] https://arxiv.org/pdf/2401.13303.pdf

[19] https://github.com/lm-sys/FastChat/tree/main/fastchat/llm_judge

[20] https://arxiv.org/abs/2306.05685

[21] https://huggingface.co/datasets/wikimedia/wikipedia

[22] https://huggingface.co/datasets/mc4

[23] https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard

[24] https://sambanova.ai/hubfs/23945802/SambaNova_Accelerated-Computing-with-a-Reconfigurable-Dataflow-Architecture_Whitepaper_English-1.pdf

[25] https://arxiv.org/abs/2311.05741

[26] https://arxiv.org/pdf/2309.09400.pdf

[27] https://arxiv.org/pdf/2312.02598.pdf

[28] https://juditacs.github.io/2019/02/19/bert-tokenization-stats.html

[29] https://arxiv.org/pdf/2311.00176.pdf

[30] https://aclanthology.org/2021.emnlp-main.833.pdf

[31] https://pytorch.org/docs/stable/nn.init.html#torch.nn.init.xavier_uniform_

[32] https://arxiv.org/pdf/2305.16264.pdf

[33] https://huggingface.co/elyza/ELYZA-japanese-Llama-2-7b

[34] https://huggingface.co/scb10x/typhoon-7b

[35] https://huggingface.co/NYTK/PULI-GPTrio

[36] https://huggingface.co/core42/jais-13b

[37] https://huggingface.co/IlyaGusev/saiga_mistral_7b_merged

[38] https://huggingface.co/boun-tabi-LMG/TURNA

[39] https://huggingface.co/ai-forever/mGPT-1.3B-bulgarian

[40] https://huggingface.co/macedonizer/sr-gpt2

[41] https://huggingface.co/macedonizer/sl-gpt2

[42] https://huggingface.co/docs/transformers/model_doc/bloom

[43] https://arxiv.org/abs/2112.10668

[44] https://huggingface.co/facebook/xglm-7.5B

[45] https://huggingface.co/bigscience/bloom-7b1

[46] https://huggingface.co/ai-forever/mGPT-13B

[47] https://arxiv.org/abs/1809.05053

[48] https://aclanthology.org/2020.emnlp-main.185.pdf

[49] https://github.com/yandex-research/crosslingual_winograd

[50] https://huggingface.co/datasets/HuggingFaceH4/cai-conversation-harmless

[51] https://arxiv.org/abs/1908.11828

[52] https://nlp.stanford.edu/~johnhew/vocab-expansion.html