Hume AI delivers instant speech processing with SambaCloud

SambaNova and Hume AI Unleash Lightning-Fast, Multilingual Speech-Language Model to Redefine Conversational AI for Global Enterprises

Alan Cowen, CEO of Hume AI, shares his vision for end-to-end speech LLMs and the importance of latency in delivering realistic outcomes. He expands on the challenges the industry faces today, as well as potential applications of speech AI and the trust concerns it may raise in the future.

“SambaCloud enables LLMs to be run more efficiently because the speech can be decoded faster as the model gains more predictive capabilities, resulting in larger batches at less cost.”

— Alan Cowen, CEO Hume AI

Synthesizing the human voice with AI

CASE STUDY

Realistic voice AI in real time

With text-to-speech and speech-to-speech APIs that respond in roughly 100 ms to 300 ms, Hume AI marries hyperrealistic quality with human-like conversation latency.

VIDEO

Meeting the needs of voice AI

Quality voice AI requires scalability, low cost, and low latency. Enterprises also appreciate the private deployments that SambaNova offers.

DEMO

Hume LLM using DeepSeek R1 on SambaCloud

The EVI 3 API creates the most realistic voice AI interactions, with ultrafast inference that enables real-time optimizations.

EXPLORE

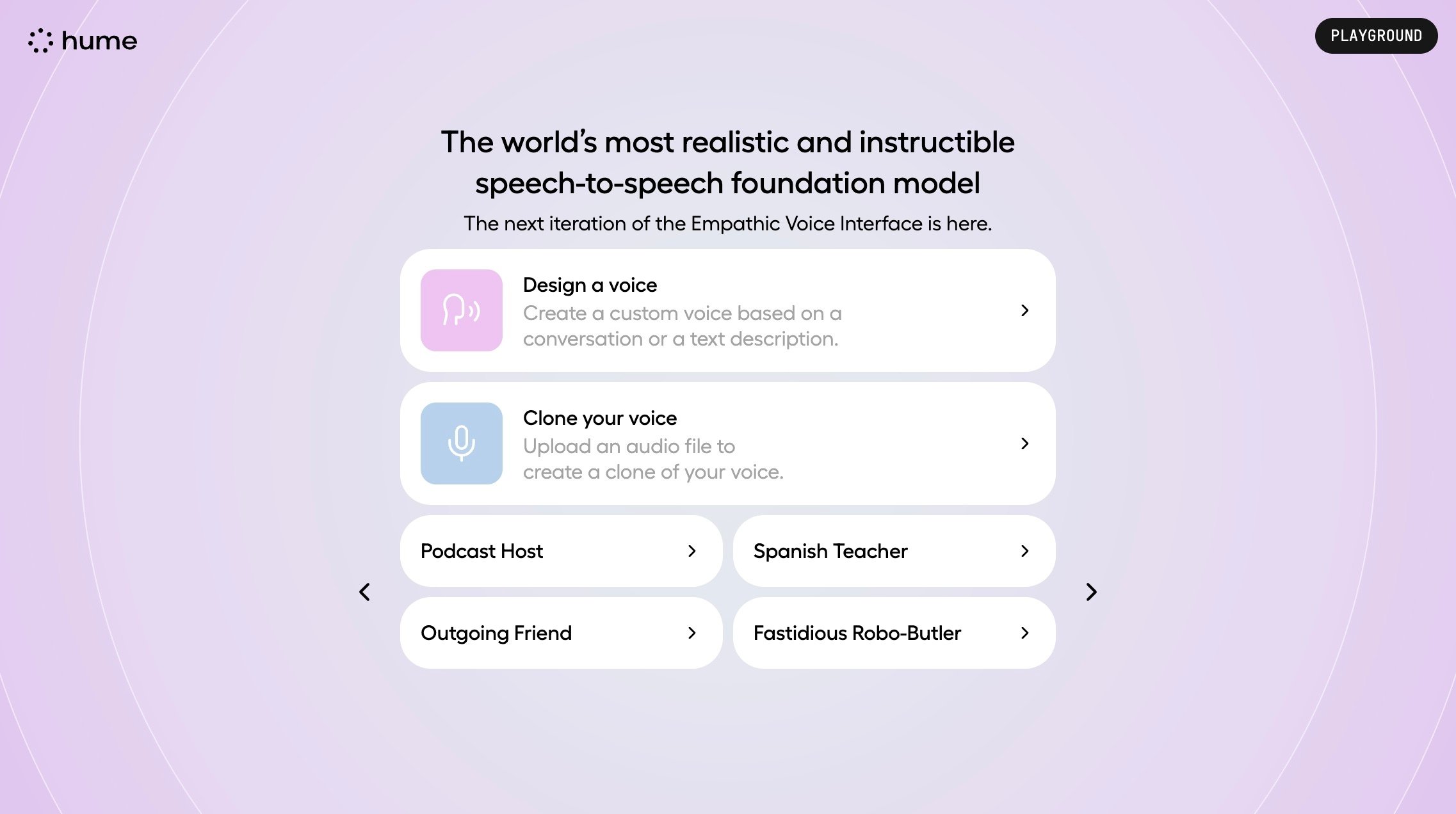

The Hume Playground

Powered by SambaNova, Hume offers the world's most realistic and instructible speech-to-speech foundation model.

Find out how SambaNova can uplevel your models.

Join SambaCloud today!

SambaNova customers push the limits of AI