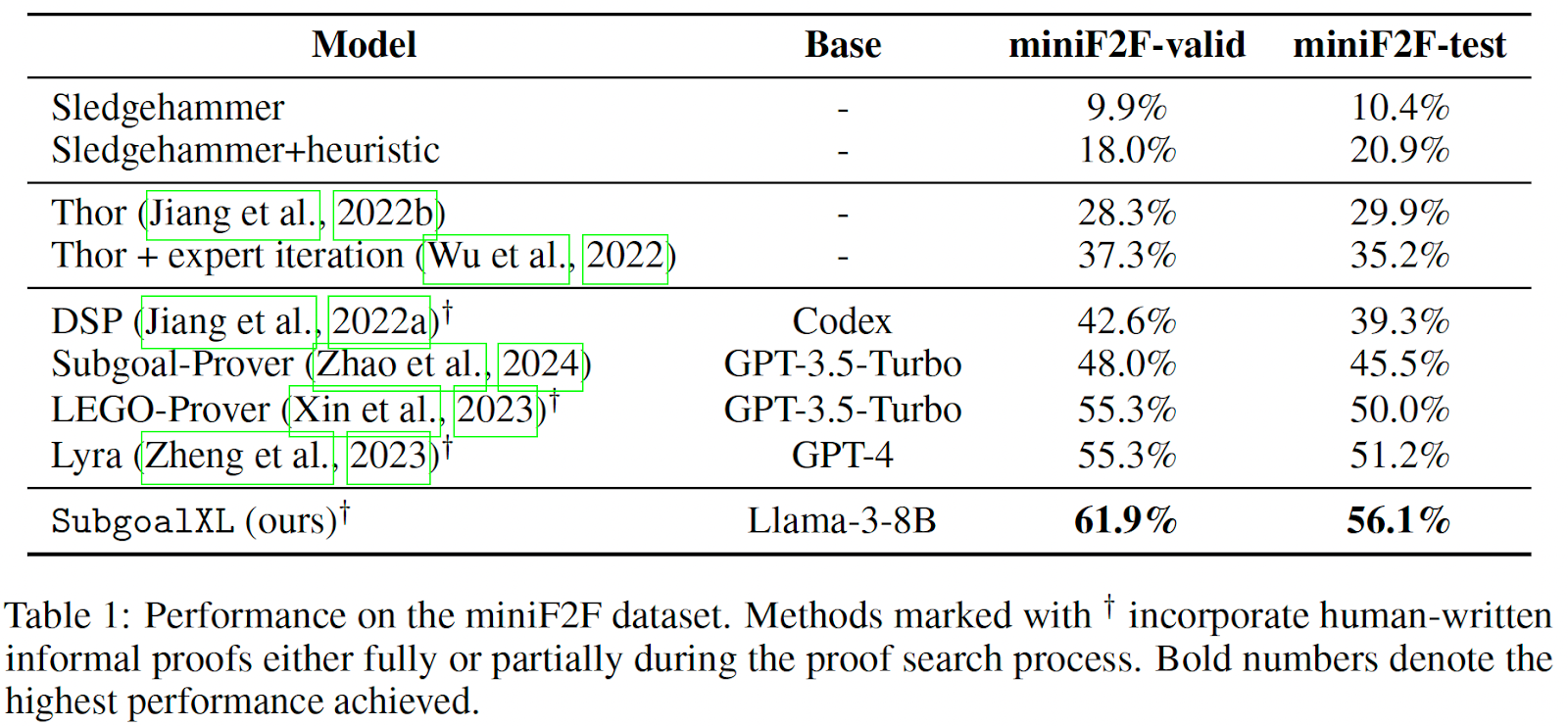

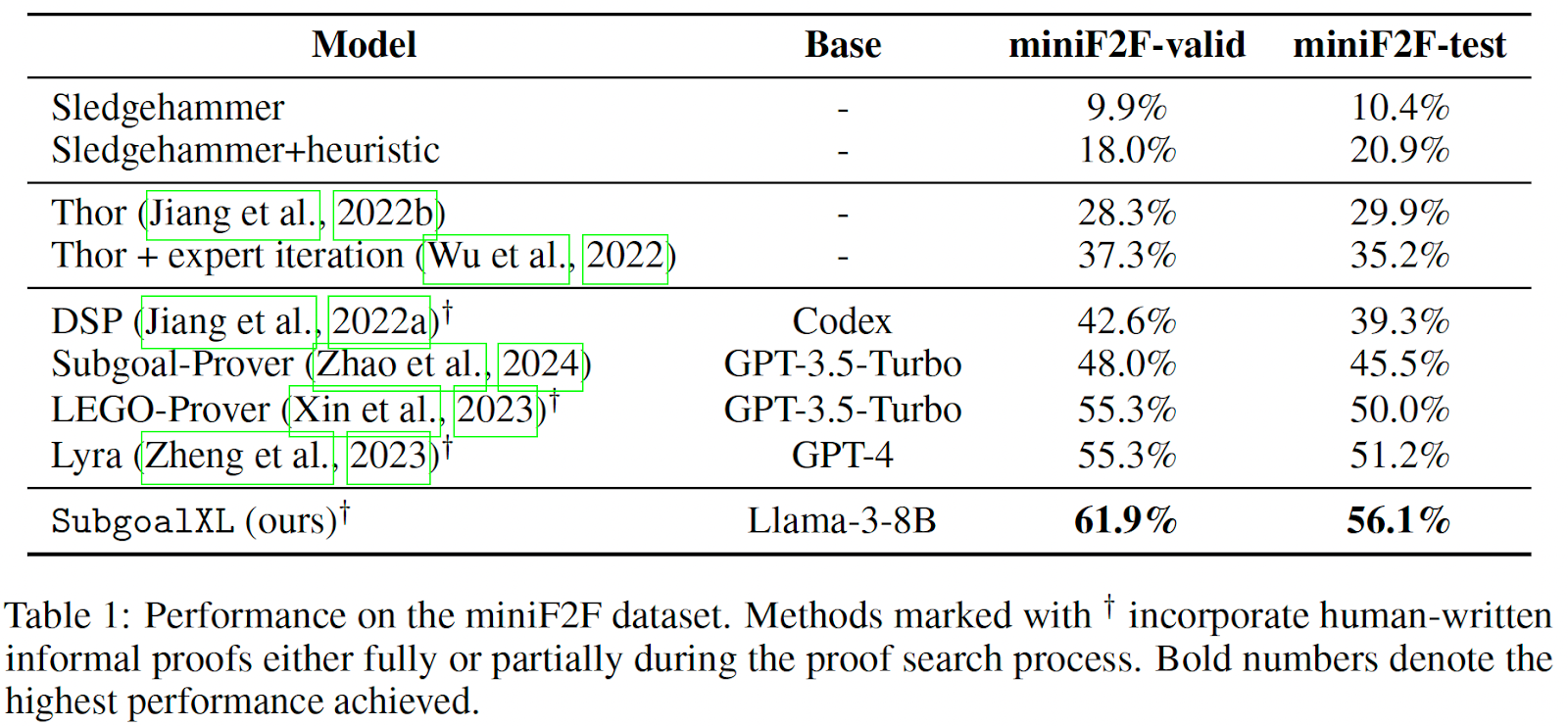

SubgoalXL: Pushing the Boundaries of LLM in Formal Theorem Proving

September 3, 2024

Does reduced precision hurt? A bit about losing bits.

June 20, 2024

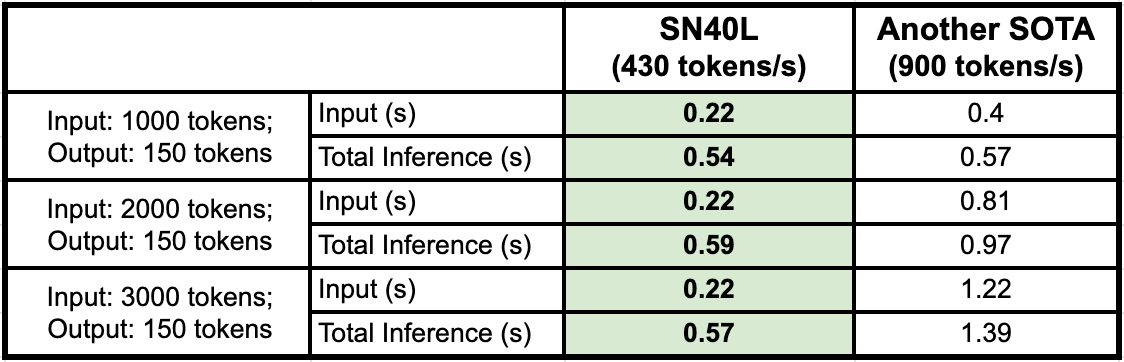

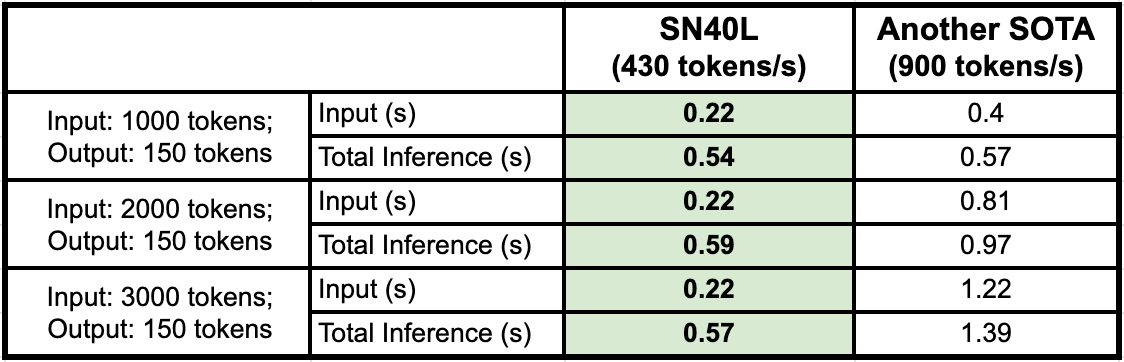

Tokens Per Second is Not All You Need

May 1, 2024

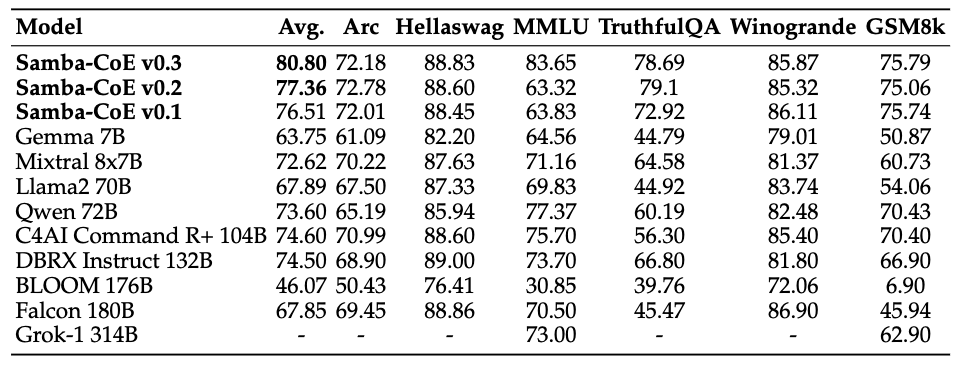

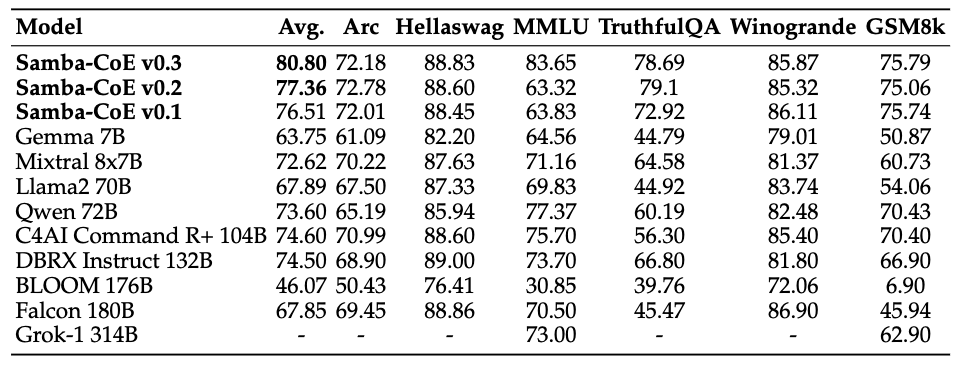

Samba-CoE v0.3: The Power of Routing ML Models at Scale

April 11, 2024

SambaLingo hits 15,000+ downloads, now integrated with Samba-CoE-v0.2

April 8, 2024

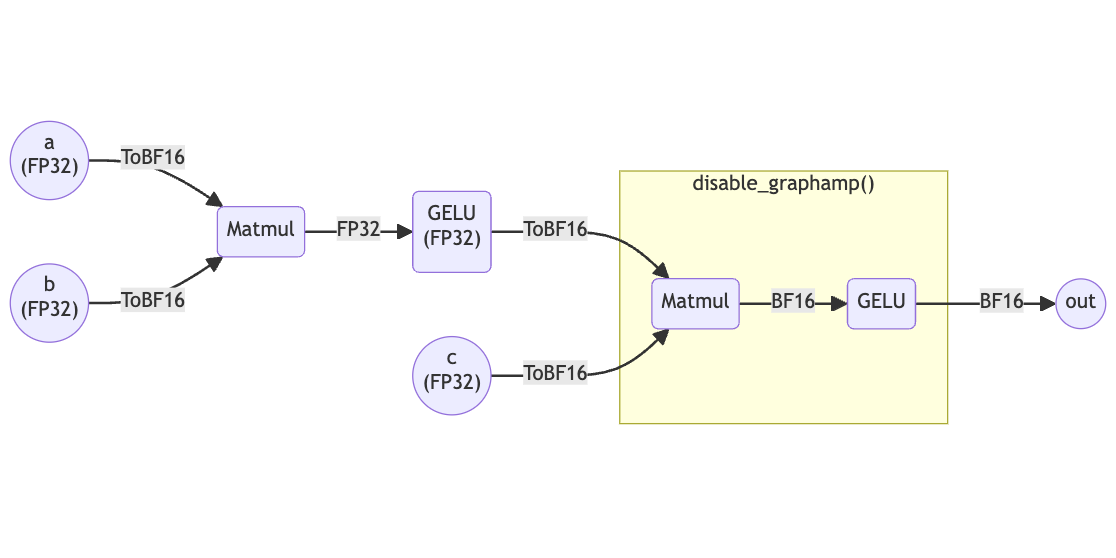

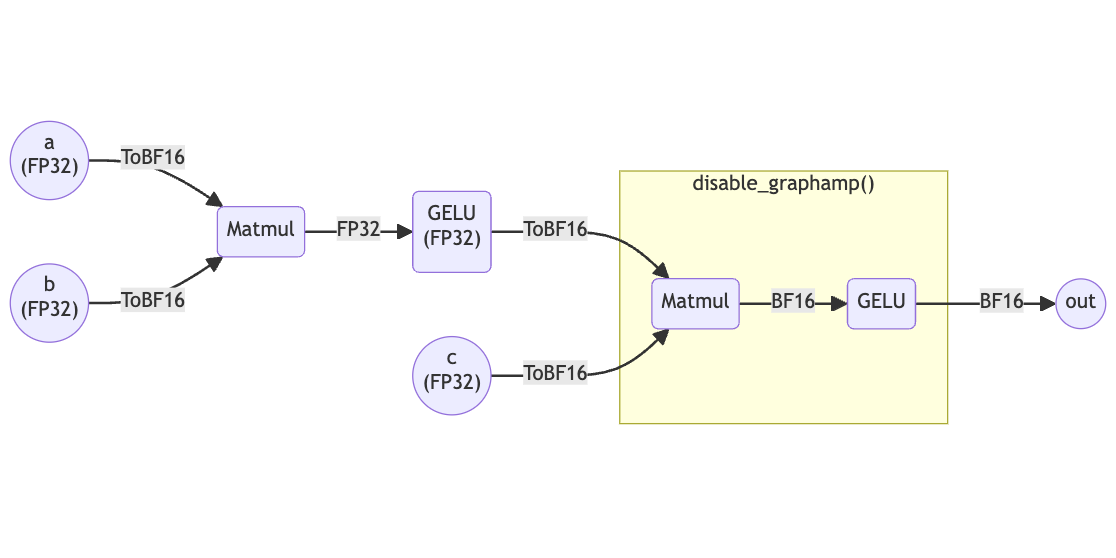

Using Mixed Precision on RDUs

March 21, 2024

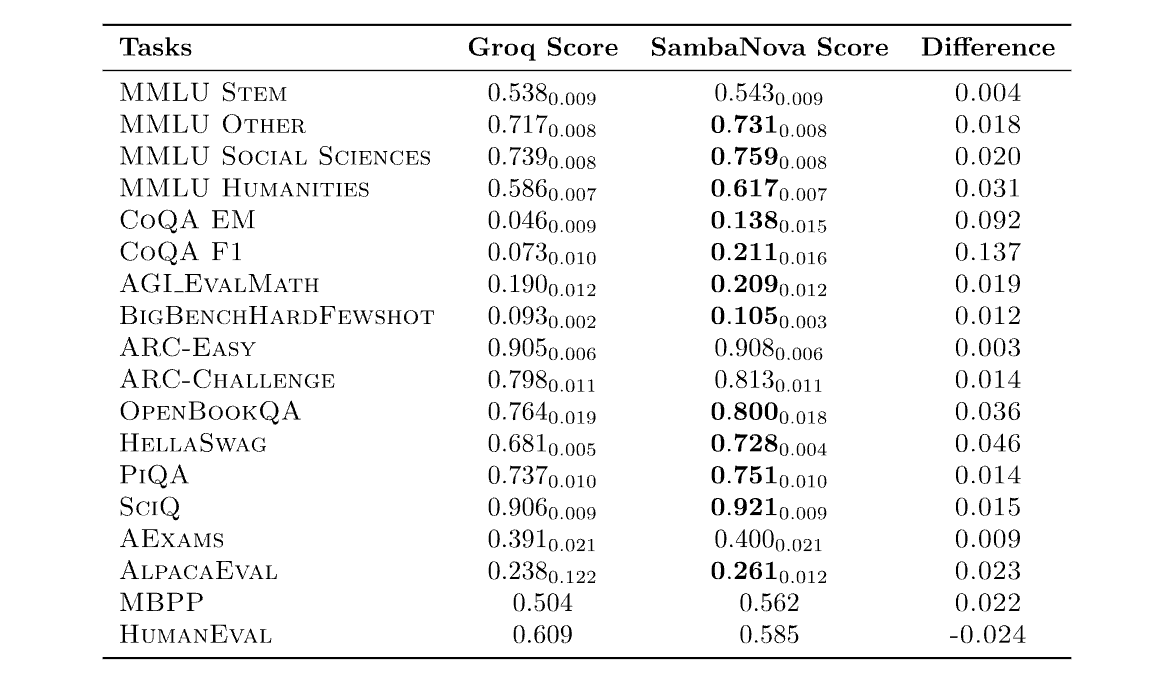

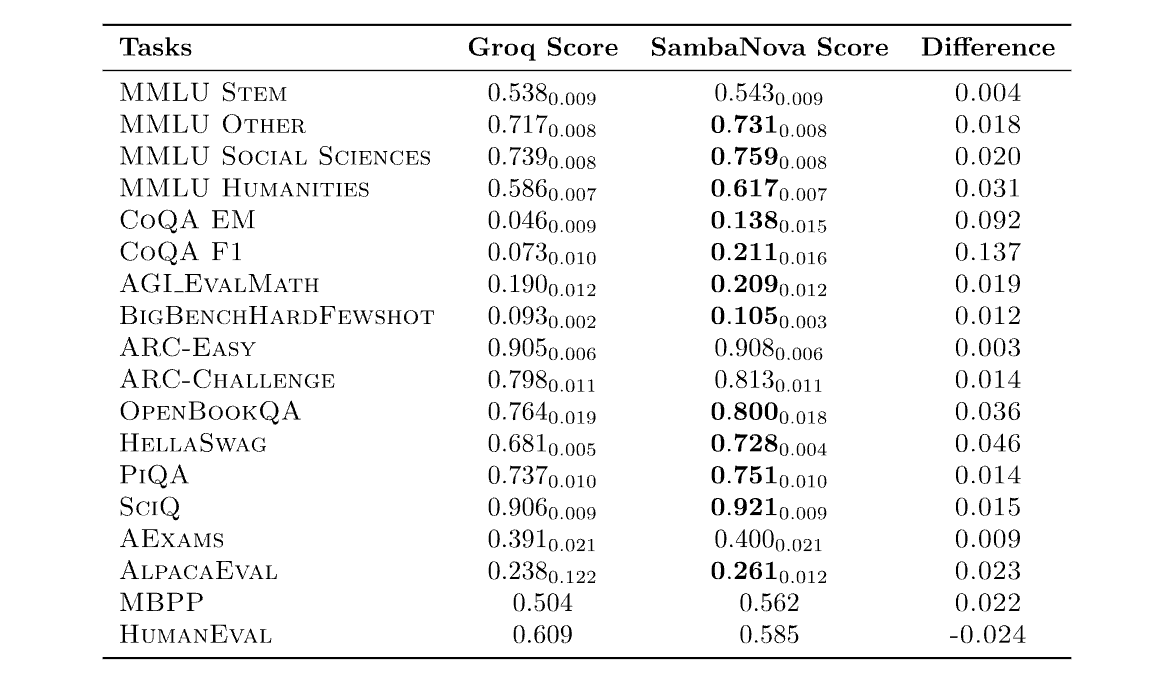

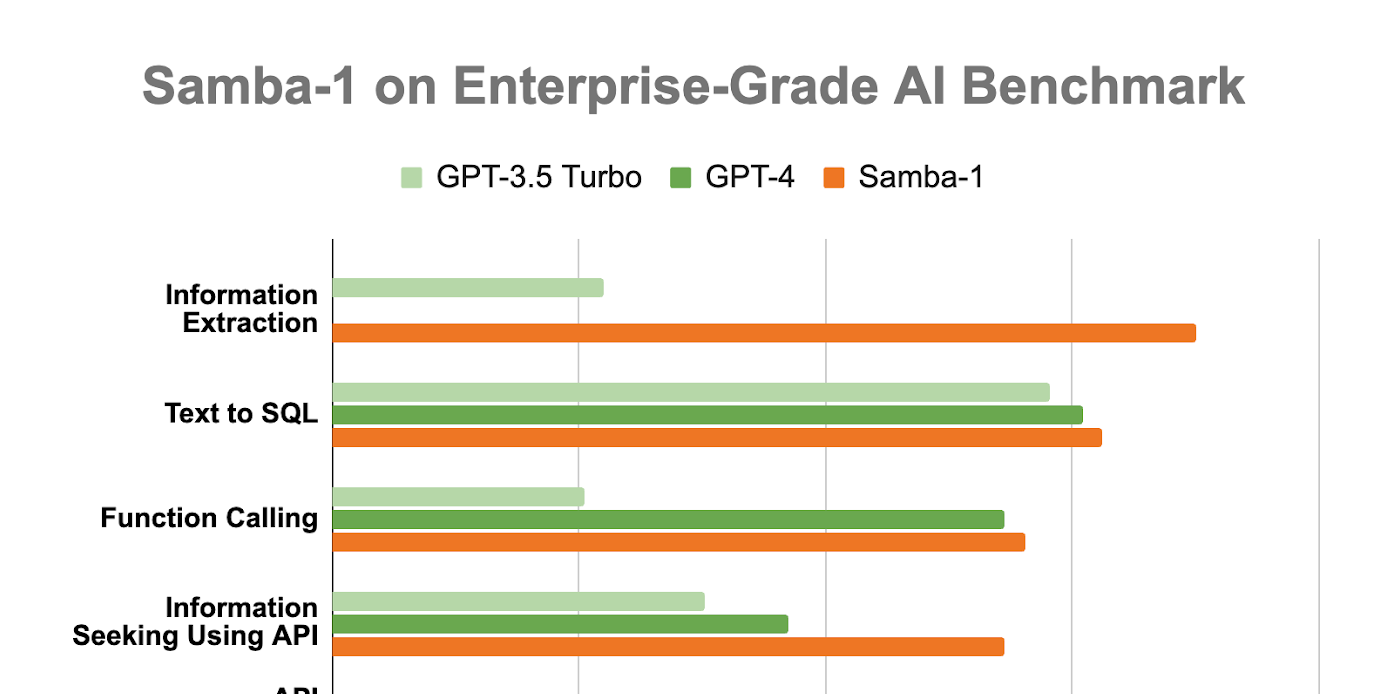

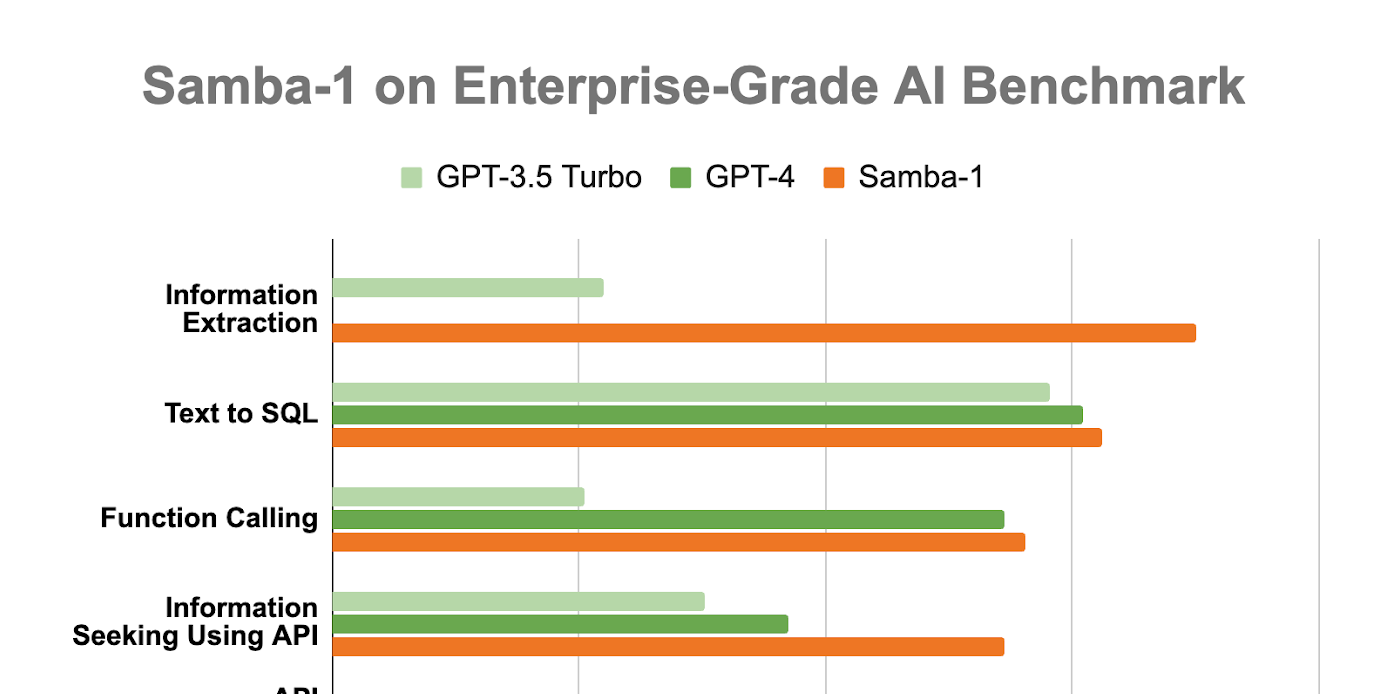

Benchmarking Samba-1

February 28, 2024

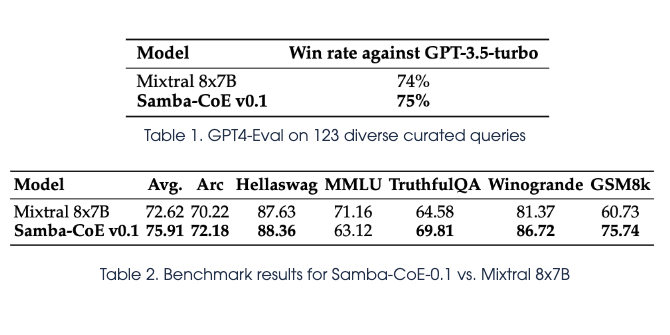

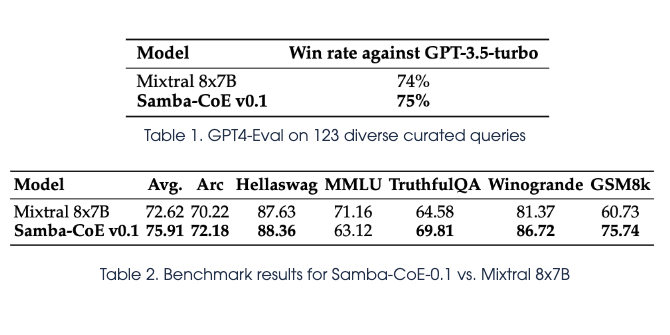

Samba-CoE v0.1 - Unlocking the power of routing to build a Composition of Experts

February 28, 2024

SambaLingo - Open Source Language Experts

February 26, 2024

Introducing the SambaCoder-nsql-Llama-2-70B model

February 13, 2024

BLOOMChat-v2 Long Sequences at 176B

February 6, 2024

Fault management and RDA systems: Part 2

January 25, 2024

Fault management and RDA systems: Part 1

January 25, 2024

ALiBi Deep Dive: Interpolation and Precision

December 22, 2023

Elevating Information Retrieval and Augmenting Large Language Models

November 21, 2023

Enabling Open Source LLMs to Become Effective Tool Manipulators

September 8, 2023

Training long sequence size models with SambaNova

August 7, 2023

BLOOMChat: a New Open Multilingual Chat LLM

May 19, 2023

Domain Adapted Automated Speech Recognition

March 23, 2023

Achieving GPT 175B Level Accuracy with a 10x More Efficient Model

February 13, 2023

Dataflow Architecture Leads to a Performance Breakthrough on GNN Fused Kernels

December 16, 2022

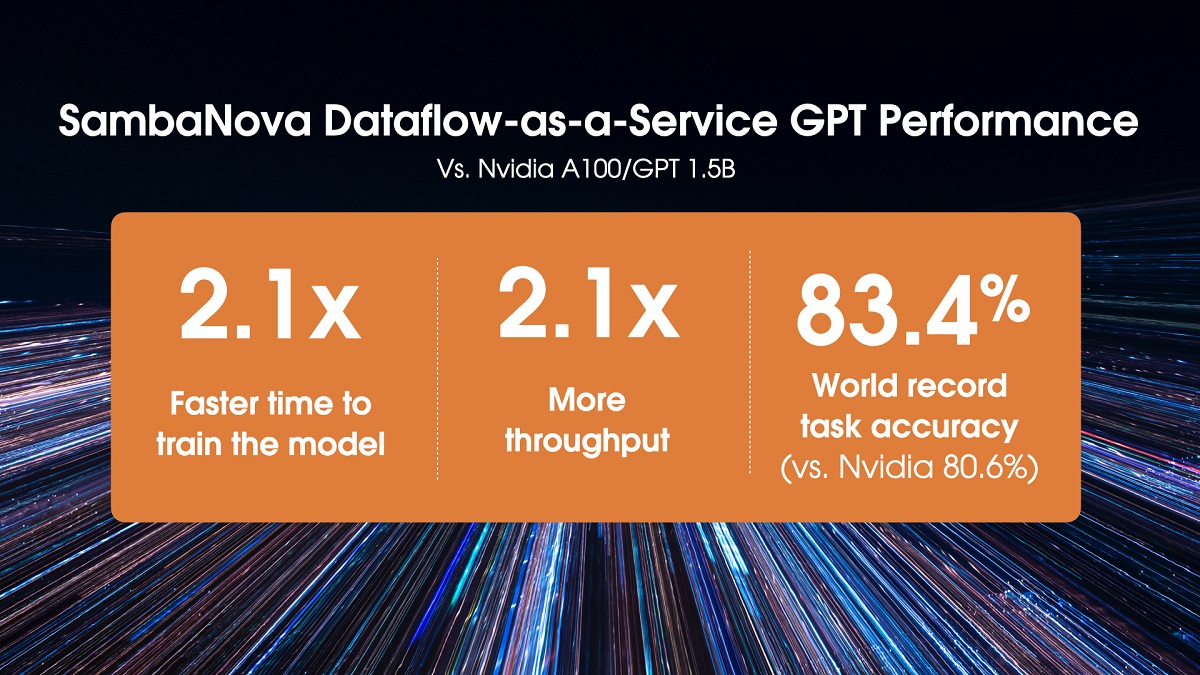

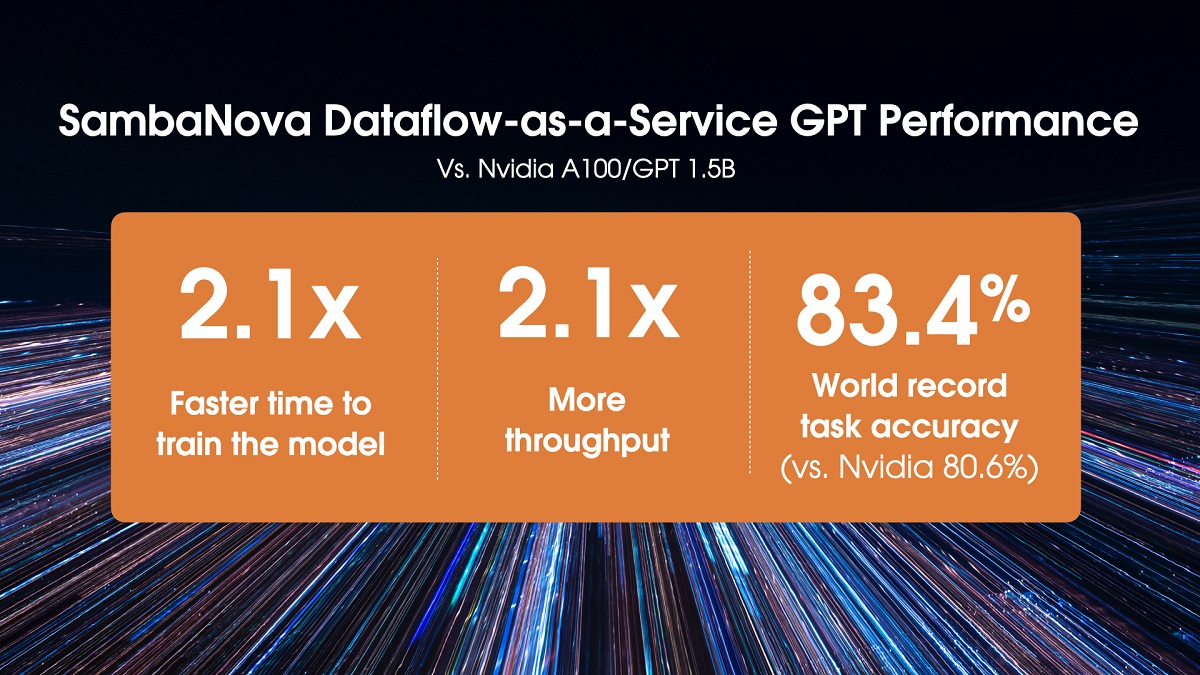

World Record Large Language Models Training Performance with SambaNova Systems Dataflow-as-a-Service™ GPT and Why It Doesn’t Matter

February 1, 2022

Explaining Explainability in AI

January 21, 2022

Accelerating Scientific Applications With SambaNova Reconfigurable Dataflow Architecture

April 26, 2021