Products

SambaNova’s AI platform is the technology backbone for the next decade of AI innovation. Customers are turning to SambaNova to quickly deploy state-of-the-art AI and deep learning capabilities that help them outcompete their peers.

The only enterprise-grade, full-stack platform purpose-built for generative AI

The platform for generative AI development and innovation

Use Cases

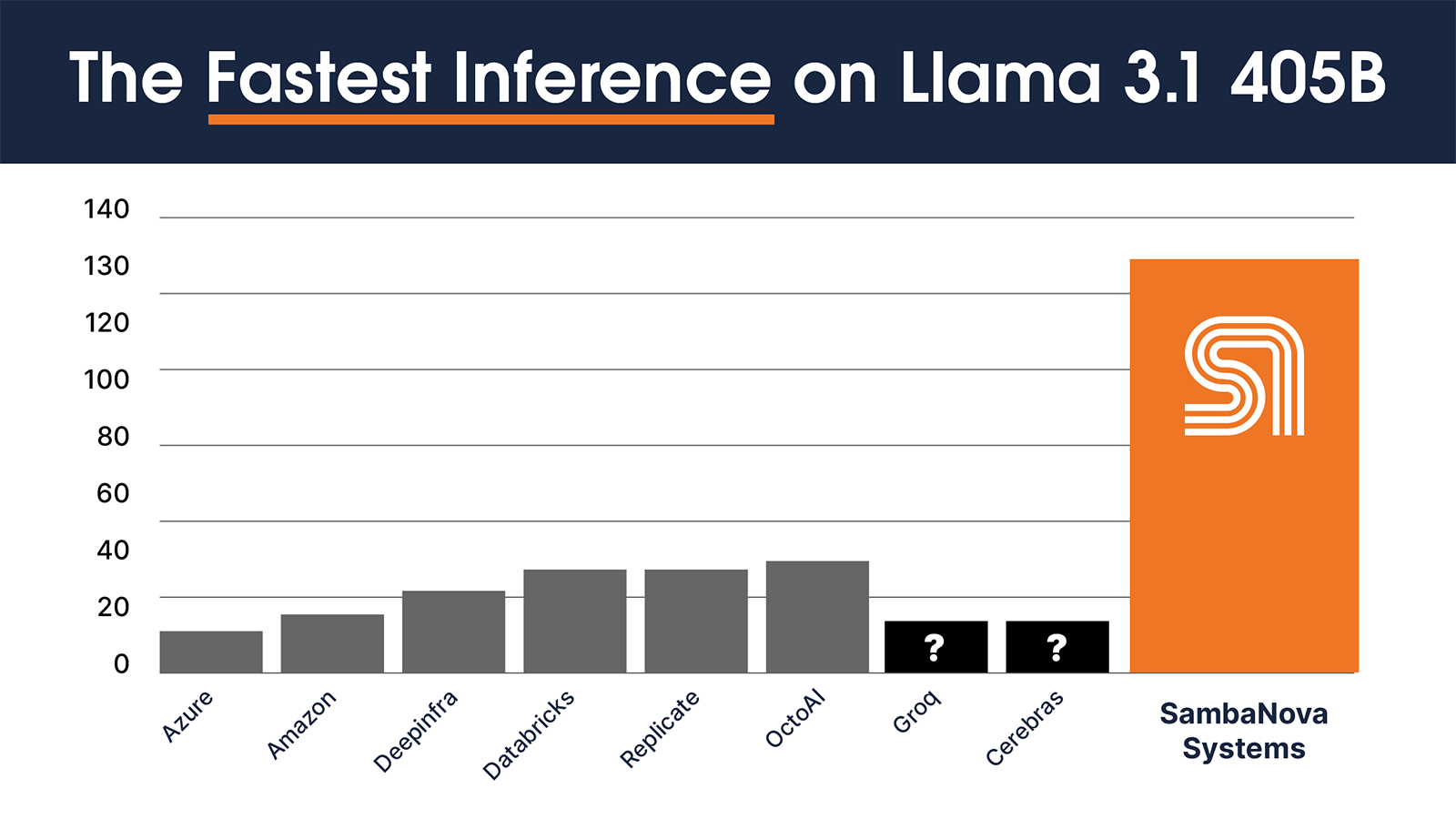

Supercharge your AI-powered applications across Llama 3 8B, 70B, & 405B models.

For free.

Technology

SambaNova delivers the only enterprise-grade, full-stack platform, from chips to models, purpose-built for generative AI

Power the most demanding generative and agentic AI workloads with the most performant & capable processor, purpose-built for AI

Easily run your custom models, accelerate discovery, & power the next generation of high-performance computing

Resources

Find what you need to accelerate your AI journey.

About

WHO WE ARE

Customers are turning to SambaNova to quickly deploy state-of-the-art AI capabilities to gain competitive advantage. Our purpose-built enterprise-scale AI platform is the technology backbone for the next generation of AI computing. We power the foundation models that unlock the valuable business insights trapped in data.

- Support