SambaRack

Hardware built for the data centers of today and tomorrow

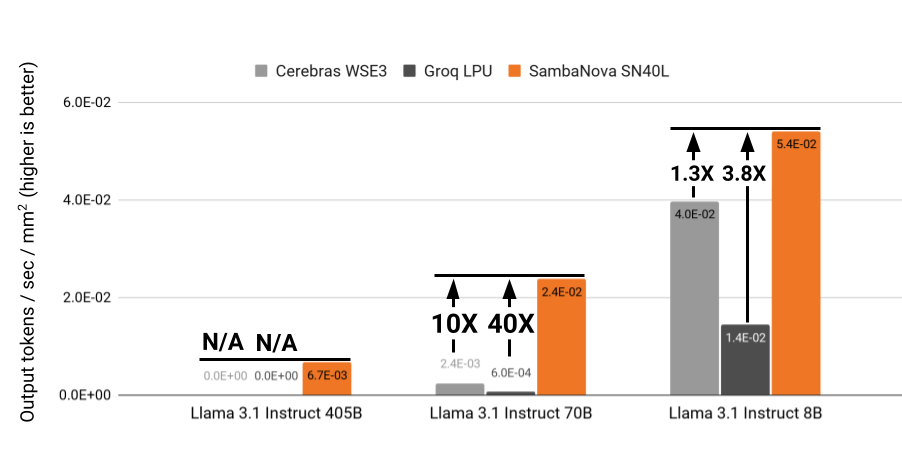

The most efficient token generation factory

SambaRack generates the most tokens per watt on any AI model. Maximize the output of your existing data center footprint and power budget as AI demand continues to grow exponentially.

The advantages of SambaRack

Fast inference on the best models

SambaRack delivers industry-leading speed and accuracy on the largest and best models, as benchmarked by Artificial Analysis.

Seamless turnkey deployments

A turnkey data center solution, SambaRack integrates hardware, networking, and software into a single, self-contained system. Racks are readily available in North America, and systems can be installed in a matter of months, so you are up and running quickly.

Efficiency at the core

At the heart of SambaRack are 16 SN40L Reconfigurable Dataflow Unit (RDU) chips, which use the DataFlow architecture to deliver high performance and efficiency for fast inference on massive AI models.

Secure, fast, flexible

Industries worldwide tap into the SambaRack performance advantage.

Developers & Enterprise

SambaRack powers leading businesses with private, plug-and-play, fast AI.

Government & Public Sector

Gain secure, flexible, fast AI inference for nations worldwide.

Data Centers

Unlock new revenue streams by deploying AI in existing infrastructure.

Related resources