Generative AI promises to significantly improve many business processes across the enterprise. Nearly all enterprises have run proofs of concept with many of the models available today, but the primary concerns in moving these solutions to production are security and reliability. Open-source AI models, with their power and flexibility, offer a path to making generative AI solutions more secure.

Open-source models allow enterprises to keep their data on-premises while integrating it into their solutions. When data stays on-premises, sensitive information is protected without being exposed to third-party vendors. This approach also gives enterprises the flexibility and customization they need in production. The challenge is that open-source models require more customization to safeguard against common security threats such as prompt injection, data manipulation, and adversarial exploits.

“The perception that open AI models aren’t enterprise-ready is a myth. With the right guardrails, open models can match — or even outperform — closed models from providers like OpenAI and Anthropic. At LatticeFlow AI, our risk assessments have demonstrated this repeatedly, enabling enterprises to deploy open models securely and with confidence,” commented Dr. Petar Tsankov, CEO and co-founder of LatticeFlow AI.

“The perception that open AI models aren’t enterprise-ready is a myth. With the right guardrails, open models can match — or even outperform — closed models from providers like OpenAI and Anthropic. At LatticeFlow AI, our risk assessments have demonstrated this repeatedly, enabling enterprises to deploy open models securely and with confidence,” commented Dr. Petar Tsankov, CEO and co-founder of LatticeFlow AI.

In collaboration with LatticeFlow AI, SambaNova has validated a better approach to addressing security challenges when deploying models, and has benchmarked the results. As just announced, open models equipped with targeted guardrails achieve near-flawless security (up to 99.6%) while maintaining >98% service quality. This is no longer theoretical: the approach has been tested and proven across rigorous enterprise attack simulations.

Why every enterprise should care

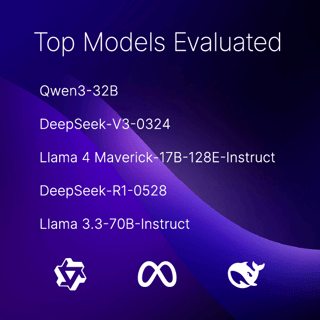

SambaNova partnered with LatticeFlow AI to evaluate the five models most used by developers and enterprises on SambaCloud. The security evaluations compared each base model on its own against the same model paired with a guardrail model.

What we uncovered:

- Guardrails add security without hindering performance. All models retained >98% usability, which is critical for customer-facing applications.

- Top open-source models, such as Llama-3.3-70B and DeepSeek-R1, saw security scores rise from as low as 1.8% to as high as 99.6% when shielded by purpose-built guardrails.

- When deployed with guardrails, open-source models match, and in some cases exceed, the security of closed-source models.

LatticeFlow AI has released complimentary risk reports for enterprises to use; links to all of them are in the supported models section of the SambaNova Documentation. While regulated sectors such as finance must deploy secure solutions for compliance reasons, every enterprise should embrace security for brand trust, IP protection, and operational resilience.

SambaNova's commitment to security

We embed security throughout the deployment lifecycle. Whether enterprises use SambaCloud or SambaStack to deploy their AI inference, our SambaRack hardware unlocks the best performance in the most secure manner.

SambaCloud never sees or collects any of your data or user prompts, ensuring full data privacy. SambaCloud is also SOC 2 Type 2 compliant and certified. Our full list of certifications is available in our Trust Center.

At SambaNova, we strongly believe that performance and security go hand in hand. We are committed to delivering fast, efficient AI inference, powered by SambaRack, in the most secure manner, and to helping enterprises and governments succeed in deploying their solutions to production.