DeepSeek recently released another update to its V3 model architecture: DeepSeek-V3.1-Terminus! According to Artificial Analysis, this model is now one of the best open-source reasoning models, and SambaNova runs it at the fastest speeds in the world: over 200 tokens/second, with the fastest time to first token! Just like the previous DeepSeek-V3.1 update, the model supports hybrid thinking, enabling developers to switch between reasoning and non-reasoning modes.

Celebrating DeepSeek-V3.1-Terminus on SambaCloud

Developers who sign up on SambaCloud and join the Developer Tier by adding a payment method to their account will earn an additional $50 in credits to use on DeepSeek-V3.1-Terminus as well as all other models on SambaCloud! That translates to over 30 million FREE tokens of DeepSeek-V3.1, more than enough to finish vibe coding a few different applications. This offer is limited to the first 50 new users.

Why upgrade to DeepSeek-V3.1-Terminus?

DeepSeek-V3.1-Terminus has improved in two key areas and across several benchmarks:

- Language consistency, with fewer Chinese and English mix-ups and no more random characters.

- Agentic improvements that make it stronger at coding and search tasks.

| Benchmark | DeepSeek-V3.1 | DeepSeek-V3.1-Terminus |
|---|---|---|
| Reasoning mode w/o tool use | | |
| MMLU-Pro | 84.8 | 85.0 |
| GPQA-Diamond | 80.1 | 80.7 |
| Humanity's Last Exam | 15.9 | 21.7 |
| LiveCodeBench | 74.8 | 74.9 |
| Codeforces | 2091 | 2046 |
| Aider-Polyglot | 76.3 | 76.1 |
| Agentic tool use | | |
| BrowseComp | 30.0 | 38.5 |
| BrowseComp-zh | 49.2 | 45.0 |
| SimpleQA | 93.4 | 96.8 |
| SWE Verified | 66.0 | 68.4 |
| SWE-bench Multilingual | 54.5 | 57.8 |
| Terminal-bench | 31.3 | 36.7 |
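To make the size and direction of each change easier to read, the short sketch below computes the per-benchmark difference between the two columns. The scores are copied from the table above; the helper names `deltas` and `regressions` are illustrative only.

```python
# Scores copied from the table above: (benchmark, DeepSeek-V3.1, DeepSeek-V3.1-Terminus)
SCORES = [
    ("MMLU-Pro", 84.8, 85.0),
    ("GPQA-Diamond", 80.1, 80.7),
    ("Humanity's Last Exam", 15.9, 21.7),
    ("LiveCodeBench", 74.8, 74.9),
    ("Codeforces", 2091, 2046),
    ("Aider-Polyglot", 76.3, 76.1),
    ("BrowseComp", 30.0, 38.5),
    ("BrowseComp-zh", 49.2, 45.0),
    ("SimpleQA", 93.4, 96.8),
    ("SWE Verified", 66.0, 68.4),
    ("SWE-bench Multilingual", 54.5, 57.8),
    ("Terminal-bench", 31.3, 36.7),
]


def deltas(scores):
    """Map each benchmark to (Terminus score - V3.1 score), rounded to one decimal."""
    return {name: round(new - old, 1) for name, old, new in scores}


def regressions(scores):
    """List the benchmarks where the Terminus score is lower than the V3.1 score."""
    return [name for name, old, new in scores if new < old]


if __name__ == "__main__":
    for name, change in deltas(SCORES).items():
        print(f"{name:<24} {change:+.1f}")
    print("Lower on Terminus:", ", ".join(regressions(SCORES)))
```

Read this way, the largest gains are on BrowseComp, Humanity's Last Exam, and Terminal-bench, while Codeforces, Aider-Polyglot, and BrowseComp-zh come in slightly lower than on V3.1.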

Just like the previous V3.1 version, DeepSeek-V3.1-Terminus supports function calling only in non-reasoning mode, and DeepSeek has improved its function calling capabilities over the previous V3.1 model. This means it performs strongly in coding and is well suited for coding agents, as in the case of Blackbox, and for agentic frameworks like CrewAI.

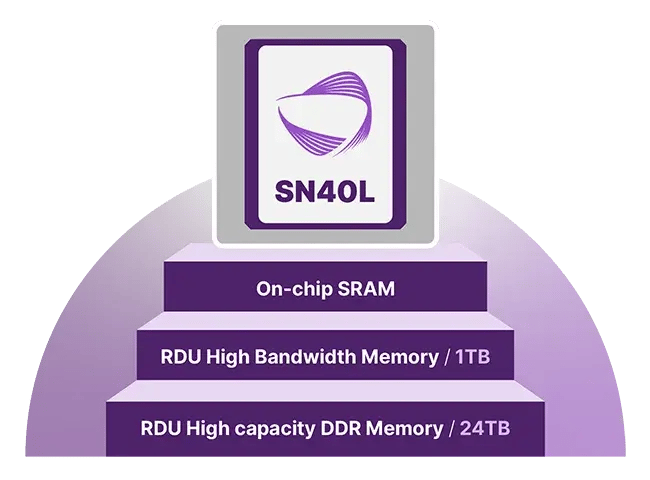

Unmatched Efficiency with SambaRacks

Because the model is stored in on-chip memory instead of host memory, we have measured the time to hotswap at an average of 650 milliseconds, which no other system in the world can achieve today. Model bundling and hotswapping with this level of efficiency allow enterprises and data centers to maximize their utilization of each and every rack for inference with our SambaStack and SambaManaged products. This level of performance is required for many applications, especially for cloud service providers that are dynamically managing AI inference workloads in real time across many racks.

Start building with relentless intelligence in minutes on SambaCloud

SambaCloud is a powerful platform that enables developers to easily integrate the best open-source models with the fastest inference speeds. Powered by our state-of-the-art AI chip, the SN40L, SambaCloud provides a seamless and efficient way to build AI applications with fast inference. Get started today and experience the benefits of fast inference speeds, maximum accuracy, and an enhanced developer experience, in just three easy steps!

- Head over to SambaCloud and create your own account

- Get an API Key

- Make your first API call with our OpenAI-compatible API

import json
import random

import openai

# Define the OpenAI client
client = openai.OpenAI(
    base_url="https://api.sambanova.ai/v1",
    api_key="YOUR SAMBANOVA API KEY",
)

MODEL = "DeepSeek-V3.1-Terminus"

def get_weather(city: str) -> dict:
    """
    Fake weather lookup: returns a random temperature between 20°C and 50°C.
    """
    temp = random.randint(20, 50)
    return {
        "city": city,
        "temperature_celsius": temp,
    }

To learn more about using the model, including within various frameworks, developers can visit our documentation portal. It covers function calling as well as integrations with coding assistants like Cline.
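The snippet above stops after defining `get_weather`. As a rough sketch of how that helper might be wired into a single function-calling round trip, the example below follows the standard OpenAI-style `tools` flow. The tool schema, the helper names `dispatch_tool_call` and `ask_about_weather`, and the model ID string are assumptions for illustration, not taken from the SambaCloud documentation.

```python
import json
import random

# Assumptions (not from the article): the tool schema, the helper names
# dispatch_tool_call / ask_about_weather, and the exact model ID string.
MODEL = "DeepSeek-V3.1-Terminus"
BASE_URL = "https://api.sambanova.ai/v1"


def get_weather(city: str) -> dict:
    """Fake weather lookup: random temperature between 20°C and 50°C."""
    return {"city": city, "temperature_celsius": random.randint(20, 50)}


# Tool description in the OpenAI "tools" format.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current temperature for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string", "description": "City name"}},
            "required": ["city"],
        },
    },
}

# Local registry mapping tool names to Python callables.
TOOLS = {"get_weather": get_weather}


def dispatch_tool_call(name: str, arguments: str) -> str:
    """Run the named local tool on JSON-encoded arguments; return a JSON string."""
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    return json.dumps(TOOLS[name](**json.loads(arguments)))


def ask_about_weather(prompt: str, api_key: str) -> str:
    """Send a prompt, run any tool calls the model requests, return the final reply."""
    import openai  # imported lazily so the helpers above work without the package

    client = openai.OpenAI(base_url=BASE_URL, api_key=api_key)
    messages = [{"role": "user", "content": prompt}]
    first = client.chat.completions.create(
        model=MODEL, messages=messages, tools=[WEATHER_TOOL]
    )
    reply = first.choices[0].message
    if not reply.tool_calls:
        return reply.content or ""

    messages.append(reply)  # keep the assistant turn that requested the tools
    for call in reply.tool_calls:
        messages.append({
            "role": "tool",
            "tool_call_id": call.id,
            "content": dispatch_tool_call(call.function.name, call.function.arguments),
        })
    final = client.chat.completions.create(
        model=MODEL, messages=messages, tools=[WEATHER_TOOL]
    )
    return final.choices[0].message.content or ""
```

Calling `ask_about_weather("What is the weather in Paris?", "YOUR SAMBANOVA API KEY")` would perform the round trip. This sketch handles only one round of tool calls; an agent loop would repeat the dispatch step until the model stops requesting tools.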