The challenges of deploying AI at scale are driving up costs and complexity, resulting in failed initiatives, robbing organizations of the benefits of AI, and squandering the resources spent. This is a direct result of the wide range of models that are deployed to power all of the AI applications and initiatives within an organization. Many of those applications will be mission critical, requiring high-speed inference and absolute availability.

Costs need to be managed, but far too often models have to be deployed at the rate of one model per system. The imbalance in model queries, meaning that some models receive constant user queries while others are used infrequently, exacerbates this issue. Frequently used models struggle to meet user demand, resulting in poor performance that stifles user adoption. Rarely used models consume the same resources, but use them sporadically with their compute resources sitting idle much of the time.

Model bundling — a capability only available through the SambaNova platform — solves these challenges by combining multiple models into a bundle and leveraging the unique SambaNova architecture to hot-swap models in milliseconds. Model bundles are optimized for SambaRack and allow seamless dynamic load balancing across a cluster of racks. This delivers lightning-fast inference on the best open-source models, such as DeepSeek and gpt-oss. Not only does it meet the most demanding performance needs, model bundling reduces the compute requirements needed to run models and helps to control costs. Complex reasoning models and agentic AI workflows benefit from the increased aggregate performance and high availability in real-world, multi-user environments.

The power of the SN40L RDU

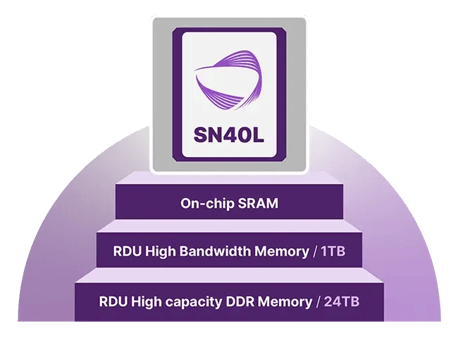

Key to the model bundling capability of the SambaNova platform is the architecture of the SambaNova SN40L Reconfigurable Dataflow Unit (RDU).

Among the innovations of the RDU is a three-tier memory architecture. This memory design includes up to 24 TB of high-capacity DDR DRAM, 1 TB of High Bandwidth Memory (HBM), and 8 GB of high-speed SRAM per node. This large memory footprint enables the RDU to both hold very large models, as well as a large numbers of models, and to run them with very high performance.

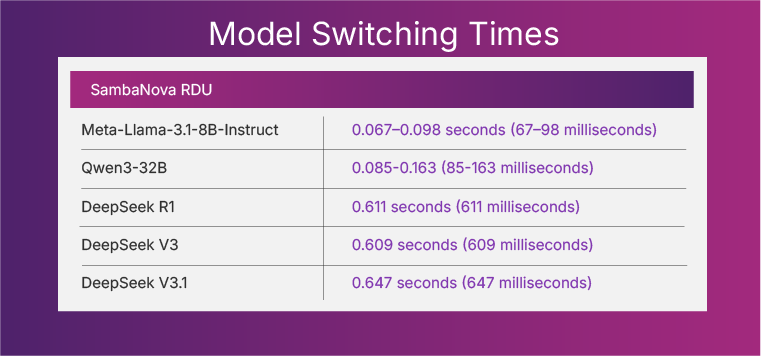

With the capability to hold models in HBM and rapidly process them in SRAM, the SN40L RDU is capable of switching models extraordinarily fast. Smaller models, such as Meta Llama 8B, can be switched out with other models in as little as 94 milliseconds. In addition, smaller models, like the Meta-Llama-3.3-70B and Meta-Llama-3.2-1B, are bundled together for speculative decoding, which optimizes inference by using a small model to draft a potential response to a query that is then verified by a larger model.

Reasoning models, such as Qwen3-32B, can be swapped out in as little as 85 milliseconds and bundled with Whisper-Large-v3 and E5-Mistral-7B-Instruct. Larger models, such as the DeepSeek variants, can be bundled together and switched out in less than 650 milliseconds. Larger models such as DeepSeek and gpt-oss-120B are available in a range of context lengths.

Fast model switching is particularly important for agentic workflows, where rapid model switching is essential. In an agentic workflow, there is a planner model that outlines the steps that are necessary to perform the task and identify the required models. The selection of models in the execution phase is highly variable and unpredictable. This unpredictability makes the ability to switch between models quickly a vital component of any agentic process. The RDU’s model switching speed ensures seamless, real-time performance.

How model bundling works

With SambaNova, a group of models, for example all four of the latest versions of DeepSeek — DeepSeek-V3-0324, DeepSeek-R1-0528, DeepSeek-V3.1, DeepSeek-V3.1-Terminus — can run on a single rack. The SN40L RDU enables fast switching between models as needed. SambaNova model bundling extends this capability across multiple racks within a single cluster, which provides several benefits.

For example, in a SambaCloud four-system cluster, all four of the DeepSeek models run on all four of the SambaNova systems. Each system is capable of responding to requests, so the system capable of responding the fastest handles each request as it is received. In this example, two systems could be responding to mission-critical applications, another could be handing lower priority batch requests, and the final system could be handing other ad hoc user requests. This will change dynamically to reflect the rapidly changing profile of requests that naturally occur within large organizations.

Scaling from four to eight models is simple, as the models can all run on the same four systems. If additional resources are required, the entire cluster can scale as needed, one system at a time.

Mission critical workflows that require high availability benefit as each rack in the cluster is running all of the models in the bundle. In the event of a disruption on one rack, the remaining systems continue to operate without interruption. Without this capability, additional redundant systems would be required to achieve high availability, which would increase costs and complexity without improving efficiency.

In a model bundle, multiple models are grouped in a “bundle” and loaded onto the SambaNova system in a cluster. Each system in the cluster runs all of the models in the bundle. All of the systems in the cluster then work together to provide a combination of performance and resource utilization that is not possible with other architectures.

Why model bundling matters for multi-user cloud environments

Model bundling is particularly beneficial to environments with multiple users in the cloud. Model performance is often measured in terms of a single user, but single-user environments are rare.

Organizations typically support multiple users, all accessing different models or groups of models. These highly dynamic environments have a high variability in user prompts. Often, there are a few models with use more and a larger number of models used less frequently. On SambaCloud today, the model or models used predominately can be held in the HBM of SambaNova systems for high speed, low latency access and large batch sizes. Models utilized less frequently can batch and process user requests together. By batching long tail models together, overall cluster utilization can be greatly increased, and overall performance can be increased.

In any large environment, there will be a high degree of variability in the models being accessed at any given time. Invariably, there will be some models that receive higher usage than others. When models are experiencing high usage, additional systems in the cluster can serve them, while models with lower utilization can be served by the remaining systems. Since the workload is shared across multiple systems, the model queue times are reduced with higher activity.

The future of AI workloads demands model bundling

As AI applications grow in complexity — especially with agentic workflows — the ability to efficiently serve multiple models becomes a competitive advantage. SambaNova’s model bundling delivers:

- Higher utilization (no stranded GPU resources)

- Lower latency (millisecond model switching)

- Simpler scaling (add models without adding hardware)

- Built-in high availability (no redundant systems needed)

For enterprises deploying large-scale AI, model bundling isn’t just an optimization — it’s a necessity.