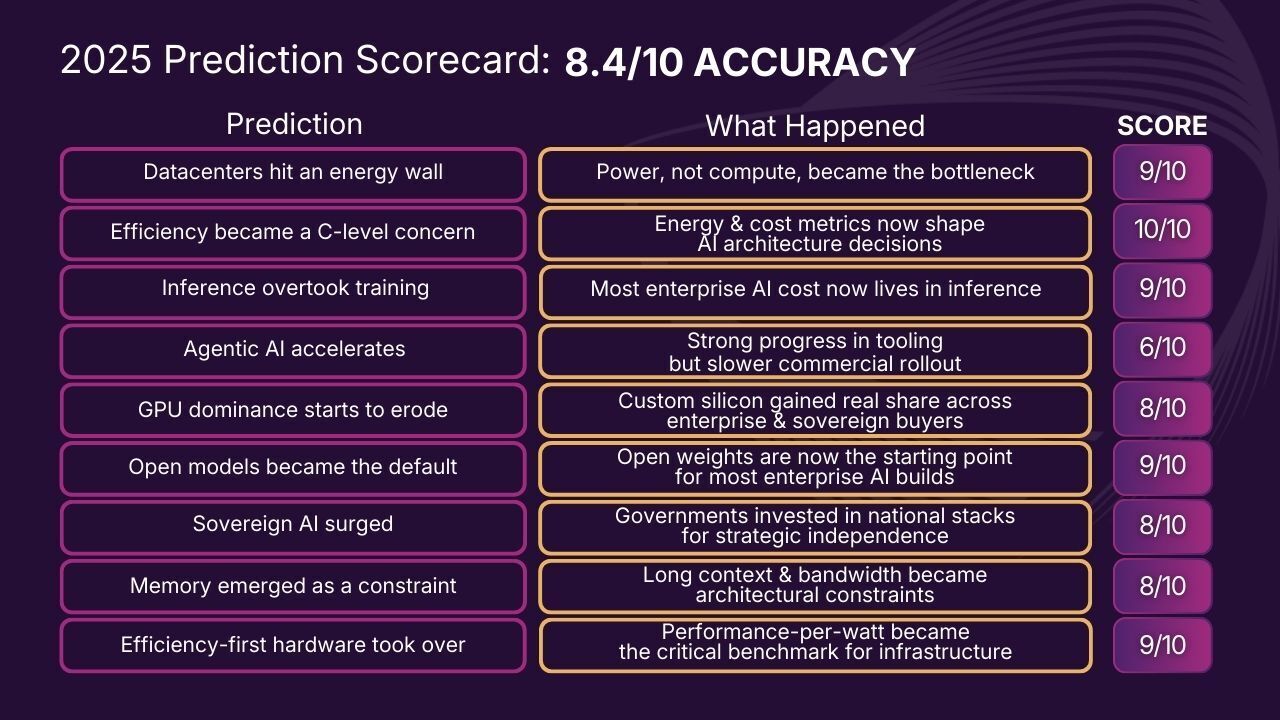

At the beginning of 2025, SambaNova published nine predictions about the future of AI — from power constraints and the rise of inference, to the shift toward open models and sovereign infrastructure.

A year later, those shifts are not only underway, they're accelerating. Inference now drives real-world cost. Power, not compute, is the new bottleneck. And control, not just capability, is shaping AI infrastructure decisions.

Here’s what happened in 2025, what we got right, and insights for 2026.

1. Inference Moved to Center Stage

2025 was the year enterprise AI became a deployment problem. Inference, not training, absorbed the majority of cost and complexity. Every prompt, every decision, every customer-facing task is an inference workload. And at scale, inference runs continuously, whereas training happens in large, occasional bursts.

The infrastructure response: optimize for speed, memory, latency, and energy — not just peak FLOPs.

2026 Takeaway:

Enterprises that focus on running inference with existing open-source models, rather than training new ones, will win by delivering more productivity and better customer experiences, and by scaling to meet growing demand.

2. Power Replaced Compute as the Bottleneck

In 2025, the limiting factor wasn’t chips — it was electricity. As demand surged, utilities in key markets began treating large AI clusters as industrial loads. Permitting, generation, and grid capacity couldn’t keep pace. Power allocation — not GPUs — became the gating factor for new AI-scale deployments.

KPIs like “intelligence per joule” and “tokens per watt” became critical to infrastructure planning. The most advanced models couldn’t run if the power wasn’t available.
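To make these KPIs concrete, here is a minimal sketch of the arithmetic. Since a watt is one joule per second, "tokens per watt" (throughput divided by power draw) works out to tokens per joule. The function names and the two example systems are hypothetical, and the numbers are illustrative placeholders, not measurements of any real hardware.

```python
def tokens_per_joule(tokens_per_second: float, power_watts: float) -> float:
    """Energy efficiency: (tokens/s) / (J/s) = tokens per joule."""
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return tokens_per_second / power_watts


def throughput_under_power_cap(efficiency_tokens_per_joule: float,
                               power_cap_watts: float) -> float:
    """Maximum sustained tokens/s a site can serve within a fixed power budget."""
    return efficiency_tokens_per_joule * power_cap_watts


# Hypothetical systems (illustrative numbers only).
system_a = tokens_per_joule(tokens_per_second=10_000, power_watts=10_000)
system_b = tokens_per_joule(tokens_per_second=12_000, power_watts=20_000)

# Assume the site has been allocated 1 MW of power.
site_cap_watts = 1_000_000
for name, eff in (("A", system_a), ("B", system_b)):
    capacity = throughput_under_power_cap(eff, site_cap_watts)
    print(f"System {name}: {eff:.2f} tokens/J, {capacity:,.0f} tokens/s at 1 MW")
```

In this made-up comparison, system B is faster per unit but system A serves more tokens overall once the site's power allocation, rather than the hardware count, is the fixed quantity. That is the sense in which power becomes the gating factor.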

2026 Takeaway:

AI hardware that can’t run efficiently, or be placed where energy is accessible, won’t scale.

3. Open-Weight Models Trending with Enterprise

Open-weight models such as LLaMA, DeepSeek, and gpt-oss-120b matured into core components of enterprise AI stacks. Enterprises chose open models not just to reduce cost, but to gain control. They could fine-tune on proprietary data, deploy in-region, and avoid the constraints of proprietary APIs, from usage limits to compliance risks.

As open ecosystems evolved, these models became the foundation for everything from internal GPTs and domain-specific copilots to sovereign national AI systems.

2026 Takeaway:

Open models aren’t a workaround. They’re infrastructure. Enterprises now start with open — and expect to build on top of it.

4. Sovereign AI Became an Infrastructure Priority

Governments followed the same logic at a national scale. From India and the Gulf to Europe and Japan, sovereign AI moved from whitepapers to budgeted, strategic investments. The goal: ensure control over training data, model alignment, infrastructure, and deployment geography.

SambaNova saw this shift directly in projects with national labs and corporations building custom models in secure, energy-efficient, sovereign environments.

2026 Takeaway:

Sovereign AI is no longer a theory. It’s a live infrastructure strategy for governments and critical industries.

5. The Hardware Stack Began to Flip

Inference became the dominant workload. But the legacy hardware stack wasn’t built to efficiently run inference. While GPUs remain essential, power and liquid cooling requirements made them increasingly inefficient for production-scale inference. Custom silicon entered the mainstream — designed specifically for high-throughput, long-context workloads.

SambaNova’s RDU, for example, runs frontier-scale models in a single rack with a fraction of the power draw of comparable GPU clusters. Architectures like this are now being adopted in sovereign, enterprise, and research settings alike.

2026 Takeaway:

The next wave of AI infrastructure will be inference-native, not GPU-first.

6. Agentic AI Advanced, But with Limits

Agentic AI made technical strides in 2025, but most real-world deployments didn’t match the hype. Where agentic systems were successful in improving productivity, they focused on tightly scoped tasks — financial workflows, customer support, coding — and relied on modular design, clear orchestration, and human oversight.

Fully autonomous “AI employees” proved to be more vision than reality. Agents that worked well resembled overconfident interns: capable, but best when supervised.

2026 Takeaway:

Agentic AI will thrive when integrated into structured, observable workflows — not as standalone replacements.

In 2025, the predictions stopped being forecasts and turned into operating conditions: Power really did beat compute; inference really did swallow the bill; open and sovereign really did become the default; and efficiency really did decide who could scale.

The 2026 takeaways are the response playbook to that reality: design workflows, not magic agents; treat data and process IP as the moat; optimize for real-world performance, not lab scores; and architect for open and sovereign from day one. The businesses that pull ahead now will be the ones that go fully AI-native, rebuilding around these lessons instead of bolting AI onto legacy systems. Everyone else will discover, the hard way, that the future was hiding in last year’s “predictions” all along.

The Bottom Line

2025 marked a strategic shift in AI infrastructure. Training took a backseat and inference became the cost center. Power became the limiting resource. Open models became the baseline. And a new hardware and software stack began to emerge, built not just to train large models, but to run them efficiently, reliably, and everywhere.

In 2026, the winners won’t be the ones who build the biggest model. They’ll be the ones who run it best.