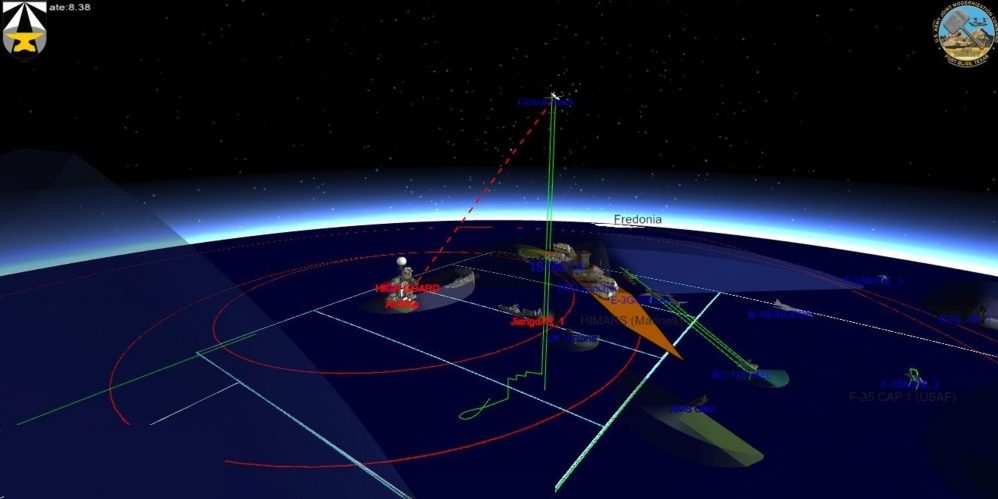

Thanks to the efforts of the Department of Defense, military services can now share data and rely on information from the Joint All-Domain Command and Control (JADC2) network. JADC2 has made data silos and stovepipe systems less of an obstacle, so information can be shared with less effort. Intelligence, surveillance, and reconnaissance (ISR) data can be combined and processed by artificial intelligence systems to improve situational awareness, identify targets, and produce useful sensor-to-shooter results for the warfighter that could save lives and ensure mission success.

This tactical and strategic intelligence depends heavily on sensor data provided by the JADC2 network and other sources. The volume and complexity of this data rule out human processing; it must be done by machine. Artificial intelligence and machine learning algorithms have proven to be an essential part of the JADC2 data processing toolbox, but conventional AI/ML systems still fall short when it comes to the trade-off between processing speed and accuracy.

Machine learning is a branch of artificial intelligence in which systems learn patterns from a corpus of pre-processed training data, often using clusters of computers that run many simple computations in parallel. After training on this corpus, a machine learning system should ideally be able to handle new inputs and return correct analyses.

One challenge with machine learning systems is that each individual node in a machine learning cluster must run its analysis on the same piece of data. This means that when a 4K image (or one as large as 50K) is sent through an ML system, it is not processed once by 1,000 CPUs, but 1,000 times simultaneously.

This redundancy produces results that are suboptimal in both accuracy and speed. Image processing is where conventional ML systems currently struggle most: existing CPU and GPU architectures are simply not up to the challenge of processing large images.

TRAINING WITH LARGE IMAGES IS DIFFICULT

Image capture technology is outstripping conventional ML systems' ability to process image data. For image processing results to be directly useful at the tactical edge, they must be both accurate and timely. Both are vital, but there is always a trade-off between the two: maximizing accuracy can take an unbounded amount of time, while maximizing speed yields less accurate, and therefore less useful, results.

Currently, GPU-based ML systems top out at images of around 2,000×2,000 pixels. While this might seem like an impressive size, satellite imagery is delivering images with a resolution of 50,000×50,000 pixels, 625 times as many pixels. Processing images of this size with the needed accuracy, within time constraints, is beyond the capabilities of even the best GPUs.
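To make the size gap concrete, here is a rough calculation. It assumes uncompressed 8-bit RGB imagery (3 bytes per pixel); that figure is an illustrative assumption, since real satellite products vary in band count and bit depth.

```python
# Back-of-the-envelope comparison of the two image sizes cited above.
# Assumption (not from the text): 3 bytes per pixel (8-bit RGB, uncompressed).

GPU_LIMIT_SIDE = 2_000   # approx. largest image side GPU-based systems handle
SATELLITE_SIDE = 50_000  # satellite image side cited in the text
BYTES_PER_PIXEL = 3      # hypothetical: 8-bit RGB


def pixel_count(side: int) -> int:
    """Number of pixels in a square image with the given side length."""
    return side * side


def raw_size_gb(side: int, bytes_per_pixel: int = BYTES_PER_PIXEL) -> float:
    """Uncompressed size in gigabytes (10**9 bytes)."""
    return pixel_count(side) * bytes_per_pixel / 1e9


ratio = pixel_count(SATELLITE_SIDE) // pixel_count(GPU_LIMIT_SIDE)
print(f"Pixel ratio: {ratio}x")                            # Pixel ratio: 625x
print(f"2k image:  {raw_size_gb(GPU_LIMIT_SIDE):.3f} GB")  # 2k image:  0.012 GB
print(f"50k image: {raw_size_gb(SATELLITE_SIDE):.1f} GB")  # 50k image: 7.5 GB
```

Under this assumption a single uncompressed 50,000×50,000 frame occupies about 7.5 GB before any model activations are stored, which is why it cannot simply be fed to a model sized for 2,000×2,000 inputs.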

Two approaches are commonly used when processing images of this size: downscaling and tiling. Both have their limitations.

Downscaling

High-resolution images can be reduced in size to make them more manageable. This process, called downscaling, uses resampling algorithms to combine neighboring pixels into fewer pixels, producing a smaller image that preserves as much of the original image's information as possible.

Unfortunately, downscaling always discards data: as image resolution decreases, so does the information available to the ML system. The result is that a large amount of accuracy is sacrificed to gain speed.
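The loss described above can be seen in a toy example. The sketch below uses block averaging, one simple downscaling technique chosen for illustration (production resamplers such as bicubic or Lanczos are more sophisticated), on a tiny grayscale image containing a single bright "target" pixel.

```python
# Minimal sketch of downscaling by block averaging (one simple technique;
# not necessarily what any particular ML pipeline uses).
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def downscale(img: Image, factor: int) -> Image:
    """Average each factor x factor block into a single output pixel."""
    h, w = len(img), len(img[0])
    if h % factor or w % factor:
        raise ValueError("image dimensions must be divisible by factor")
    out: Image = []
    for r in range(0, h, factor):
        row = []
        for c in range(0, w, factor):
            block = [img[r + i][c + j] for i in range(factor) for j in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


# A 4x4 dark scene with a single bright 1-pixel "target".
scene = [[0.0] * 4 for _ in range(4)]
scene[1][2] = 255.0

small = downscale(scene, 2)
print(small)  # [[0.0, 63.75], [0.0, 0.0]]
```

The target survives only as a dim smear at a quarter of its original intensity, and its exact position inside the 2×2 block can no longer be recovered. Shrinking a 50,000-pixel side to 2,000 is a 25× reduction per axis, so with this method each output pixel would average 625 input pixels, diluting small features far more.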

Tiling

By splitting one large image into smaller images, you can spread the work of image processing across multiple ML systems. This splitting process is called tiling, and on the surface it seems to be a good workaround for the limitations of GPU-based ML systems. Essentially, this approach divides one large project into multiple jobs, then handles the jobs in parallel, adding more AI systems as the workload grows.
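A minimal sketch of the tiling idea, under illustrative assumptions: the image is addressed only by its dimensions, `analyze_tile` is a hypothetical stand-in for a per-tile ML model, and a thread pool stands in for a cluster of separate ML systems.

```python
# Minimal sketch of tiling: split a large image into fixed-size tiles and
# process each tile as an independent job in parallel.
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple

Tile = Tuple[int, int, int, int]  # (row, col, height, width) in pixels


def tile_grid(height: int, width: int, tile: int) -> List[Tile]:
    """Return tile rectangles covering the image; edge tiles may be smaller."""
    tiles = []
    for r in range(0, height, tile):
        for c in range(0, width, tile):
            tiles.append((r, c, min(tile, height - r), min(tile, width - c)))
    return tiles


def analyze_tile(t: Tile) -> int:
    """Hypothetical per-tile job; here it just returns the tile's pixel count."""
    _, _, h, w = t
    return h * w


tiles = tile_grid(50_000, 50_000, 2_000)
print(len(tiles))  # 625 jobs, one per 2,000 x 2,000 tile

# The thread pool stands in for a cluster of separate ML systems.
with ThreadPoolExecutor(max_workers=8) as pool:
    results = list(pool.map(analyze_tile, tiles))

print(sum(results) == 50_000 * 50_000)  # True: the tiles cover every pixel once
```

Each tile now fits the roughly 2,000×2,000 size that GPU-based systems can handle, at the cost of turning one image into 625 separate jobs that must be scheduled, tracked, and recombined.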

There are limitations to the tiling method that even more computing nodes cannot solve, though. Tiling uses an algorithm to split a larger file into a number of smaller files, or tiles. Objects that straddle tile boundaries are cut into pieces, and a model working on one tile loses the context around its edges, so when the per-tile results are recombined into a picture of the whole image, information about features that cross those edges is lost.
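The boundary problem can be shown by checking which objects no single tile contains in full. This sketch assumes, for illustration only, that objects are axis-aligned bounding boxes and that tiles form a regular grid of square tiles; the object names and coordinates are hypothetical.

```python
# Minimal sketch: find which objects are cut by tile boundaries.
from typing import List, NamedTuple


class Box(NamedTuple):
    name: str
    r0: int  # top row (inclusive)
    c0: int  # left column (inclusive)
    r1: int  # bottom row (exclusive)
    c1: int  # right column (exclusive)


def tiles_touched(box: Box, tile: int) -> int:
    """Number of grid tiles the box overlaps."""
    rows = (box.r1 - 1) // tile - box.r0 // tile + 1
    cols = (box.c1 - 1) // tile - box.c0 // tile + 1
    return rows * cols


def split_objects(boxes: List[Box], tile: int) -> List[str]:
    """Names of objects that no single tile contains in full."""
    return [b.name for b in boxes if tiles_touched(b, tile) > 1]


objects = [
    Box("vehicle_a", 100, 100, 140, 160),       # well inside the first tile
    Box("vehicle_b", 1_980, 500, 2_030, 560),   # straddles the row boundary at 2,000
    Box("runway", 3_900, 3_800, 4_100, 4_300),  # crosses the tile corner at (4,000, 4,000)
]
print(split_objects(objects, tile=2_000))  # ['vehicle_b', 'runway']
```

A per-tile model sees only a fragment of `vehicle_b` and `runway`, so it may miss them, or detect them twice on either side of the edge, before the results are merged.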

Adding more computing nodes creates new challenges as well. More computers require more people and more time, and they create more points of failure. As clusters of AI systems become more complex, expanding them takes longer. Even with well-designed clusters of conventional nodes, a tiling solution rapidly approaches the limits of system complexity and speed.
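The "more points of failure" effect compounds quickly. Assuming, purely for illustration, that each node fails independently with the same probability during a job, the chance that at least one node fails grows with cluster size:

```python
# Minimal sketch of why more nodes mean more points of failure.
# Assumption (illustrative): each node fails independently with probability
# p_node during a job, so P(at least one failure) = 1 - (1 - p_node) ** n.


def p_any_failure(n_nodes: int, p_node: float) -> float:
    """Probability that at least one of n independent nodes fails."""
    return 1.0 - (1.0 - p_node) ** n_nodes


for n in (1, 10, 100, 625):
    print(f"{n:>4} nodes: {p_any_failure(n, 0.001):.1%}")
```

With a hypothetical per-node failure rate of 0.1%, a 625-node job (one node per tile of a 50,000×50,000 image) has close to a one-in-two chance of hitting at least one failure, which is why large clusters need retry and recovery machinery that adds further complexity.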

HANDLING MULTIPLE INPUTS

While JADC2 is a critical component of tactical and strategic awareness, information can arrive from many diverse sources at any moment. Much of it may come from unexpected or ad hoc sources. For example, drone video, radar imagery, or vehicle dashboard cameras might all deliver relevant intelligence that helps build a more comprehensive tactical picture.

ML systems need to be able to incorporate this additional data into existing JADC2 data. Multiple inputs are particularly critical at the tactical edge, where forward-deployed military forces need to incorporate up-to-date sensor information rapidly and often. One challenge is that while JADC2 data consists primarily of high-resolution satellite imagery, additional inputs could be video or audio. This requires the ability to infer, process, and incorporate disparate spatial information to build a better model of the current tactical situation.
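One common way to incorporate disparate inputs is to normalize each source into a shared record format before merging. The sketch below is hypothetical throughout: the `Observation` fields, source labels, date, and coordinates are illustrative and are not part of any JADC2 interface.

```python
# Minimal sketch of bringing disparate inputs into one common record format
# so they can be merged with existing imagery-derived data.
# All names and values here are hypothetical illustrations.
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List


@dataclass(frozen=True)
class Observation:
    source: str     # e.g. "satellite", "drone_video", "dashcam"
    time: datetime  # UTC timestamp of the observation
    lat: float      # latitude in degrees
    lon: float      # longitude in degrees
    label: str      # what the source (or a model) reports seeing


def merge(*streams: List[Observation]) -> List[Observation]:
    """Combine observations from any number of sources into one timeline."""
    combined = [obs for stream in streams for obs in stream]
    return sorted(combined, key=lambda o: o.time)


def utc(h: int, m: int) -> datetime:
    """Helper for building example UTC timestamps on an arbitrary date."""
    return datetime(2024, 1, 1, h, m, tzinfo=timezone.utc)


satellite = [Observation("satellite", utc(10, 0), 34.05, -117.60, "vehicle")]
drone = [Observation("drone_video", utc(9, 55), 34.06, -117.61, "vehicle")]
dashcam = [Observation("dashcam", utc(10, 5), 34.05, -117.59, "checkpoint")]

for obs in merge(satellite, drone, dashcam):
    print(obs.time.strftime("%H:%M"), obs.source, obs.label)
```

The design choice is to push format-specific work (decoding video frames, running detectors on audio or imagery) to the edges, so that downstream fusion only ever deals with one record type.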

These inputs present a particular challenge when existing ML architecture is already pushed to the limits of its processing power. If GPUs are already limited to images of roughly 2,000×2,000 pixels, the processing demands of additional video and image inputs will either decrease accuracy or push processing time beyond useful limits.
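A rough calculation shows how quickly a single extra input adds up. It assumes, for illustration, one 1080p (1,920×1,080) video feed at 30 frames per second and compares its raw pixel rate with one 2,000×2,000 image.

```python
# Rough pixel-throughput comparison between one full-motion video feed and
# the ~2,000 x 2,000 image size the text gives as a practical GPU limit.
# Assumptions (illustrative): 1080p video (1,920 x 1,080) at 30 frames/s.


def pixels_per_second(width: int, height: int, fps: float) -> float:
    """Raw pixel rate of a video stream."""
    return width * height * fps


video_rate = pixels_per_second(1_920, 1_080, 30)
image_pixels = 2_000 * 2_000

# How many 2k-image equivalents one video feed adds every second.
print(f"{video_rate / image_pixels:.1f} images' worth of pixels per second")
# 15.6 images' worth of pixels per second
```

Under these assumptions, each drone or dashboard camera feed adds the equivalent of more than fifteen full-size images per second to a system that is already at capacity.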

THE PATH FORWARD

Ultimately, a platform can only provide so much computing power. We are running up against the limits of what CPU- and GPU-based architectures can provide for AI solutions. As we are faced with an ever-growing, increasingly complex matrix of sensor-generated data, we need to carefully evaluate what a next-generation AI platform will look like.

The next generation of AI platforms needs to be designed for AI from the ground up. Hardware and software should be well-integrated and optimized for AI and ML from the silicon all the way up to the user interface. These next-generation systems need to address bottlenecks in legacy core-based systems, particularly in the areas of data caching and excess data movement across both storage and memory. In addition, tomorrow’s platform needs to be modular and easy to expand.

Fortunately for the world of AI, such systems have been under development for some time and are now being used in cutting-edge applications. Applying image processing and other ML methods to JADC2 and other sensor inputs to provide critical awareness and intelligence at the tactical edge is just one of many fields where next-generation approaches can make a difference.