AI is a transformative technology that will impact every facet of our lives. To realize that potential, the legacy solutions that have powered AI so far are being replaced by a new architecture designed for the requirements of AI.

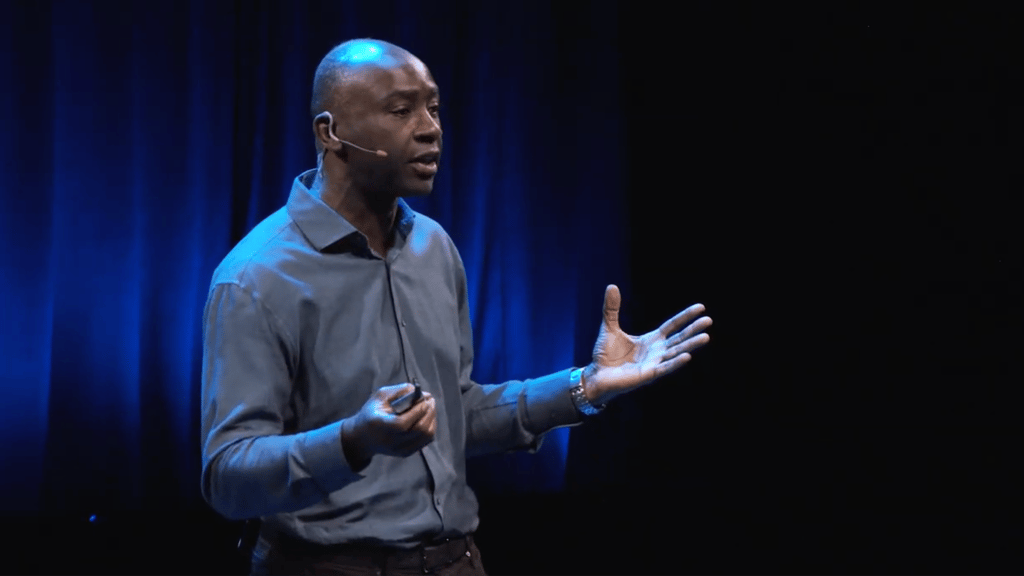

SambaNova Co-Founder Kunle Olukotun’s keynote address at SC21, The International Conference for High Performance Computing, Networking, Storage, and Analysis, explained how letting the data flow enables AI to become the transformative technology that ushers in a truly AI-enabled world.

One of the primary challenges in delivering on the potential of AI and ML systems has been the exponential rate at which AI models are growing. AI model size and compute requirements have been doubling every two and a half months. Today’s largest AI models contain trillions of parameters and involve terabytes (TB) of data, and they will only grow from here.
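
To put that rate in perspective, doubling every 2.5 months compounds to roughly 28x per year, since 2^(12/2.5) = 2^4.8 ≈ 27.9. The short Python sketch below simply evaluates that formula. The function name `growth_factor` is illustrative, and the 2.5-month doubling period is the figure quoted above, not an independent measurement.

```python
# Compound growth implied by a fixed doubling period.
# Assumption: the 2.5-month doubling period quoted in the text holds steadily.
DOUBLING_PERIOD_MONTHS = 2.5


def growth_factor(months: float, doubling_period: float = DOUBLING_PERIOD_MONTHS) -> float:
    """Return how many times larger a quantity becomes after `months`
    if it doubles every `doubling_period` months."""
    return 2 ** (months / doubling_period)


if __name__ == "__main__":
    for months in (12, 24, 36):
        print(f"after {months} months: ~{growth_factor(months):,.0f}x")
```

Because the growth is exponential, each additional year multiplies the requirement by the same ~28x factor rather than adding a fixed amount.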

The systems powering AI applications struggle with these models today. How will they handle them tomorrow?

Existing CPU- and GPU-based architectures cannot support this growth at scale, and the problem is built into the architectures themselves.

CPUs are good at AI inference, which uses smaller datasets, but they cannot process the massive volumes of data required for AI training. GPUs are used for model training because they excel at parallel processing, but their limitations, including the limited amount of memory per GPU and inefficient use of main memory during processing, have led organizations to use hundreds or even thousands of GPUs to train models and still fall short of expectations.
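
A back-of-the-envelope calculation shows why per-GPU memory becomes a bottleneck. The sketch below computes a lower bound on how many devices are needed just to hold a model's state in device memory. Every number in it is an assumption for illustration: a 1-trillion-parameter model, 80 GB of memory per GPU, 2 bytes per parameter for FP16 weights alone, and a commonly cited rule of thumb of about 16 bytes per parameter for mixed-precision training with the Adam optimizer (weights, gradients, and optimizer state). The helper `min_devices` is hypothetical, and the estimate ignores activations, buffers, and fragmentation, so real deployments need more.

```python
import math


def min_devices(num_params: float, bytes_per_param: float, device_mem_gb: float) -> int:
    """Lower bound on the number of devices needed just to hold the model
    state in device memory (ignores activations, buffers, fragmentation)."""
    total_bytes = num_params * bytes_per_param
    return math.ceil(total_bytes / (device_mem_gb * 1e9))  # decimal GB


if __name__ == "__main__":
    params = 1e12   # assumed: a 1-trillion-parameter model
    device_gb = 80  # assumed: 80 GB of memory per GPU
    # assumed: 2 bytes/param for FP16 weights only
    print("weights only:", min_devices(params, 2, device_gb))
    # assumed: ~16 bytes/param for mixed-precision training with Adam
    print("training state:", min_devices(params, 16, device_gb))
```

Under these assumptions, the weights alone need 25 GPUs and the full training state needs 200, before any activations are stored.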

Further, the inherent inefficiency of using GPUs for training and CPUs for inference has only compounded the problem. This is why Kunle discussed the need for convergence, where a single platform can be used for both training and inference.

Kunle explained how SambaNova addresses these challenges and enables organizations to achieve goals they did not think were possible. One example is computer vision, where GPUs struggle with 4K x 4K images, while the SambaNova solution can handle images that are 40K x 60K with ease.
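
The gap between those two image sizes is larger than the side lengths suggest. The sketch below compares pixel counts and the size of a single input tensor. It assumes "4K" and "40K" mean 4,000 and 40,000 pixels, three color channels, and 4 bytes (FP32) per value; these are illustrative assumptions, not details from the keynote, and the helper names are hypothetical.

```python
def pixel_count(height: int, width: int) -> int:
    """Number of pixels in a height x width image."""
    return height * width


def input_tensor_gb(height: int, width: int, channels: int = 3, bytes_per_value: int = 4) -> float:
    """Size in decimal GB of one image stored as a dense tensor.
    Assumed defaults: 3 channels (RGB), FP32 values."""
    return height * width * channels * bytes_per_value / 1e9


if __name__ == "__main__":
    small = (4_000, 4_000)    # assumed meaning of "4K x 4K"
    large = (40_000, 60_000)  # assumed meaning of "40K x 60K"
    ratio = pixel_count(*large) // pixel_count(*small)
    print(f"pixel ratio: {ratio}x")
    print(f"4K x 4K input:   {input_tensor_gb(*small):.3f} GB")
    print(f"40K x 60K input: {input_tensor_gb(*large):.1f} GB")
```

The larger image has 150 times as many pixels, and under these assumptions its input tensor alone is about 28.8 GB, before any intermediate activations are counted.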

Optimized for dataflow, the SambaNova Reconfigurable Dataflow Architecture (RDA) maps parallel patterns across an array of reconfigurable compute, memory, and communication resources. This design exploits both data locality and parallelism.
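
As a loose software analogy for the dataflow idea, the sketch below expresses one computation (scale, square, sum) in two ways. The "kernel-by-kernel" version materializes a full intermediate list after each step, much as separate kernels write intermediates back to memory. The "dataflow" version chains map and reduce patterns so each element streams through every stage without a full intermediate collection being built. This is a hypothetical illustration only: the function names are invented, it is not SambaNova's API or compiler, and Python generators interleave the stages on one thread, so the sketch captures the locality aspect but not the spatial parallelism of hardware where stages run concurrently.

```python
from functools import reduce
from typing import Callable, Iterable, Iterator


def map_stage(fn: Callable[[float], float], stream: Iterable[float]) -> Iterator[float]:
    """Map pattern: apply fn to each element as it arrives (no buffering)."""
    for x in stream:
        yield fn(x)


def reduce_stage(fn: Callable[[float, float], float], stream: Iterable[float], init: float) -> float:
    """Reduce pattern: fold the stream into a single value."""
    return reduce(fn, stream, init)


def kernel_by_kernel(xs: Iterable[float]) -> float:
    # Each step builds a complete intermediate list before the next step runs.
    scaled = [x * 2.0 for x in xs]
    squared = [x * x for x in scaled]
    return sum(squared)


def dataflow(xs: Iterable[float]) -> float:
    # Stages are chained: each element flows scale -> square -> sum,
    # so no full intermediate collection is ever stored.
    scaled = map_stage(lambda x: x * 2.0, xs)
    squared = map_stage(lambda x: x * x, scaled)
    return reduce_stage(lambda acc, x: acc + x, squared, 0.0)


if __name__ == "__main__":
    data = [1.0, 2.0, 3.0]
    print(kernel_by_kernel(data), dataflow(data))
```

Both versions compute the same result; the difference is only in how much intermediate data must be held at once.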

Finally, Kunle detailed how SambaNova is delivering a true next-generation solution that overcomes the limitations of CPU- and GPU-based architectures to power the AI-enabled future.