We are pleased to announce that SambaStudio now supports text embedding models. This new feature significantly boosts the information retrieval capabilities of the SambaNova Suite and enhances large language models (LLMs) with Retrieval Augmented Generation (RAG) capabilities. RAG improves base LLMs in several respects, including better factuality and fewer hallucinations, by supplying the LLM with relevant context. This functionality benefits our customers and improves the efficacy of the model.

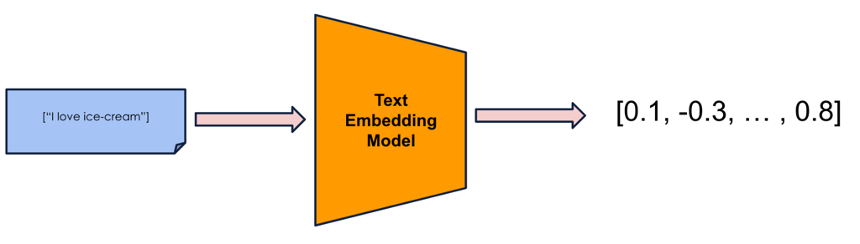

Text embedding models convert a piece of text into a d-dimensional vector of real numbers, as illustrated in Figure 1.

Figure 1: A text embedding model converts text into a d-dimensional embedding vector

The vectors produced by embedding models are arranged so that texts with similar meanings are clustered together and texts with different meanings are far apart. This makes text embeddings ubiquitous in information retrieval systems such as search and recommendation. Texts are converted to embedding vectors, and the texts most similar to a given input can then be found by looking for the vectors closest to that input's vector.
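To make the nearest-vector idea concrete, here is a minimal sketch in Python. The three-dimensional vectors are made-up values chosen for illustration, not output from an actual embedding model, and cosine similarity is assumed as the closeness measure since the text above does not name one.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query_vec, corpus, k=2):
    """Return the k texts whose vectors are closest to query_vec."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in corpus.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


# Hypothetical embeddings: real models use hundreds of dimensions or more.
corpus = {
    "How do I reset my password?": [0.9, 0.1, 0.0],
    "Steps to change a forgotten password": [0.8, 0.2, 0.1],
    "Best hiking trails near Denver": [0.0, 0.1, 0.9],
}
query_vec = [0.85, 0.15, 0.05]  # pretend embedding of "I forgot my password"

top = nearest(query_vec, corpus, k=2)
print(top)
```

The two password-related texts rank above the hiking text because their vectors point in nearly the same direction as the query vector.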

More specifically, we are starting with e5_large_v2, a versatile text embedding model that performs well across a wide range of text embedding use cases. It is one of the top-ranked open-source models on the Massive Text Embedding Benchmark (MTEB).

Embedding model support on SambaNova Studio comes with an offline batch inference mode as well as an online inference API endpoint. The offline batch mode can be used to construct approximate nearest neighbor (ANN) indexes or vector databases from an existing document corpus. The online inference API endpoint, in turn, allows a piece of text to be embedded in the request path so that relevant documents can be retrieved.
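The split between the offline and online modes can be sketched as follows in Python. This is an illustration under stated assumptions, not the SambaNova Studio API: `toy_embed` is a hypothetical stand-in (a hashed bag-of-words) for whatever call returns an embedding, and the "index" is a plain list searched by brute force rather than a real ANN index or vector database.

```python
import math
import zlib

DIM = 256  # toy dimensionality, chosen arbitrarily for this sketch


def toy_embed(text):
    """Hypothetical stand-in for an embedding model: hashed bag-of-words, L2-normalized."""
    vec = [0.0] * DIM
    for token in text.lower().split():
        vec[zlib.crc32(token.encode("utf-8")) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def build_index(documents, embed):
    """Offline step: embed the whole corpus once and store (vector, text) pairs."""
    return [(embed(doc), doc) for doc in documents]


def search(index, query, embed, k=1):
    """Online step: embed the incoming query, then rank the stored vectors against it."""
    query_vec = embed(query)
    ranked = sorted(index, key=lambda item: dot(query_vec, item[0]), reverse=True)
    return [doc for _, doc in ranked[:k]]


documents = [
    "how to reset a forgotten password",
    "quarterly revenue report",
    "hiking trails near the lake",
]
index = build_index(documents, toy_embed)           # run once, in batch
print(search(index, "reset password", toy_embed))   # run per request
```

In a real deployment, `embed` would be replaced by the batch job output for `build_index` and by a call to the online endpoint for `search`, and the list would be replaced by an ANN library or vector database.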

With these two capabilities, our customers can leverage the symbiotic relationship between information retrieval and LLMs to build powerful applications. At SambaNova, we are committed to delivering cutting-edge generative AI technology to empower our customers. The addition of embedding model support is a key stepping stone in that journey.

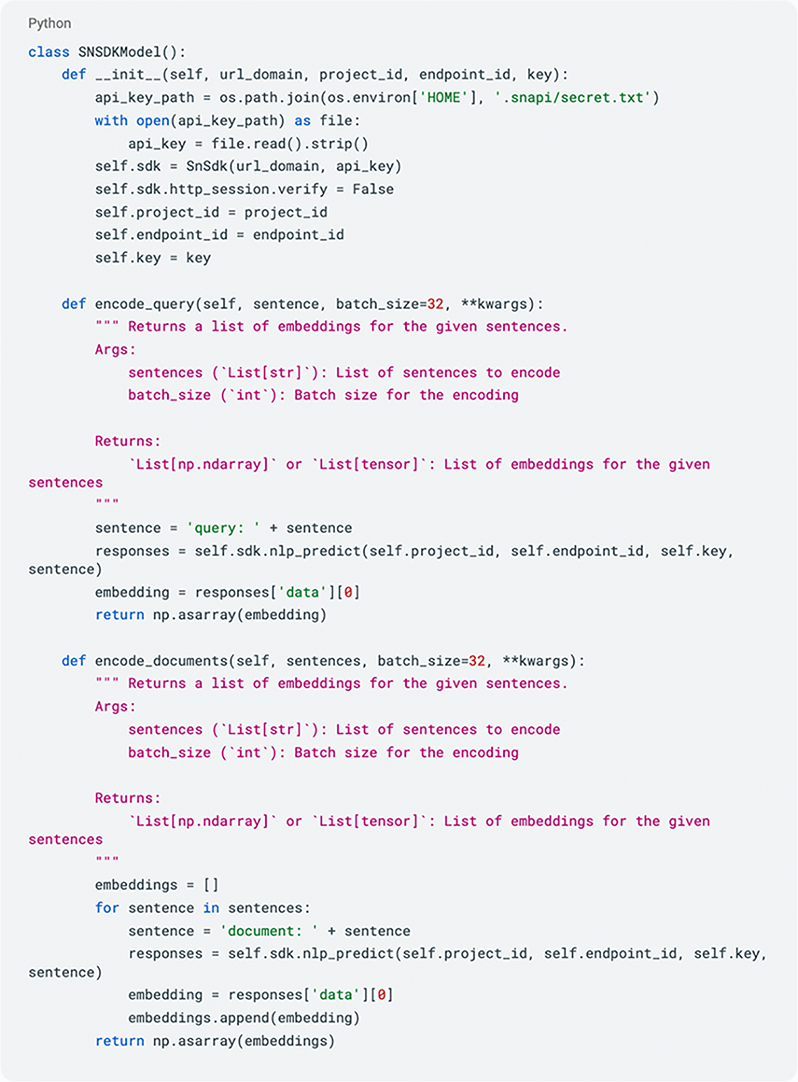

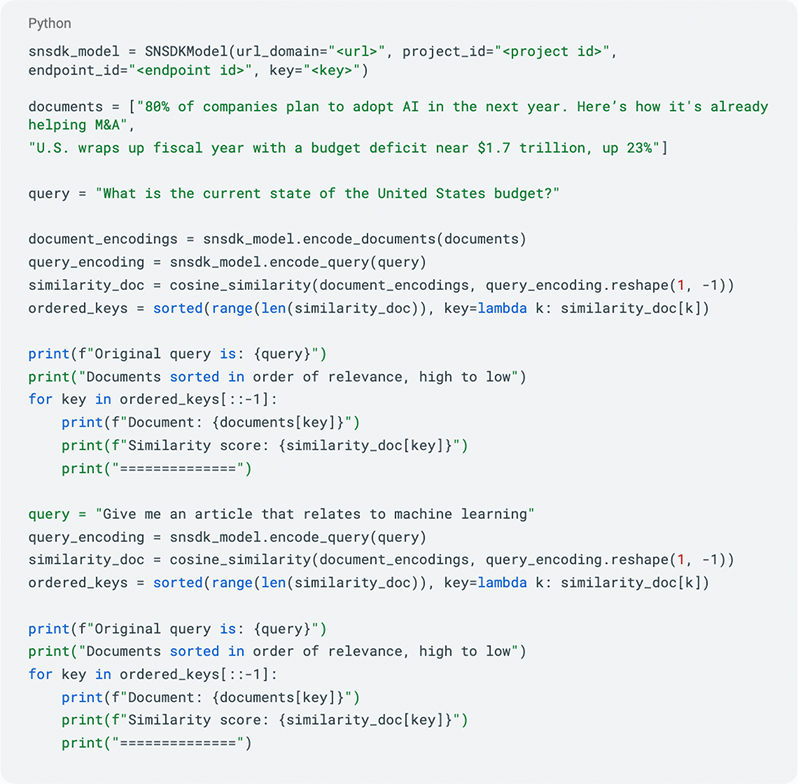

Code snippet for using the embedding endpoint on SambaNova Studio:

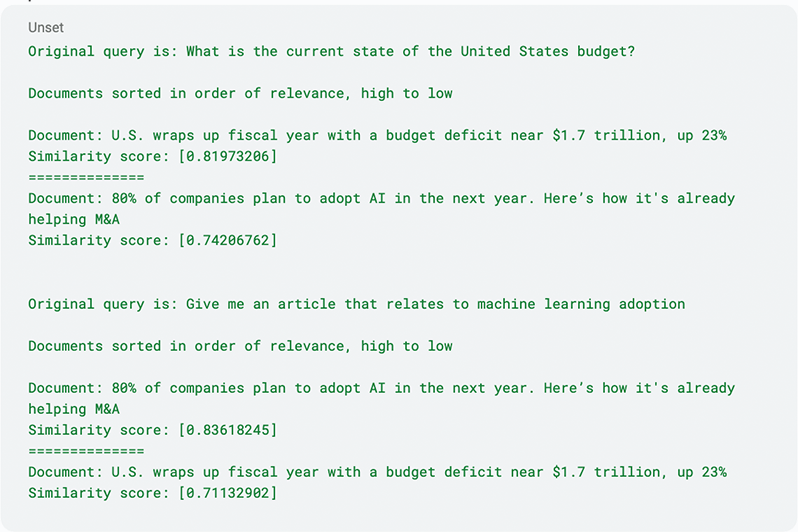

Output:

We are excited to announce text embedding model support and Retrieval Augmented Generation (RAG) capability on SambaNova Studio.

We look forward to seeing what our customers create with this brand-new capability. More embedding models will be added to our platform in the future. Stay tuned!

These two capabilities are key to enabling RAG in LLMs. Our customers can now chain the vector databases or ANN indexes they construct to the family of LLMs already supported on our platform.
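As a rough illustration of what chaining retrieval to an LLM can look like, the Python sketch below places retrieved passages into a prompt before generation. The `retrieve` and `generate` callables are hypothetical placeholders for a vector database lookup and an LLM call, and the prompt wording is an assumption for this example rather than a recommended template.

```python
def build_rag_prompt(question, passages):
    """Format retrieved passages as numbered context ahead of the question."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


def answer_with_rag(question, retrieve, generate, k=3):
    """Retrieve k passages for the question, then pass the augmented prompt to the LLM.

    retrieve(question, k) -> list of passage strings (hypothetical index lookup)
    generate(prompt)      -> model response string (hypothetical LLM call)
    """
    passages = retrieve(question, k)
    return generate(build_rag_prompt(question, passages))


# Usage with stubs, so the flow can be followed without any external service.
def stub_retrieve(question, k):
    return ["Embeddings map text to vectors.", "ANN indexes speed up vector search."][:k]


def stub_generate(prompt):
    return f"(model response to a {len(prompt)}-character prompt)"


print(answer_with_rag("What do embeddings do?", stub_retrieve, stub_generate, k=2))
```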