SambaStack

The most efficient full-stack fast AI inference

Dedicated AI infrastructure simplified

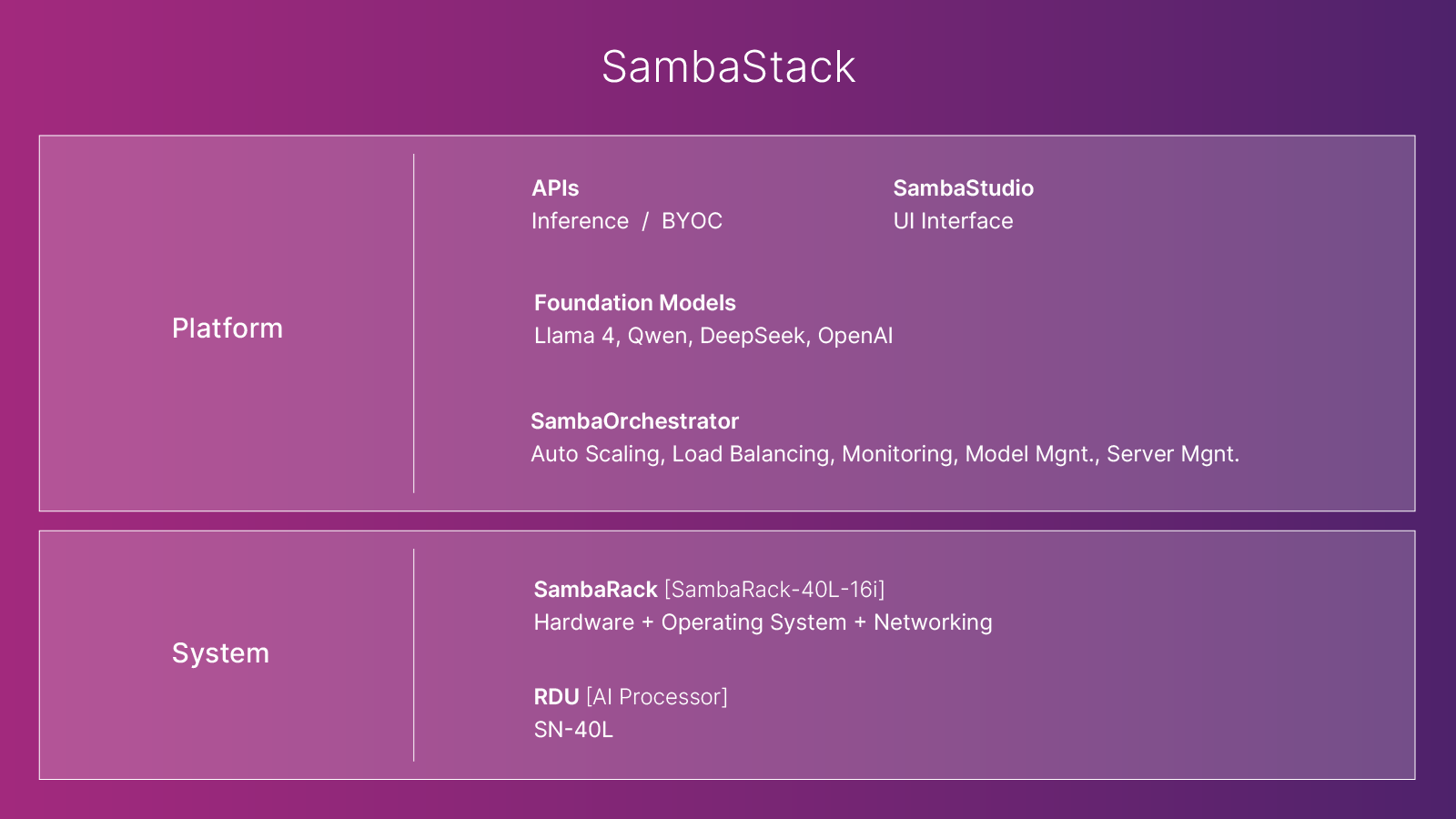

SambaStack offers the industry’s leading hardware and software stack, purpose-built for AI inference. With the flexibility to deploy on-premises or in the cloud, organization are empowered to accelerate their AI innovation with dedicated SambaNova infrastructure.

Chip-to-model intelligence

More AI, less hardware

SambaStack is powered by SambaRack, the most efficient rack for AI using just an average of 10 kW of energy. Run the best AI models with a smaller footprint and lower energy costs.

Learn more → More AI, less hardwareThe best performance on the best models

SambaStack delivers the fastest inference (in tokens/second) on the best AI models, including DeepSeek R1 671B, Llama 4 Maverick, and OpenAI Whisper.

Learn more → The best performance on the best modelsTurnkey private deployments

Our private deployments are designed for speed and performance. Get your data center up and running in weeks, and start processing millions of tokens per second without delay.

Learn more → Turnkey private deploymentsThe most efficient full-stack AI inference solution

SambaStack scales to meet your AI demands.

Deploy purpose-built AI hardware on-premises or with dedicated hosting in the cloud.

Meet the best chip, purpose-built for AI

At the heart of the stack is the Reconfigurable Dataflow Unit (RDU). RDU chips are purpose-built to run AI workloads faster and more efficiently than any other chip on the market.

Related resources

SambaNova Launches First Turnkey AI Inference Solution for Data Centers, Deployable in 90 Days