Achieving Best-in-Class Large Language Model Accuracy in Low-Resource Settings

The opportunity: solving a range of language tasks using large language models with zero-shot and few-shot learning

Recent advances in AI have allowed large language models (LLMs) to deliver impressive capabilities with zero-shot and few-shot learning. These approaches make LLMs highly versatile, giving them the potential to transform every aspect of an enterprise. As a result, LLM providers are racing to improve zero-shot and few-shot accuracy across a wide variety of tasks in order to deliver value.

The challenge: achieving consistent accuracy with zero-shot and few-shot learning

These capabilities, however, are not enough to ensure the adoption of LLMs in enterprise scenarios. Our work with our customers has shown that while zero-shot and few-shot capabilities showcase an LLM’s potential to solve a downstream use case, the accuracy achieved this way is inconsistent: it can depend heavily on the prompt or the examples used to generate the answer. For example, [1] showed that for a binary sentiment analysis task (SST-2), depending on the examples used in few-shot learning, the accuracy of the model can vary from near random chance (54%) to near state of the art (94.3%). This inconsistency and variance are also well documented in academia [1][2][3].
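To make this sensitivity concrete, here is a minimal sketch of how a few-shot prompt is assembled from demonstrations and how accuracy could be compared across demonstration sets. The prompt template, the helper names (`build_few_shot_prompt`, `accuracy_per_demo_set`), and the toy data are illustrative assumptions. `toy_predict` is a deliberately crude stand-in for an LLM: it simply echoes the label of the last demonstration, mimicking the recency bias discussed in [1]. It does not reproduce any real model or the experiments in [1].

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (review text, sentiment label)


def build_few_shot_prompt(demos: List[Example], query: str) -> str:
    """Format the demonstrations, then the query with its label left blank."""
    blocks = [f"Review: {text}\nSentiment: {label}" for text, label in demos]
    blocks.append(f"Review: {query}\nSentiment:")
    return "\n\n".join(blocks)


def accuracy_per_demo_set(
    predict: Callable[[str], str],
    demo_sets: List[List[Example]],
    eval_set: List[Example],
) -> List[float]:
    """Score the same evaluation set once per demonstration set."""
    accuracies = []
    for demos in demo_sets:
        correct = sum(
            predict(build_few_shot_prompt(demos, text)) == label
            for text, label in eval_set
        )
        accuracies.append(correct / len(eval_set))
    return accuracies


def toy_predict(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: echo the last demonstration's label."""
    labels = [
        line.split(": ", 1)[1]
        for line in prompt.splitlines()
        if line.startswith("Sentiment: ")  # the unanswered query line has no label
    ]
    return labels[-1]


if __name__ == "__main__":
    demos_a = [("Dull and slow.", "negative"), ("A joy to watch.", "positive")]
    demos_b = list(reversed(demos_a))  # same examples, different order
    eval_set = [
        ("Loved it.", "positive"),
        ("Wonderful cast.", "positive"),
        ("Great score.", "positive"),
        ("Terrible pacing.", "negative"),
    ]
    print(accuracy_per_demo_set(toy_predict, [demos_a, demos_b], eval_set))
```

With a real model, `predict` would wrap a call to the LLM. Holding the evaluation set fixed and varying only the demonstrations isolates how much of the accuracy is due to the prompt rather than the model.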

Further fine-tuning the LLM with labeled data is an effective way to address this inconsistency. However, in real-world situations, good-quality, task-specific labeled data is often limited. Achieving accuracy that is not only state of the art but also consistent, despite having little labeled data, is key to enabling enterprise adoption.

SambaNova’s approach towards enterprise enablement

To enable consistent accuracy in low-resource settings, SambaNova uses a two-stage approach:

- Pre-training on a high-quality, diverse dataset curated using a data-centric approach

- Implementing a tuning pipeline that combines self-training with the generalization capability of parameter-efficient techniques in low-resource settings

The data-centric approach focuses on collecting a large corpus of diverse datasets from various sources, such as books, news articles, conversations, entertainment, Wikipedia, and research articles. These sources vary not only in type but also in structure: completely unstructured text, prompted data, instructions obtained via weak supervision, and so on. This diversity enables more accurate results when fine-tuning in low-resource settings.

Another consideration is that fine-tuning on a small dataset is challenging and can lead to overfitting. To avoid this, SambaNova fine-tunes an LLM using a training methodology that incorporates practices like parameter-efficient tuning. Accuracy in a low-resource setting can be improved further with advanced techniques like self-training, a method in which the model pseudo-labels an unlabeled dataset that is then used for additional training. By combining self-training with the right methodology, SambaNova ensures that its models perform well in the low-resource enterprise scenario.
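As a generic illustration of self-training (not SambaNova's actual pipeline, which this post does not detail), the sketch below pseudo-labels unlabeled points and folds only the confident ones back into the training set. The nearest-centroid classifier, the distance-based confidence score, the `threshold` value, and all function names are assumptions chosen to keep the example small and dependency-free. In practice the classifier would be the fine-tuned LLM.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def fit_centroids(X: List[Point], y: List[str]) -> Dict[str, Point]:
    """Train the toy classifier: one mean point per label."""
    sums: Dict[str, Tuple[float, float, int]] = {}
    for (a, b), label in zip(X, y):
        sx, sy, n = sums.get(label, (0.0, 0.0, 0))
        sums[label] = (sx + a, sy + b, n + 1)
    return {label: (sx / n, sy / n) for label, (sx, sy, n) in sums.items()}


def predict_with_confidence(centroids: Dict[str, Point], point: Point) -> Tuple[str, float]:
    """Return the nearest label and a softmax-style confidence over distances."""
    scores = {label: math.exp(-math.dist(point, c)) for label, c in centroids.items()}
    best = max(scores, key=scores.get)
    return best, scores[best] / sum(scores.values())


def self_train(
    X_lab: List[Point],
    y_lab: List[str],
    X_unlab: List[Point],
    threshold: float = 0.9,
    max_rounds: int = 5,
) -> Tuple[Dict[str, Point], List[Point]]:
    """Iteratively add confidently pseudo-labeled points to the training set."""
    X_train, y_train = list(X_lab), list(y_lab)
    remaining = list(X_unlab)
    for _ in range(max_rounds):
        centroids = fit_centroids(X_train, y_train)
        confident, uncertain = [], []
        for p in remaining:
            label, conf = predict_with_confidence(centroids, p)
            (confident if conf >= threshold else uncertain).append((p, label))
        if not confident:
            break  # nothing new to learn from; stop early
        for p, label in confident:
            X_train.append(p)
            y_train.append(label)
        remaining = [p for p, _ in uncertain]
    # Return the final model and the points that were never confidently labeled.
    return fit_centroids(X_train, y_train), remaining


if __name__ == "__main__":
    model, leftover = self_train(
        X_lab=[(0.0, 0.0), (10.0, 10.0)],
        y_lab=["neg", "pos"],
        X_unlab=[(1.0, 1.0), (9.0, 9.0), (5.0, 5.0)],
    )
    print(model, leftover)
```

The confidence threshold is the main design choice here: set too low, wrong pseudo-labels contaminate the training set; set too high, few unlabeled examples are ever used.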

SambaNova’s differentiations on pre-trained GPT

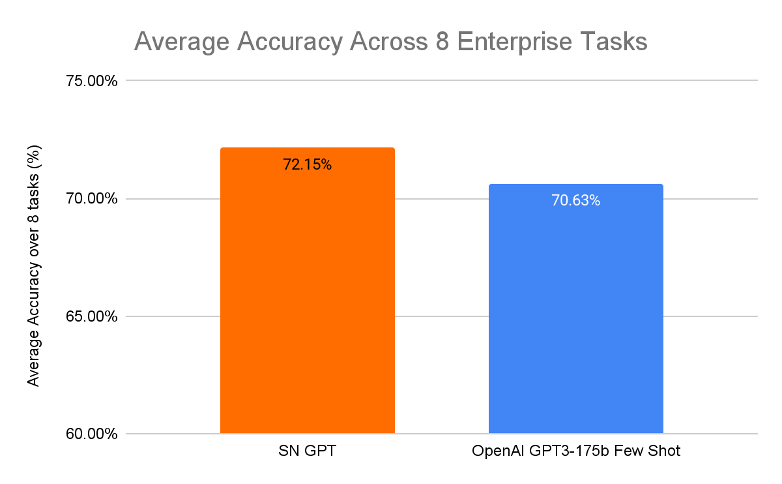

To demonstrate the real-world impact of this methodology, SambaNova applies this data-centric approach to a pre-trained and fine-tuned GPT model. We show that SambaNova’s GPT model not only outperforms leading GPT offerings such as OpenAI’s 175b parameter model [a], but does so with a model that is multiple orders of magnitude smaller. We benchmark our smaller model against the much larger 175b model from OpenAI across 8 tasks spanning text generation, question answering, entailment, and text classification, in scenarios mimicking the low-resource settings required by our enterprise customers. We observe that, on average, our product offering outperforms few-shot results from the OpenAI 175b model by a margin of 1.5%.

To further test the capabilities of our product, we also evaluated the same smaller model against a 175b model from OpenAI fine-tuned using their fine-tuning API [b][c]. We again operate in the low-resource setting, with only 100 labeled examples for each task. We observe that we consistently outperform the fine-tuned 175b model by anywhere from 1.2% to 8%. For a financial NER task, our model achieves an accuracy of 73.60%, while the fine-tuned 175b model achieves 72.40%. For an entailment task, our model achieves 53.89% accuracy, while the 175b model achieves 44.58%.

These results show the impact that a data-centric approach and a well-developed tuning pipeline can have on the quality of a model. Delivering highly accurate pre-trained LLMs is a core focus of SambaNova’s product strategy to help customers get to value faster.

Conclusion

We show that, with a model that is multiple orders of magnitude smaller, SambaNova’s GPT model outperforms the leading 175b GPT model in challenging low-resource downstream settings, a common enterprise scenario where labeled data is hard to acquire. We achieve this milestone by taking a data-centric approach to model development and by developing an advanced training pipeline.

Footnotes

[a] We use the numbers from OpenAI’s paper to ensure that they are truly zero-shot and few-shot. It’s unclear whether the model used by OpenAI’s current API has already incorporated that data in its training corpus.

[b] OpenAI’s fine-tuning API does not provide an option to select between davinci-002 and davinci-003, so it’s unclear which version of the 175b model it uses internally.

[c] We use the default hyperparameters provided by the fine-tuning API.

References

[1] Calibrate Before Use: Improving Few-Shot Performance of Language Models

[2] Multitask Prompted Training Enables Zero-Shot Task Generalization

[3] Do Prompt-Based Models Really Understand the Meaning of their Prompts?