RDU

Reconfigurable Dataflow Units (RDUs) — purpose-built for AI

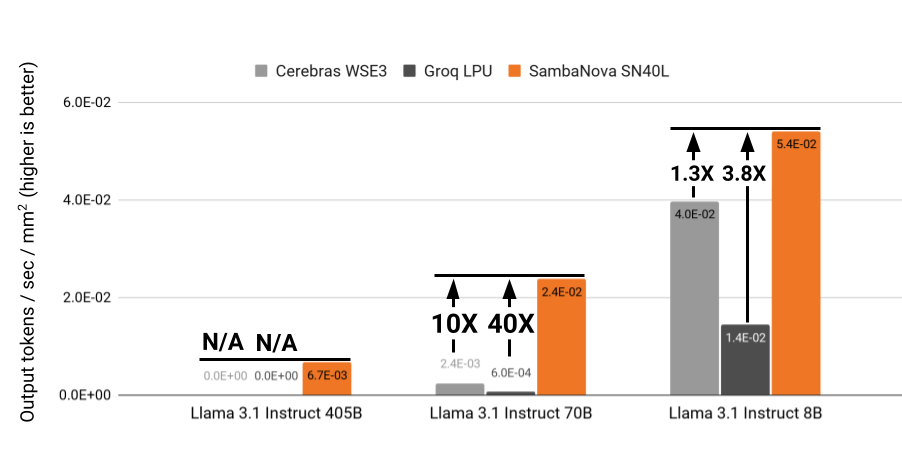

Delivering fast, energy-efficient AI inference

Our fourth-generation RDU, the SN40L, is designed to deliver extraordinary performance. Built to power the most demanding workloads, the SN40L does so with the highest energy efficiency.

Seamlessly achieve high performance

From chips to racks

The combination of 16 SN40L RDUs creates a single, high-performance rack that can run the largest models, such as DeepSeek R1 671B and Llama 4 Maverick, with fast inference. These racks can be integrated seamlessly into any existing air-cooled data center.

Learn more →

Dataflow architecture

Our innovative compute and memory chip layout enables seamless dataflow between operations when processing AI models. This approach results in high-speed data traffic and significant gains in performance and efficiency.

Learn more →

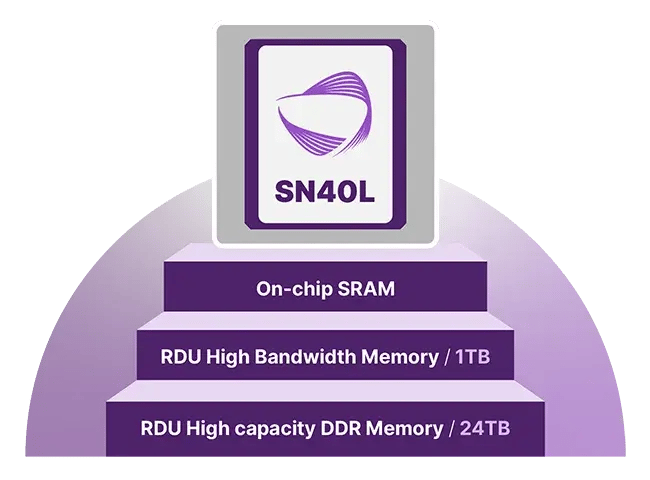

Three-tier memory for efficiency

The SN40L design keeps multiple models in memory at once and switches between them in microseconds. This unique layout enables SambaNova to scale to the largest models, like DeepSeek and Llama 4, all on a single rack.
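To make the idea of keeping several models resident and switching between them more concrete, here is a minimal sketch in Python. It is a toy illustration only, not SambaNova's implementation: it models just two tiers (a small fast tier backed by a larger slow tier) rather than three, and the class name `TieredModelStore`, its methods, and the least-recently-used eviction policy are all assumptions made for the example.

```python
from collections import OrderedDict


class TieredModelStore:
    """Toy model: a small fast tier backed by a larger slow tier (hypothetical)."""

    def __init__(self, fast_capacity: int):
        if fast_capacity < 1:
            raise ValueError("fast_capacity must be at least 1")
        self.fast_capacity = fast_capacity
        self.slow_tier = {}             # name -> weights; holds every registered model
        self.fast_tier = OrderedDict()  # name -> weights; most recently used is last

    def register(self, name, weights):
        """Place a model in the slow tier so it can be activated later."""
        self.slow_tier[name] = weights

    def activate(self, name):
        """Return a model's weights, promoting them to the fast tier if needed."""
        if name in self.fast_tier:
            # Already resident: switching is just a bookkeeping update.
            self.fast_tier.move_to_end(name)
            return self.fast_tier[name]
        weights = self.slow_tier[name]  # raises KeyError if never registered
        if len(self.fast_tier) >= self.fast_capacity:
            # Assumed policy: evict the least recently used model.
            self.fast_tier.popitem(last=False)
        self.fast_tier[name] = weights
        return weights
```

In this sketch, activating a model that is already in the fast tier costs almost nothing, which is the behavior that makes rapid switching between resident models possible. A model that is not resident must first be copied up from the slower tier.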

Learn more →

Future-proof your infrastructure

Our fourth-generation RDU, the SN40L, is the heart of the SambaNova solution platform.

Speed

RDUs are the only solution that runs the largest AI models on a single system with blazing-fast performance.

Learn more →

Energy

RDUs deliver the highest tokens per kilowatt-hour, which is ideal for data centers of all sizes.

Learn more →
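Tokens per kilowatt-hour, the metric named in the Energy section, can be worked out from sustained throughput and power draw. The short Python sketch below shows the arithmetic. The function name `tokens_per_kwh` and the input figures are hypothetical and chosen only for illustration; they are not measured SN40L numbers.

```python
def tokens_per_kwh(tokens_per_second: float, power_watts: float) -> float:
    """Tokens generated per kilowatt-hour at a steady throughput and power draw."""
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    tokens_per_hour = tokens_per_second * 3600  # 3600 seconds in an hour
    kilowatts = power_watts / 1000              # watts to kilowatts
    return tokens_per_hour / kilowatts


# Hypothetical figures for illustration only, not SN40L measurements.
example = tokens_per_kwh(tokens_per_second=1000, power_watts=10_000)
print(f"{example:,.0f} tokens per kWh")
```

Because the metric divides throughput by power, it rewards both higher speed at the same power and the same speed at lower power.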

Agentic

The three-tier memory architecture keeps multiple models loaded and switches between them on demand, which makes it well suited to AI agents.

Learn more →

Related resources